5 Simple Tricks To Turn A Good Computer Vision Model Into A State-of-the-art Model:

How do you turn a good computer vision model into a state-of-the-art model?

I’ve spent countless hours poring over research papers and Kaggle contest solutions, looking for repeatable ways to do this. Side note: Kaggle is a gold mine for finding novel techniques to use in machine learning.

After reading a lot of solutions, some common trends emerged and I’ve distilled them down to 5 things that really move the needle.

Simply put, these 5 techniques will change the way you train your next model.

A couple of quick caveats before we begin. I will not discuss:

Model Architecture choices — These are subjective and almost always, a larger model does better*

Transfer learning & hyperparameter selection — These are the defaults that everyone tries

These tricks work for all models to varying degrees. The best advice is to try them and see if they work.

Here’s a breakdown of each one:

Mixup

Mixup makes the model guess not one but two labels from a mixture of two images.

How it works:

Take two images, their labels & a random weight value

Convert the labels into their one hot representation

Train the model on the weighted average of the images & labels

Mixup is primarily a regularization technique: it keeps the model from overfitting. And since the model has to predict two labels from every training example, it also tends to improve the model’s accuracy.
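The three steps above can be sketched in a few lines of NumPy. This is a minimal sketch, not a reference implementation: the function name `mixup`, the `alpha` parameter, and drawing the weight from a Beta(alpha, alpha) distribution (the choice made in the original Mixup paper) are assumptions for illustration. It expects two float image arrays of the same shape and integer class labels.

```python
import numpy as np

def mixup(image_a, label_a, image_b, label_b, num_classes, alpha=0.2, rng=None):
    """Blend two images and their one-hot labels with one random weight."""
    rng = np.random.default_rng() if rng is None else rng
    # Random weight in [0, 1]; Beta(alpha, alpha) is an assumption here.
    lam = rng.beta(alpha, alpha)
    # Convert the integer labels into their one-hot representation.
    onehot_a = np.eye(num_classes)[label_a]
    onehot_b = np.eye(num_classes)[label_b]
    # Weighted average of the images and of the labels, using the same weight.
    mixed_image = lam * image_a + (1.0 - lam) * image_b
    mixed_label = lam * onehot_a + (1.0 - lam) * onehot_b
    return mixed_image, mixed_label, lam
```

The mixed label is a soft target, so the loss has to accept probabilities rather than a single class index.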

A powerful data augmentation that boosts accuracy 📈

Label Smoothing

The typical workflow when choosing a model for a task is to first find a large enough model, make it overfit the data, and then add regularization to reduce the overfitting. Even after this, your classification model may be overconfident and overfit the training data. In this scenario, use label smoothing.

Here’s how:

Change labels to their one hot representation

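Replace all 1s in the labels with values slightly less than 1 like 0.9 for example

Replace all 0s with values slightly greater than 0 so that each label still sums to 1. With two classes that means 0.1; with more classes, share the 0.1 you removed among the other classes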

Since the model cannot be 100% sure now about any of its predictions, it’s much less likely to overfit.
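Here is a small sketch of those steps in NumPy. The function name `smooth_labels` and the `smoothing` parameter are hypothetical. I am assuming the variant that puts `1 - smoothing` on the true class and shares `smoothing` among the remaining classes, so every row still sums to 1; some libraries spread the smoothing over all classes instead, which gives slightly different numbers.

```python
import numpy as np

def smooth_labels(labels, num_classes, smoothing=0.1):
    """Turn integer labels into smoothed one-hot targets (rows sum to 1)."""
    # Step 1: one-hot representation, shape (batch, num_classes).
    onehot = np.eye(num_classes)[labels]
    # Step 2: the 1s become 1 - smoothing (0.9 by default).
    on_value = 1.0 - smoothing
    # Step 3: the 0s share the removed mass equally (assumed variant).
    off_value = smoothing / (num_classes - 1)
    return onehot * on_value + (1.0 - onehot) * off_value
```

With two classes and `smoothing=0.1` this gives exactly the 0.9 and 0.1 from the steps above.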

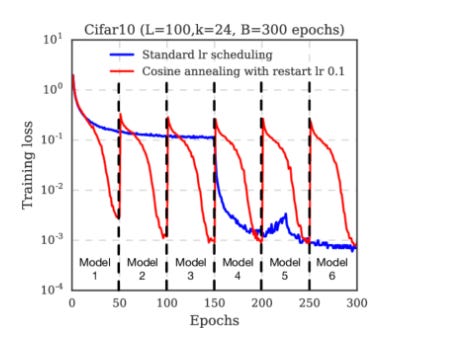

Cosine Learning Rate Decay with Warm Restarts

I said I wouldn’t debate hyperparameters here, and I stand by the claim that schedulers are hyperparameter adjacent 😉. Schedulers are really important because they help the model converge by avoiding local minima. This scheduler has all the ingredients to adjust the learning rate optimally:

Periods of high learning rate to push the model out of a local minimum

Periods of low learning rate to help convergence

Periodic resets to “spark” a sleeping model into action
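To make the shape of this schedule concrete, here is a minimal sketch in plain Python. The function name and the default `lr_max` and `lr_min` values are hypothetical, and I am assuming every cycle has the same length (the original formulation also allows cycles that grow over time).

```python
import math

def cosine_warm_restart_lr(step, cycle_length, lr_max=0.1, lr_min=0.0):
    """Learning rate at `step` for cosine decay that restarts every cycle."""
    # Progress through the current cycle, in [0, 1). The modulo is the
    # "warm restart": it snaps the rate back to lr_max at each new cycle.
    position = (step % cycle_length) / cycle_length
    # Cosine curve from lr_max (start of cycle) down toward lr_min (end).
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * position))
```

If you train with PyTorch, you probably do not need to write this yourself: `torch.optim.lr_scheduler.CosineAnnealingWarmRestarts` provides the same kind of schedule.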

Small changes like choosing the right scheduler can make a big difference in how well a model trains. There is an ongoing debate about whether the warm restarts actually help. For more details, check out this thread:

CutMix

What happens when you cut out patches from an image and replace them with patches from another image? You get an awesome yet simple technique to improve your model. This is called CutMix. CutMix combines two other techniques: Cutout, which removes entire parts of images, and Mixup, which we saw above. Simply put:

Cutout + Mixup = CutMix

One important point to remember is that you have to mix the labels proportionally to how much of each image is in the augmented image. The model generalizes better due to strong regularization.
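Here is a rough NumPy sketch of the idea for a single pair of images. The function name `cutmix` and the way the patch is sampled (a uniform random target area around a random center) are assumptions for illustration. The part that matters is the last step, where the label weight is computed from the area that was actually pasted.

```python
import numpy as np

def cutmix(image_a, label_a, image_b, label_b, num_classes, rng=None):
    """Paste a random patch of image_b into image_a and mix labels by area."""
    rng = np.random.default_rng() if rng is None else rng
    height, width = image_a.shape[:2]
    # Target fraction of image_a to keep (sampling scheme is an assumption).
    lam = rng.uniform(0.0, 1.0)
    cut_h = int(height * np.sqrt(1.0 - lam))
    cut_w = int(width * np.sqrt(1.0 - lam))
    # Random patch center; the patch is clipped at the image borders.
    cy, cx = rng.integers(0, height), rng.integers(0, width)
    top, bottom = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, height)
    left, right = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, width)
    mixed = image_a.copy()
    mixed[top:bottom, left:right] = image_b[top:bottom, left:right]
    # Label weight = fraction of pixels that really came from image_b.
    frac_b = (bottom - top) * (right - left) / (height * width)
    onehot = np.eye(num_classes)
    mixed_label = (1.0 - frac_b) * onehot[label_a] + frac_b * onehot[label_b]
    return mixed, mixed_label
```

Recomputing the fraction after clipping keeps the label honest when the patch hangs over the edge of the image.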

Progressive Resizing

This is one of my personal favorites. I learned this from the FastAI course and have come back to it over and over again. Almost all models do better when the resolution of the image going in is larger. The problem is that larger images take much longer to train and often, the compute power needed to train them is beyond the reach of most practitioners. Progressive resizing solves this by:

Starting training with smaller image sizes — This allows you to train faster

Gradually increasing the resolution during training — This improves the performance

The key takeaway is that you spend most of the training time at lower resolutions and then fine-tune the model at larger resolutions.
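One simple way to wire this into a training loop is a lookup from epoch to image size. The sketch below is an assumption about how you might organize it, not the FastAI API: the function name, the schedule format, and the specific sizes and epochs are all made up for illustration.

```python
def image_size_for_epoch(epoch, schedule):
    """Return the image size to train at for a given epoch.

    `schedule` is a list of (start_epoch, size) pairs sorted by start_epoch.
    """
    size = schedule[0][1]
    for start_epoch, stage_size in schedule:
        # Keep the size of the latest stage that has already started.
        if epoch >= start_epoch:
            size = stage_size
    return size

# Hypothetical 10-epoch run: most epochs at low resolution,
# then a short fine-tuning stage at full resolution.
schedule = [(0, 128), (6, 192), (9, 256)]
sizes = [image_size_for_epoch(epoch, schedule) for epoch in range(10)]
```

At the start of each epoch you would rebuild the data loader (or its resize transform) with the returned size and keep training the same model weights.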

Recap — 5 tricks that will change the way you train your next CV model

Mixup

Label Smoothing

Cosine LR Decay with Warm Restarts

CutMix

Progressive Resizing

Try these out and let me know if they worked for you. Do you have tricks that you keep going back to? I’d love to hear your approach.
