AI Distillation #6

Personalized LLMs, MLOps 101, Diffusion Explainer, and more...

Here are some excellent resources on various AI topics that I found over the past week.

Diffusion Explainer

Diffusion Explainer is an interactive tool for understanding diffusion models, specifically how Stable Diffusion turns a text prompt into an image. It gives step-by-step visual explanations of how the model works, which makes complex concepts more accessible to researchers, students, and practitioners. It's worth checking out if you want a deeper, more intuitive understanding of the diffusion process.
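Here is a small companion to the explainer: a sketch of the forward (noising) process that a diffusion model learns to undo. It uses the standard DDPM closed form x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, so any step t can be sampled directly from the clean input. The linear β schedule (1e-4 to 0.02 over 1000 steps) and all function names are my own assumptions for illustration. Stable Diffusion applies this process to a learned latent representation with a different schedule, so this is not the explainer's code.

```python
import math
import random


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Linearly spaced noise variances beta_1..beta_T (an assumed DDPM-style schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: list[float]) -> list[float]:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s): the fraction of signal variance left at step t."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0: list[float], t: int, alpha_bars: list[float], rng: random.Random) -> list[float]:
    """Sample x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I) in one shot from x0."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    x0 = [1.0, -1.0, 0.5, 0.0]  # a toy stand-in for an image latent
    for t in (0, 250, 500, 999):
        noisy = add_noise(x0, t, alpha_bars, rng)
        print(t, round(alpha_bars[t], 4), [round(v, 2) for v in noisy])
```

Early steps barely perturb the input, while by the last step ᾱ_t is close to zero and x_t is essentially pure Gaussian noise. The denoiser is trained to walk that path backwards.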

MLOps 101

This MLOps-Basics repo is an excellent introduction to the fundamentals of MLOps (Machine Learning Operations). It covers essential tools and practices required for deploying, monitoring, and maintaining machine learning models in production. The repository includes tutorials and examples for setting up CI/CD pipelines, version control, model monitoring, and more. This is ideal for data scientists and engineers looking to integrate MLOps workflows into their projects.

Iteration of Thought: Leveraging Inner Dialogue for Reasoning

Iteration of Thought (IoT) improves LLM reasoning through an inner dialogue. An Inner Dialogue Agent (IDA) generates context-specific prompts from the original query and the model's previous response, and an LLM Agent (LLMA) uses those prompts to refine its answer. Iterating this loop helps on complex tasks such as multi-hop question answering and puzzle solving, where the authors report gains over existing methods like Chain of Thought (CoT).
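The IDA/LLMA loop described above can be sketched as follows. This is a minimal illustration, not the authors' implementation. Both agents are hypothetical callables, which in practice would wrap LLM calls. The loop stops either when the LLM agent flags its answer as final or when a fixed iteration budget runs out. `toy_ida` and `make_toy_llma` are stand-ins I made up so the control flow can run without a model.

```python
from typing import Callable

# Hypothetical agent interfaces; in a real system each would wrap an LLM call.
InnerDialogueAgent = Callable[[str, str], str]     # (query, previous_response) -> guiding prompt
LLMAgent = Callable[[str, str], tuple[str, bool]]  # (query, guiding_prompt) -> (response, is_final)


def iteration_of_thought(query: str, ida: InnerDialogueAgent, llma: LLMAgent, max_iters: int = 5) -> list[str]:
    """Alternate IDA prompts and LLMA responses; return every intermediate response."""
    responses: list[str] = []
    previous = ""
    for _ in range(max_iters):
        prompt = ida(query, previous)             # IDA reflects on the last answer and writes a new prompt
        response, is_final = llma(query, prompt)  # LLMA answers again under that guidance
        responses.append(response)
        previous = response
        if is_final:                              # early stop; otherwise use the whole budget
            break
    return responses


def toy_ida(query: str, previous: str) -> str:
    """Stand-in IDA: asks the model to revisit its previous answer."""
    return f"Re-examine your last answer ({previous or 'none yet'}) to '{query}' and fix any gaps."


def make_toy_llma(converge_after: int) -> LLMAgent:
    """Stand-in LLMA that declares its answer final after a fixed number of calls."""
    calls = 0

    def llma(query: str, prompt: str) -> tuple[str, bool]:
        nonlocal calls
        calls += 1
        return f"draft {calls}", calls >= converge_after

    return llma


if __name__ == "__main__":
    print(iteration_of_thought("toy question", toy_ida, make_toy_llma(3)))
    # ['draft 1', 'draft 2', 'draft 3']
```

Returning every intermediate response, rather than only the last one, makes it easy to inspect how the inner dialogue changed the answer between rounds.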

LVCD: Reference-Based Lineart Video Colorization with Diffusion Models

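LVCD colorizes lineart (sketch) animation videos, using a colored reference frame to specify the colors. Previous approaches colorize frame by frame with image generative models. Instead, the authors build on a large pretrained video diffusion model, which gives more temporally consistent results and handles large motions better. They add a sketch-guided ControlNet to condition generation on the lineart and use Reference Attention to transfer colors from the reference frame to frames with fast, large motions. They also introduce a sequential sampling scheme with overlapped blending so the model can colorize videos longer than its fixed clip length.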

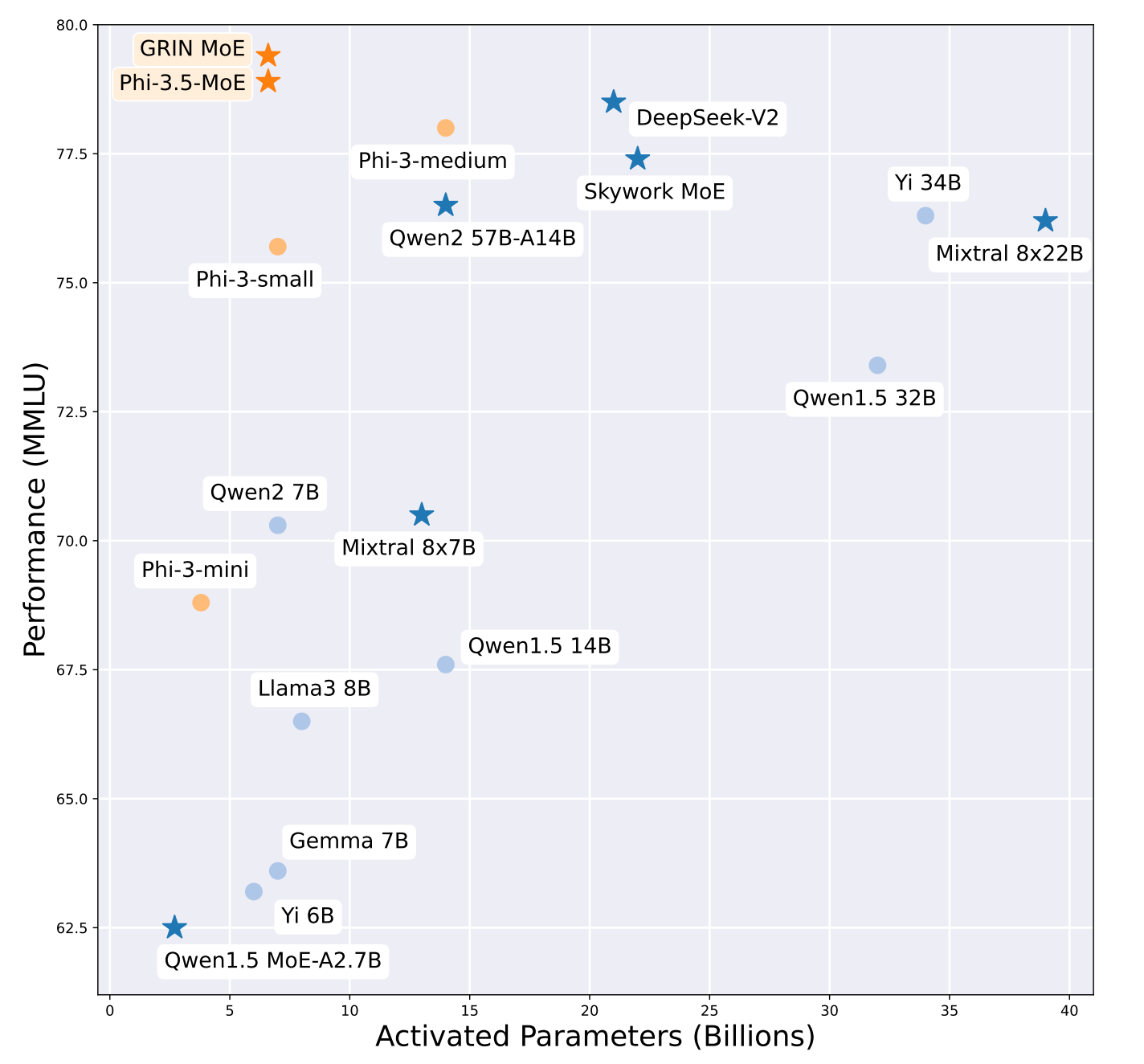

Microsoft's GRIN-MoE

GRIN-MoE (GRadient-INformed MoE) improves the training of mixture-of-experts (MoE) models. In an MoE, each token is routed to a few experts, and that routing is a discrete choice that ordinary backpropagation cannot differentiate through. GRIN uses sparse gradient estimation for expert routing and configures model parallelism to avoid token dropping. This lets the model scale efficiently while keeping accuracy high, particularly for large-scale tasks where computational efficiency is crucial.
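For context, here is a sketch of the generic top-k MoE forward pass whose routing step GRIN's gradient estimation targets. To be clear, this is not GRIN's method. It only shows standard top-k routing with a linear router and gate weights renormalized over the selected experts. The linear router, the toy experts, and all names are assumptions for illustration.

```python
import math
from typing import Callable, Sequence

Vector = list[float]
Expert = Callable[[Vector], Vector]  # assumed to map a vector to one of the same size


def router_logits(x: Vector, router_weights: Sequence[Vector]) -> Vector:
    """One logit per expert: logit_e = w_e . x (a linear router, a common choice)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]


def softmax(xs: Sequence[float]) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router_weights: Sequence[Vector], experts: Sequence[Expert], k: int = 2) -> Vector:
    """Send x to the top-k experts and mix their outputs by renormalized gate weights."""
    logits = router_logits(x, router_weights)
    # Discrete top-k selection: this step has no ordinary gradient, which is
    # the problem gradient-estimation approaches like GRIN's address.
    top = sorted(range(len(experts)), key=lambda e: logits[e], reverse=True)[:k]
    gates = softmax([logits[e] for e in top])
    out = [0.0] * len(x)
    for gate, e in zip(gates, top):
        y = experts[e](x)  # only the k selected experts are evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    experts = [lambda v: [2.0 * a for a in v], lambda v: [a + 1.0 for a in v], lambda v: [-a for a in v]]
    weights = [[0.5, 0.1], [0.2, 0.4], [-1.0, 0.0]]
    print(moe_forward([1.0, 2.0], weights, experts, k=2))
```

Compute savings come from running only k of the experts per token. The quality of the learned router depends on how well gradients reach it through the top-k step.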

LLMs + Persona-Plug = Personalized LLMs

Baidu proposes Persona-Plug (PPlug) for personalizing LLMs without fine-tuning the LLM itself. A lightweight, plug-in user embedder module models the user's historical context to build a user-specific embedding. That embedding is attached to the input, so the frozen LLM can generate outputs tailored to the user's preferences. Check out the paper for more.
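The plug-in idea can be sketched roughly as follows. Past user behaviors (already encoded as vectors) are pooled into one user vector, weighted by their relevance to the current input. The result is projected into the LLM's embedding space and prepended as an extra "soft token". This is a hypothetical simplification: the dot-product relevance weighting, the linear projection, and all function names are my assumptions, not Persona-Plug's actual encoder, aggregator, or projector.

```python
import math

Vector = list[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_history(history: list[Vector], current_input: Vector) -> Vector:
    """Input-aware pooling: past behaviors more similar to the current input get more weight."""
    weights = softmax([dot(h, current_input) for h in history])
    return [sum(w * h[j] for w, h in zip(weights, history)) for j in range(len(current_input))]


def project(user_vec: Vector, projection: list[Vector]) -> Vector:
    """Map the user vector into the LLM's token-embedding space (one row per LLM dimension)."""
    return [dot(row, user_vec) for row in projection]


def prepend_user_token(user_embedding: Vector, token_embeddings: list[Vector]) -> list[Vector]:
    """Attach the user embedding as one extra soft token; the LLM's weights are untouched."""
    return [user_embedding] + token_embeddings


if __name__ == "__main__":
    history = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]  # toy encodings of past user behaviors
    query = [1.0, 0.0]                               # toy encoding of the current input
    user_vec = aggregate_history(history, query)
    user_token = project(user_vec, [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])  # toy 2 -> 3 projection
    print(prepend_user_token(user_token, [[0.1, 0.2, 0.3]]))
```

Only the small embedder and projector would need training here. This is the main appeal over per-user fine-tuning: one frozen LLM can serve every user.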

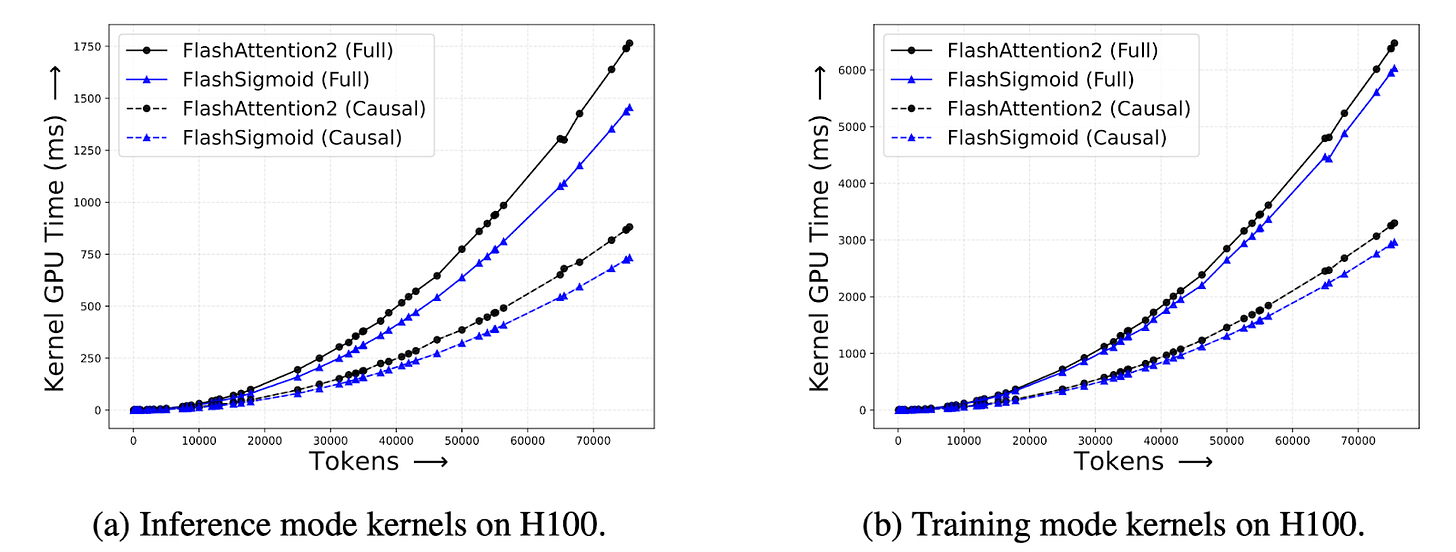

Sigmoid Attention Makes Transformers Go Brrr…

Apple's new paper revisits sigmoid attention in transformers as an alternative to softmax attention. The authors prove that transformers with sigmoid attention are universal function approximators with better regularity than softmax attention. They also find that stabilizing large initial attention norms early in training is key to making sigmoid attention work. Additionally, they introduce FlashSigmoid, a hardware-aware, memory-efficient implementation that gives a 17% inference kernel speed-up over FlashAttention-2 on H100 GPUs. Check out the project page for the code and paper.
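To make the softmax-versus-sigmoid difference concrete, here is a naive, quadratic-time reference sketch in plain Python. It is not the paper's code and nothing like the fused GPU kernel. Softmax attention normalizes each row of scores across all keys, while sigmoid attention applies σ(QKᵀ/√d + b) to every score independently. The default bias b = −log n (n = number of keys) reflects my reading of the paper's recommendation; treat it as an assumption.

```python
import math
from typing import Optional

Matrix = list[list[float]]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def scaled_scores(q: Matrix, k: Matrix) -> Matrix:
    """S = Q K^T / sqrt(d)."""
    d = len(q[0])
    return [[sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k] for qi in q]


def mix(weights: list[float], v: Matrix) -> list[float]:
    """Weighted sum of the rows of V."""
    return [sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))]


def softmax_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    out = []
    for row in scaled_scores(q, k):
        m = max(row)
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        out.append(mix([e / total for e in exps], v))  # each weight row sums to exactly 1
    return out


def sigmoid_attention(q: Matrix, k: Matrix, v: Matrix, bias: Optional[float] = None) -> Matrix:
    # Each weight is sigmoid(score + b), computed independently: no normalization across keys.
    # With b = -log(n) and near-zero scores, every weight is 1/(n+1), so a row sums to
    # n/(n+1), keeping the output scale close to softmax attention's.
    b = -math.log(len(k)) if bias is None else bias
    return [mix([sigmoid(s + b) for s in row], v) for row in scaled_scores(q, k)]


if __name__ == "__main__":
    q = [[0.0, 0.0]]
    k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    v = [[1.0], [1.0], [1.0]]
    print(softmax_attention(q, k, v), sigmoid_attention(q, k, v))
```

Because no row-wise max or sum is needed, sigmoid weights can be computed tile by tile without the running normalization that softmax kernels must track. That independence is part of what makes a fast kernel attractive.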

Image Matching: Local Features & Beyond

In this four-hour CVPR workshop, we get a ringside seat to everything in the world of feature matching, from 3D reconstruction to attention-based matching. The second half of the workshop covers a 2023 Kaggle feature-matching challenge, and you're sure to find inspiration from the presenters. If you want to jump to specific parts of the video, a table of contents appears at roughly the 56-second mark.
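As a reference point for the matchers discussed in the workshop, here is a sketch of the classical baseline many of them are compared against. It matches local descriptors by mutual nearest neighbors and filters the results with Lowe's ratio test. This is not taken from the workshop. The descriptors are plain float lists, and the 0.8 ratio threshold is an assumed default.

```python
import math

Descriptor = list[float]


def l2(a: Descriptor, b: Descriptor) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_two(d: Descriptor, pool: list[Descriptor]) -> tuple[int, float, float]:
    """Index of the closest descriptor in pool, its distance, and the second-closest distance."""
    ranked = sorted((l2(d, p), j) for j, p in enumerate(pool))
    best_dist, best_j = ranked[0]
    second_dist = ranked[1][0] if len(ranked) > 1 else math.inf
    return best_j, best_dist, second_dist


def mutual_nn_matches(desc_a: list[Descriptor], desc_b: list[Descriptor], ratio: float = 0.8) -> list[tuple[int, int]]:
    """Keep (i, j) only if i and j are each other's nearest neighbor and pass the ratio test."""
    best_in_a = [nearest_two(db, desc_a)[0] for db in desc_b]  # reverse direction: B -> A
    matches = []
    for i, da in enumerate(desc_a):
        j, d1, d2 = nearest_two(da, desc_b)
        # Ratio test: reject ambiguous matches whose runner-up is almost as close.
        if best_in_a[j] == i and d1 < ratio * d2:
            matches.append((i, j))
    return matches


if __name__ == "__main__":
    a = [[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]]
    b = [[0.1, 0.0], [10.0, 0.2], [1.0, 9.0]]
    print(mutual_nn_matches(a, b))  # [(0, 0), (1, 1), (2, 2)]
```

Learned and attention-based matchers aim to replace these hand-set rules with matching that uses context from the whole image, which helps when descriptors alone are ambiguous.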