AI Distillation #7

Advanced NLP Course, Omni-Modal Models, LLaVA for 3D, Generative AI for RecSys, and more...

Here are some excellent resources covering various AI topics that I found over the past week.

MaskLLM: Learnable Semi-Structured Sparsity for LLMs

MaskLLM introduces learnable semi-structured (N:M) sparsity for LLMs, enabling efficient pruning while maintaining performance. It treats the choice of sparsity mask as a learnable distribution and uses Gumbel Softmax sampling to make that choice differentiable, so the masks can be trained end-to-end and then reduce computational overhead at inference. The learned masks can also be transferred across domains for lossless compression. For more details, check out the project page.
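To make the Gumbel Softmax idea concrete, here is a minimal, illustrative sketch for a single group of four weights under 2:4 sparsity (keep 2 of every 4). It is not MaskLLM's code: the per-group logits, the temperature `tau`, and all function names are hypothetical, and real training would run this on tensors with autograd rather than plain Python lists. During training, the soft mask is a probability-weighted mix of the six candidate masks; at inference, the single most likely candidate is kept.

```python
import itertools
import math
import random

# All 2:4 masks for a group of 4 weights: choose which 2 positions to keep (6 candidates).
CANDIDATES = [tuple(1.0 if i in keep else 0.0 for i in range(4))
              for keep in itertools.combinations(range(4), 2)]

def gumbel_softmax(logits, tau, rng):
    # Perturb each logit with Gumbel(0, 1) noise, then apply a temperature-scaled softmax.
    noisy = []
    for logit in logits:
        u = min(max(rng.random(), 1e-10), 1.0 - 1e-10)  # avoid log(0)
        noisy.append((logit - math.log(-math.log(u))) / tau)
    m = max(noisy)
    exps = [math.exp(v - m) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]

def soft_mask(logits, tau, rng):
    # Differentiable (training-time) mask: probability-weighted mix of candidate masks.
    probs = gumbel_softmax(logits, tau, rng)
    return [sum(p * cand[i] for p, cand in zip(probs, CANDIDATES)) for i in range(4)]

def hard_mask(logits):
    # Inference-time mask: the most likely candidate, so exactly 2 of 4 weights survive.
    best = max(range(len(CANDIDATES)), key=lambda k: logits[k])
    return CANDIDATES[best]

weights = [0.8, -0.1, 0.05, -1.2]
logits = [0.0, 0.0, 3.0, 0.0, 0.0, 0.0]  # hypothetical learned logits favoring positions 0 and 3
rng = random.Random(0)
print(soft_mask(logits, tau=0.5, rng=rng))
print([w * m for w, m in zip(weights, hard_mask(logits))])
```

Because every candidate keeps exactly two weights, the soft mask always sums to 2, and lowering `tau` pushes it toward one hard candidate.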

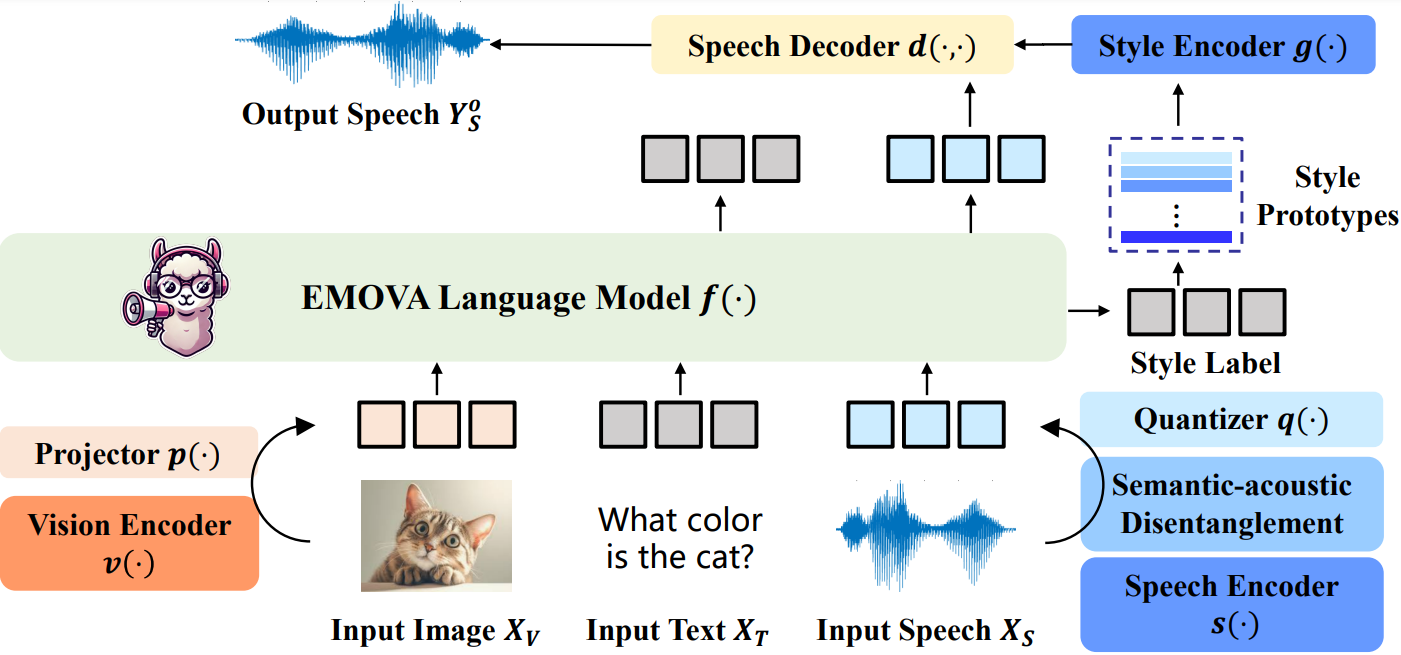

EMOVA: Empowering LLMs to See, Hear & Speak

EMOVA (Emotionally Omnipresent Voice Assistant) empowers language models to handle text, vision, and speech end-to-end, adding vivid emotional expression to spoken responses. It integrates visual and acoustic inputs to generate emotionally nuanced interactions. EMOVA also features flexible control over speech styles like emotions and pitch, making it a step forward in natural multimodal communication.

Emu3: Next-Token Prediction Is All You Need

Emu3 is a suite of multimodal models from BAAI trained solely with next-token prediction. It tokenizes images, text, and videos into a shared discrete space and trains a single transformer from scratch on mixed multimodal sequences, so one model handles both generation and understanding without diffusion or compositional architectures. The project focuses on enhancing general capabilities and accessibility for researchers and developers.
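The core idea of "next-token prediction is all you need" can be sketched as follows: once text and images are both discrete tokens in one vocabulary, a single causal language-modeling objective covers both. This is a toy illustration, not Emu3's tokenizer or vocabulary: the vocabulary sizes, the `BOI`/`EOI` marker ids, and the offset scheme are all hypothetical.

```python
# Hypothetical shared vocabulary: text ids first, then visual codebook ids, then markers.
TEXT_VOCAB = 1000
VISUAL_VOCAB = 1000
VISUAL_OFFSET = TEXT_VOCAB              # visual code c becomes token VISUAL_OFFSET + c
BOI = TEXT_VOCAB + VISUAL_VOCAB         # hypothetical "begin of image" marker
EOI = BOI + 1                           # hypothetical "end of image" marker

def build_sequence(text_ids, image_codes):
    # Flatten a caption and its (already quantized) image into one token stream.
    for c in image_codes:
        assert 0 <= c < VISUAL_VOCAB, "visual code outside the codebook"
    return text_ids + [BOI] + [VISUAL_OFFSET + c for c in image_codes] + [EOI]

def next_token_pairs(seq):
    # Standard causal-LM targets: the token at position i+1 is predicted from tokens 0..i,
    # regardless of whether it is a text token or an image token.
    return seq[:-1], seq[1:]

seq = build_sequence([5, 17, 42], [3, 3, 7, 1])
inputs, targets = next_token_pairs(seq)
print(seq)
```

Video would follow the same pattern with more visual tokens per sequence; the point is that no modality-specific loss is needed.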

LLaVA-3D: Empowering LLMs with 3D Awareness

LLaVA-3D extends the LLaVA framework to 3D vision-language tasks. Rather than relying on a separate 3D encoder, it augments 2D patch features from multi-view images with 3D position embeddings, producing "3D patches" the model can reason over. Users can interact with 3D scenes in natural language, asking questions about spatial relations and object properties, and combining 2D visual cues with 3D positional information improves the model's scene comprehension.
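Below is a rough sketch of how a 2D patch feature might be turned into a position-aware "3D patch": back-project the patch center to 3D using its depth and a pinhole camera model, encode the 3D coordinates, and add that encoding to the feature. The sinusoidal encoding, the camera-frame coordinates (no camera-to-world transform), and all function names are assumptions for illustration; LLaVA-3D's actual position embedding and pooling may differ.

```python
import math

def backproject(u, v, depth, fx, fy, cx, cy):
    # Pinhole camera model: pixel (u, v) at the given depth -> camera-frame (x, y, z).
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)

def sinusoidal_3d(xyz, dim):
    # Encode each of x, y, z with dim // 3 sin/cos features (dim must be divisible by 6).
    assert dim % 6 == 0, "dim must be divisible by 6"
    per_axis = dim // 3
    out = []
    for coord in xyz:
        for k in range(per_axis // 2):
            freq = 1.0 / (10000 ** (2 * k / per_axis))
            out.append(math.sin(coord * freq))
            out.append(math.cos(coord * freq))
    return out

def make_3d_patch(feature, u, v, depth, intrinsics):
    # Hypothetical "3D patch": the 2D patch feature plus an embedding of its 3D position.
    pos = sinusoidal_3d(backproject(u, v, depth, *intrinsics), len(feature))
    return [f + p for f, p in zip(feature, pos)]

intrinsics = (500.0, 500.0, 320.0, 240.0)   # hypothetical fx, fy, cx, cy
feature = [0.1] * 12                        # stand-in for a CLIP-style patch feature
patch = make_3d_patch(feature, u=400.0, v=200.0, depth=2.5, intrinsics=intrinsics)
print(len(patch))
```

The same 2D feature seen at different depths or viewpoints now carries a different 3D signature, which is what lets the language model reason about spatial relations.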

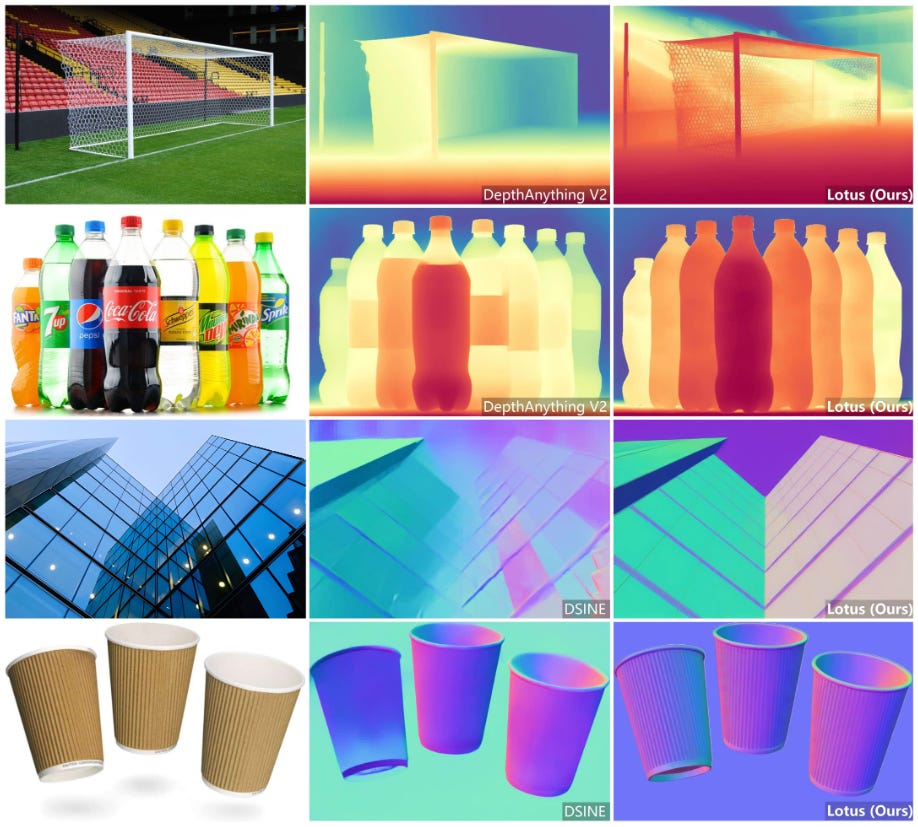

Lotus: Diffusion-Based High-quality Dense Prediction

Lotus is a diffusion-based visual foundation model for dense geometry prediction tasks like depth and surface normal estimation. Instead of predicting noise, it directly predicts the annotation and reformulates diffusion as a single-step process, which makes inference much faster and, according to the authors, more accurate. It achieves state-of-the-art zero-shot performance while training on relatively little data, showing promise for efficient, high-quality dense prediction.
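One way to see why predicting the annotation directly can help: under the usual forward process $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$, an error $\delta$ in a noise prediction becomes an error of $\sqrt{(1-\bar\alpha_t)/\bar\alpha_t}\,\delta$ in the recovered $x_0$. The sketch below computes that gain for the standard DDPM linear beta schedule. The schedule values are common defaults, not necessarily the ones Lotus uses, and this illustrates the motivation only, not Lotus's training code.

```python
import math

def linear_alpha_bars(T=1000, beta_start=1e-4, beta_end=0.02):
    # Cumulative products alpha_bar_t for the DDPM linear beta schedule.
    bars, prod = [], 1.0
    for t in range(T):
        beta = beta_start + (beta_end - beta_start) * t / (T - 1)
        prod *= 1.0 - beta
        bars.append(prod)
    return bars

def add_noise(x0, eps, abar):
    # Forward process: x_t = sqrt(abar) * x0 + sqrt(1 - abar) * eps
    return math.sqrt(abar) * x0 + math.sqrt(1.0 - abar) * eps

def x0_from_eps(xt, eps_hat, abar):
    # Invert the forward process given a noise prediction eps_hat.
    return (xt - math.sqrt(1.0 - abar) * eps_hat) / math.sqrt(abar)

def eps_error_gain(abar):
    # An error d in eps_hat turns into an error of gain * d in the recovered x0.
    return math.sqrt((1.0 - abar) / abar)

bars = linear_alpha_bars()
for t in (0, 500, 999):
    print(t, round(eps_error_gain(bars[t]), 3))
```

At the noisiest timestep the gain exceeds 100, so a small noise-prediction error can wreck a depth map, whereas predicting $x_0$ directly avoids this amplification.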

Recommendation with Generative Models

This book explores how generative models such as GANs, VAEs, and transformers can improve recommender systems in accuracy, diversity, and personalization. It introduces a taxonomy of deep generative models for recommendation (ID-driven models, large language models, and multimodal models) and highlights their role in generating structured outputs, handling multimedia content, and enabling more dynamic recommendations in domains such as e-commerce and media.

Games, Goats, and General Intelligence

In this interview, DeepMind's Research Engineering Lead, Frederic Besse, discusses research such as SIMA (Scalable Instructable Multiworld Agent) and what we can expect from future agents that can understand and safely carry out a wide range of tasks, both online and in the real world.

Interpreting the Weight Space of Customized Diffusion Models

In this paper, the authors show that the weights of fine-tuned diffusion models form a latent space of identities. Using over 60,000 personalized models, they build a weight space named weights2weights (w2w) that supports sampling new identities, editing attributes (e.g., adding a beard), and inverting an image into a model that reconstructs that identity. The paper presents w2w as an interpretable space for manipulating identity in diffusion models.
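Treating each fine-tuned model as a point in weight space makes sampling and editing simple vector operations. The toy sketch below uses tiny made-up 3-dimensional "weight vectors", fits a per-coordinate Gaussian to sample a new identity, and edits an attribute by moving along a direction. As we understand it, the paper works with a dimensionality-reduced space of low-rank fine-tuned weights and finds attribute directions with linear classifiers; here the direction is a normalized difference of class means, a simpler swapped-in technique, and all data and names are hypothetical.

```python
import math
import random

def mean(vectors):
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]

def attribute_direction(vectors, labels):
    # Normalized difference of class means (stand-in for a linear classifier's normal).
    pos = mean([v for v, y in zip(vectors, labels) if y])
    neg = mean([v for v, y in zip(vectors, labels) if not y])
    d = [p - q for p, q in zip(pos, neg)]
    norm = math.sqrt(sum(x * x for x in d))
    return [x / norm for x in d]

def edit(w, direction, alpha):
    # Move one model's weights along the attribute direction by strength alpha.
    return [wi + alpha * di for wi, di in zip(w, direction)]

def sample_identity(vectors, rng):
    # Draw a new "model" from a per-coordinate Gaussian fit to the population of models.
    mu = mean(vectors)
    n = len(vectors)
    sd = [math.sqrt(sum((v[i] - mu[i]) ** 2 for v in vectors) / n) for i in range(len(mu))]
    return [rng.gauss(m, s) for m, s in zip(mu, sd)]

# Hypothetical flattened weights of four personalized models; label = "has a beard".
models = [[1.0, 0.2, 0.0], [0.9, 0.1, 0.1], [0.0, 0.2, 0.1], [0.1, 0.1, 0.0]]
beard = [True, True, False, False]
direction = attribute_direction(models, beard)
bearded = edit(models[2], direction, alpha=0.8)
new_identity = sample_identity(models, random.Random(0))
print(direction, bearded, new_identity)
```

In a real system each edited or sampled vector would be loaded back into the diffusion model's fine-tuned layers to generate images of the resulting identity.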

CMU's Advanced NLP Course

This CMU course for Fall 2024 offers a comprehensive overview of advanced NLP concepts, including deep learning, machine translation, and language generation. It includes lectures, assignments, and resources designed for students with a foundational understanding of NLP and machine learning. Check out the course page for code and slides, and the playlist for the lecture videos.