AI Distillation #8

Meta's MovieGen, a No BS guide to LLMs, were RNNs all we needed and more...

These are some excellent resources on various AI topics that I found over the past week.

Announcement: I'll be running a workshop on GPT models with Charlie Guo, who writes the excellent Artificial Ignorance newsletter. If you're interested, sign up here.

Meta's MovieGen

Meta's MovieGen generates video and audio from simple text prompts, enabling high-definition video creation, editing, and personalized content. It can transform existing videos based on text descriptions, create personalized videos from uploaded images, and even generate matching soundtracks and sound effects, making it an all-in-one tool for creating and editing multimedia content. There's also a 92-page technical report that goes into real detail. Honestly, this is some jaw-dropping work from Meta's AI team.

EVER: Exact Volumetric Ellipsoid Rendering for Real-time View Synthesis

This paper proposes a real-time exact volume rendering technique built on ellipsoids, which outperforms splatting-based approaches like 3D Gaussian Splatting (3DGS). It produces smooth, artifact-free renderings and supports effects like defocus blur and fisheye camera distortion. On the Zip-NeRF dataset, EVER gives sharper, more accurate results for large-scale scenes while running at around 30 FPS at 720p. It's designed for scenarios that demand precise blending and visual accuracy in real-time view synthesis. Imagine how video games might look in the future with techniques like this!
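
To give a feel for what "exact" can mean here, below is a minimal sketch of volume rendering along a single ray. It assumes the density is constant inside each segment the ray crosses (an assumption for this illustration), in which case the emission-absorption integral has a closed form and needs no sampling approximation. The function name `render_ray` and the toy numbers are hypothetical, and this is not the paper's renderer, which also has to find ray-ellipsoid intersections and handle overlapping primitives.

```python
import numpy as np


def render_ray(densities, lengths, colors):
    """Integrate emission-absorption along a ray with piecewise-constant density."""
    densities = np.asarray(densities, dtype=float)
    lengths = np.asarray(lengths, dtype=float)
    colors = np.asarray(colors, dtype=float)

    # Optical depth of each segment: density times path length.
    optical_depth = densities * lengths
    # Transmittance on entering each segment (1.0 for the first one).
    entering = np.exp(-np.concatenate(([0.0], np.cumsum(optical_depth)[:-1])))
    # Fraction of light absorbed and re-emitted within each segment.
    alpha = 1.0 - np.exp(-optical_depth)
    weights = entering * alpha
    return weights @ colors, weights


# Toy ray crossing three segments (hypothetical values).
densities = [0.5, 2.0, 1.0]
lengths = [1.0, 0.5, 2.0]
colors = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pixel, weights = render_ray(densities, lengths, colors)
print(pixel, weights.sum())
```

The weights telescope, so their sum equals one minus the transmittance of the whole ray, which is a handy sanity check for any implementation.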

Deep Generative Models Course

Stanford's CS236 explores probabilistic foundations and learning algorithms for deep generative models, including VAEs, GANs, and normalizing flows. It also covers applications in fields like computer vision, NLP, reinforcement learning, graph mining, reliable machine learning, and inverse problem-solving. The course will help build a deep understanding of how generative models learn from complex data.

OSSA: Unsupervised One-Shot Style Adaptation

This paper introduces a new unsupervised method for domain adaptation in object detection using just a single target image. By adapting style statistics and applying them to a source dataset, OSSA generates diverse styles to close the domain gap. It achieves state-of-the-art results in challenging out-of-distribution scenarios, like weather or sim2real adaptations, without requiring labeled target data.
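
For intuition about "adapting style statistics", here is a minimal sketch of one common way to move style between feature maps: matching per-channel mean and standard deviation, as in adaptive instance normalization. This is an illustration under my own assumptions, not necessarily how OSSA does it, and the function names `channel_stats` and `transfer_style` are hypothetical.

```python
import numpy as np


def channel_stats(features, eps=1e-5):
    """Per-channel mean and standard deviation of a (C, H, W) feature map."""
    mean = features.mean(axis=(1, 2), keepdims=True)
    std = np.sqrt(features.var(axis=(1, 2), keepdims=True) + eps)
    return mean, std


def transfer_style(source, target, eps=1e-5):
    """Re-normalize source features so each channel matches the target's statistics."""
    src_mean, src_std = channel_stats(source, eps)
    tgt_mean, tgt_std = channel_stats(target, eps)
    return (source - src_mean) / src_std * tgt_std + tgt_mean


rng = np.random.default_rng(0)
source = rng.normal(loc=0.0, scale=1.0, size=(3, 8, 8))   # stand-in for source-domain features
target = rng.normal(loc=2.0, scale=0.5, size=(3, 8, 8))   # stand-in for the single target image
stylized = transfer_style(source, target)
print(stylized.mean(axis=(1, 2)), target.mean(axis=(1, 2)))
```

The spatial content of `source` is preserved while its channel statistics now look like the target's, which is the basic idea behind closing a domain gap at the style level.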

The Anti-hype LLM Reading List

This is a curated set of resources focused on understanding large language models without the hype. It includes foundational papers and articles covering everything from early models to state-of-the-art architectures. It provides realistic, insightful material for those working with LLMs, emphasizing practical knowledge over marketing claims.

A Survey of Low-Bit LLMs

This survey explores low-bit quantization for LLMs: the basics, systems, and algorithms used to reduce their memory and compute requirements. It covers a range of quantization techniques as well as training and inference systems, and it discusses challenges and future directions for making LLMs more efficient. If you're trying to develop LLMs for resource-constrained devices, this might be the survey to read.
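
As a quick illustration of the basic idea, here is a minimal sketch of symmetric per-tensor quantization in NumPy: floats are mapped to a small signed integer range with a single scale factor, then mapped back. It is a generic textbook scheme rather than any specific method from the survey, and the function names `quantize_symmetric` and `dequantize` are hypothetical. Real 4-bit kernels also pack two values per byte and often use per-group scales, which this sketch skips.

```python
import numpy as np


def quantize_symmetric(weights, bits=4):
    """Map float weights to signed integers using one per-tensor scale."""
    qmax = 2 ** (bits - 1) - 1                      # 7 for 4-bit, 127 for 8-bit
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Values are stored in int8 here for simplicity, even when bits < 8.
    q = np.clip(np.round(weights / scale), -qmax, qmax).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integers and the scale."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.normal(size=(4, 8)).astype(np.float32)
q, scale = quantize_symmetric(w, bits=4)
w_hat = dequantize(q, scale)
max_error = float(np.max(np.abs(w - w_hat)))
print(scale, max_error)
```

The rounding error per weight is at most half the scale, so a single large outlier inflates the error for every other weight in the tensor. That is one reason finer-grained scales are popular.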

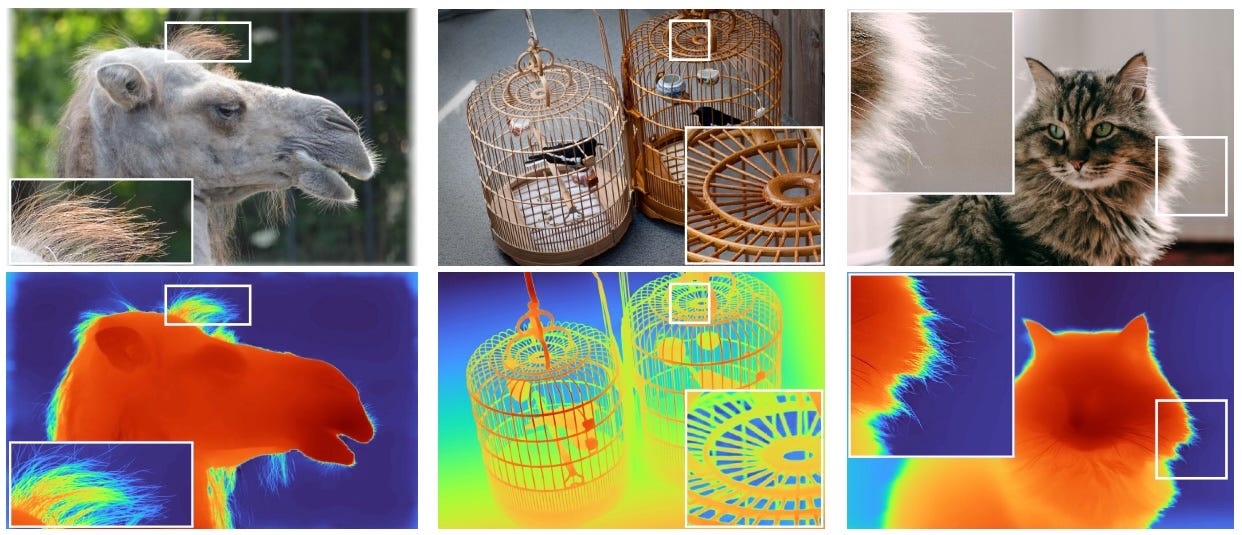

Apple's Depth Pro

Depth Pro delivers sharp, high-resolution monocular metric depth maps in under a second. It uses an efficient multi-scale vision transformer and is trained on a combination of real and synthetic datasets, which gives it high metric accuracy even around fine boundary details. The approach is fast enough for near-real-time depth estimation, producing a 2.25-megapixel depth map in just 0.3 seconds. A reference implementation of the model is available.

Were RNNs All We Needed?

This paper revisits classic RNN architectures like LSTMs and GRUs, proposing simplified versions (minLSTMs and minGRUs) that remove the need for backpropagation through time. These minimal models are fully parallelizable during training and use fewer parameters. They achieve results competitive with state-of-the-art sequence models, suggesting that old RNNs, with some tweaks, still have potential for modern applications. Were RNNs all we really needed? Read the paper to find out!
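
To see why these models can be trained in parallel, here is a minimal NumPy sketch of the minGRU recurrence, h_t = (1 - z_t) * h_{t-1} + z_t * h̃_t, where both the gate z_t and the candidate h̃_t depend only on the input x_t. Because nothing depends on the previous hidden state except through that linear update, all time steps can be computed at once. The sketch omits biases, and the closed form in `min_gru_parallel` (cumulative products and sums) is my own simplification: it is only suitable for short sequences, whereas a real implementation would use a numerically stable parallel scan. All function and variable names are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def min_gru_sequential(x, w_z, w_h, h0):
    """Reference loop over time steps."""
    z = sigmoid(x @ w_z)      # update gate, computed from the input only
    h_tilde = x @ w_h         # candidate state, computed from the input only
    h, outputs = h0, []
    for t in range(x.shape[0]):
        h = (1.0 - z[t]) * h + z[t] * h_tilde[t]
        outputs.append(h)
    return np.stack(outputs)


def min_gru_parallel(x, w_z, w_h, h0):
    """Same recurrence with no Python loop over time."""
    z = sigmoid(x @ w_z)
    a = 1.0 - z                       # how much of the old state is kept
    b = z * (x @ w_h)                 # how much new content is written
    a_cum = np.cumprod(a, axis=0)     # product of a_1..a_t for every t
    return a_cum * (h0 + np.cumsum(b / a_cum, axis=0))


rng = np.random.default_rng(0)
seq_len, d_in, d_hidden = 16, 3, 4
x = rng.normal(size=(seq_len, d_in))
w_z = rng.normal(size=(d_in, d_hidden))
w_h = rng.normal(size=(d_in, d_hidden))
h0 = np.zeros(d_hidden)

h_seq = min_gru_sequential(x, w_z, w_h, h0)
h_par = min_gru_parallel(x, w_z, w_h, h0)
print(np.allclose(h_seq, h_par))
```

In a standard GRU the gate also takes h_{t-1} as input, which is exactly what forces step-by-step computation during training.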