From Art Thief to AI Artist: The Variational Autoencoder Transformation

The journey from autoencoders to stable diffusion

Last week, we saw how plain autoencoders, while excellent for compressing data, aren't great at generating new data. That's because they're hardwired to reconstruct the input, not to produce new samples. VAEs, on the other hand, are the OG image generators. I'll be splitting the VAE into two posts. This week’s post will cover the intuition with some results to whet your appetite. The next one will cover the math, code, and tricks the VAE uses to generate new data. Let's get started!

This Week on Gradient Ascent:

The artistic autoencoder 🎨

[Consider reading] Segment anything? 🗺️

[Check out] How you can train your own large models 🏃

[Learn from] A recipe for training good generative models 🧑‍🍳

The Canvas Conspiracy: The Artist's Guide to Variational Autoencoders

Once upon a time, a budding artist named Vincent lived in a quiet little town called Latentia. Latentia was renowned for its creativity. Many extraordinary artists had apprenticed here before moving to the big city to build stellar careers in art.

Vincent came from a long line of art forgers. His father, Arne Englebert, was a master at forgery. He could replicate paintings flawlessly down to the tiniest detail. But that was all he could do. Arne trained his son in art from a young age, and soon, he, too, could recreate any painting blindfolded. But unlike his father, Vincent Arne Englebert wasn't happy with forging existing art. He wanted more.

The Apprentice: Building a Master's Vocabulary

Vincent diligently studied the works of artistic geniuses. Soon, he started understanding the essence of great works of art. He absorbed their techniques, their choice of colors, brush strokes, lines, and composition. Finally, he learned the underlying patterns and distribution of styles that made their art unique.

The Artistic Breakthrough: The True Essence of Art

As Vincent honed his craft, he realized that just learning the great masters' styles would make him yet another accomplished forger. To truly master the essence of art, he needed to express his creative voice. A delicate balance between the two was vital. Whenever he painted, he would first follow the style of the great masters, using the fundamental principles he had learned through his studies. Then, he would add a touch of randomness – a subtle twist to the composition, an unexpected stroke of color, or a slight alteration in perspective – to make his creations truly unique. By infusing the framework of past legends with his own creative expression, he became a true creator: an artist.

The Legacy: Vincent's Impact on the Art World

Vincent's relentless pursuit of artistic mastery eventually bore fruit. His newfound ability to create stunning, original pieces that captured the essence of the great masters left the art world in awe. His innovative style and technique became the talk of the town, and soon, Vincent's masterpieces were displayed in prestigious galleries and museums.

Deconstructing Vincent, the VAE

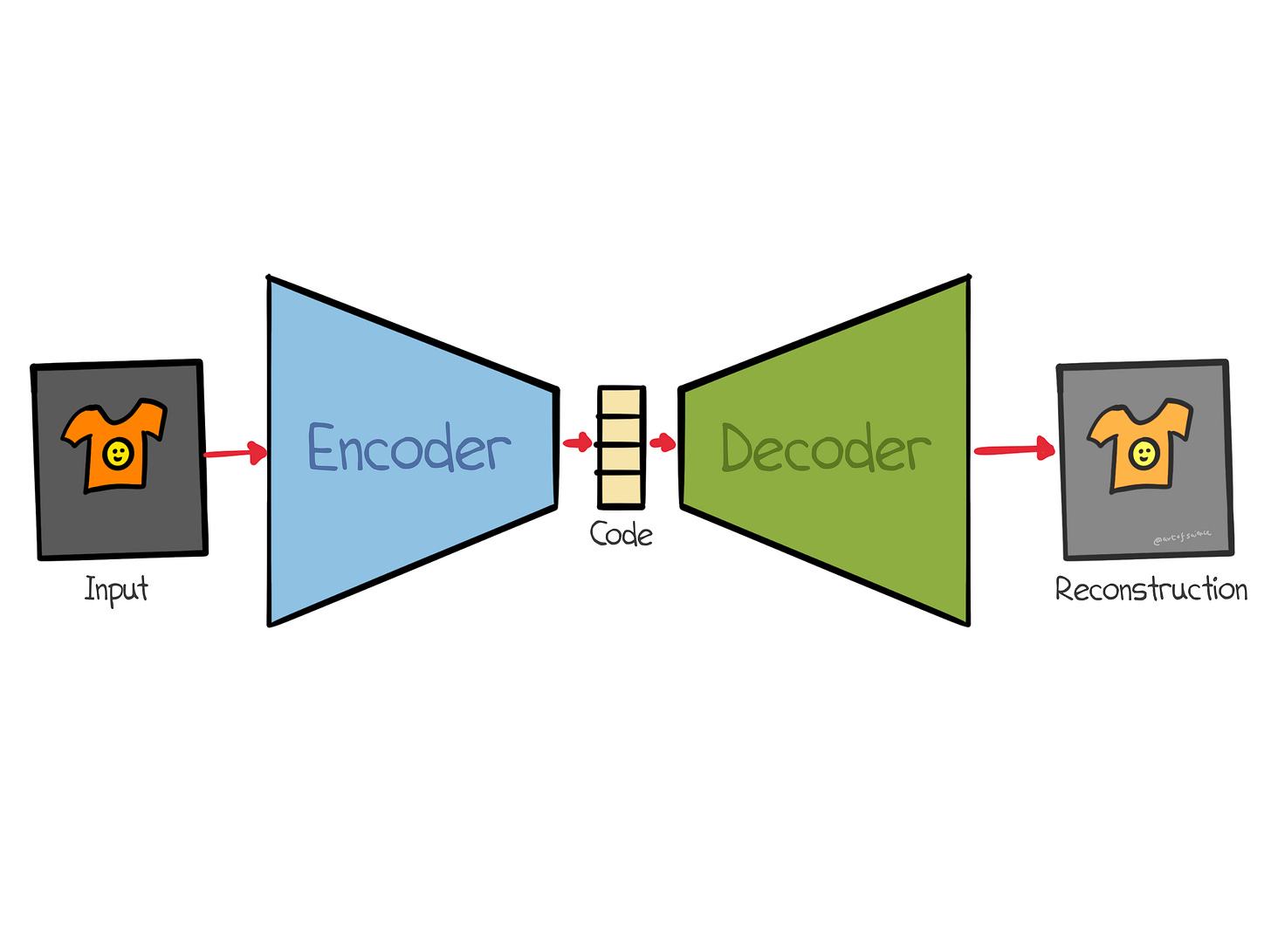

In case you haven't already made the connection¹, Vincent's journey is very similar to that of a Variational Autoencoder (VAE). A VAE also consists of an encoder and decoder like a regular autoencoder. However, unlike traditional autoencoders (like Vincent's dad), which can only learn to recreate input data, VAEs are designed to model the underlying probability distribution of the data. This allows them to generate new, original samples.

What does this mean? Instead of learning a deterministic mapping between the input data and the latent space, as traditional autoencoders do, VAEs learn to represent the data as a distribution. This enables them to generate new, realistic samples from this distribution.

In VAEs, the encoder network maps the input data to two vectors: the mean (µ) and the standard deviation (σ). These vectors parameterize a Gaussian distribution in the latent space.

To generate new data points, a latent vector is sampled from this distribution and passed through the decoder network. By learning the parameters of the distribution in the latent space, the VAE effectively captures the underlying probability distribution of the input data.
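To make the sampling step concrete, here is a minimal NumPy sketch. The function name `sample_latent`, the two-dimensional latent space, and the example values for the mean and standard deviation are all illustrative assumptions, not the code behind the model in this post. It draws a latent vector as the mean plus the standard deviation times standard normal noise, which is one common way to sample from a diagonal Gaussian.

```python
import numpy as np


def sample_latent(mu, sigma, rng):
    """Draw z ~ N(mu, diag(sigma^2)) as mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + sigma * eps


rng = np.random.default_rng(0)

# Hypothetical encoder outputs for a single image (assumed values).
mu = np.array([0.5, -1.0])
sigma = np.array([0.1, 0.3])

# Drawing many samples shows they scatter around mu with spread sigma.
samples = np.stack([sample_latent(mu, sigma, rng) for _ in range(10_000)])
print(samples.mean(axis=0))  # close to mu
print(samples.std(axis=0))   # close to sigma
```

In a full model, each sampled vector would be handed to the decoder, which turns it into an image.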

Traditional autoencoders aim to minimize only the reconstruction error, forcing them to replicate the input data faithfully. Because they do not explicitly model the probability distribution of the data, they are poorly suited to generating new data points.

VAEs have a two-part loss function. Vincent learning to balance the fundamentals with his creative expression is akin to this.

The first part, the reconstruction loss, ensures that the generated samples resemble the original data. The second part, called the KL (Kullback-Leibler) divergence, ensures that the learned distribution stays close to a standard Gaussian distribution.
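As a rough illustration of the two parts, here is a small NumPy sketch. It makes several assumptions that the post does not specify: squared error as the reconstruction loss (binary cross-entropy is another common choice), a diagonal Gaussian over the latent space, and equal weighting of the two terms. The function names and example values are hypothetical.

```python
import numpy as np


def reconstruction_loss(x, x_hat):
    """Sum of squared errors between the input and its reconstruction (assumed choice)."""
    return float(np.sum((x - x_hat) ** 2))


def kl_to_standard_normal(mu, sigma):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) )."""
    return float(0.5 * np.sum(mu**2 + sigma**2 - 1.0 - 2.0 * np.log(sigma)))


def vae_loss(x, x_hat, mu, sigma):
    """Two-part loss: reconstruction term plus KL term, weighted equally (assumed)."""
    return reconstruction_loss(x, x_hat) + kl_to_standard_normal(mu, sigma)


# Toy values standing in for a flattened image, its reconstruction,
# and the encoder's outputs.
x = np.array([0.0, 1.0, 0.5])
x_hat = np.array([0.1, 0.8, 0.5])
mu = np.array([1.0, 0.0])
sigma = np.array([1.0, 1.0])

print(reconstruction_loss(x, x_hat))
print(kl_to_standard_normal(mu, sigma))
print(vae_loss(x, x_hat, mu, sigma))
```

The KL term is zero when the encoder outputs exactly a standard Gaussian and grows as the mean or standard deviation drifts away from it.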

This dual nature of the VAE loss function can be considered Vincent's artistic compass. The reconstruction loss represents his ability to understand and reproduce the techniques of the great masters. In contrast, the KL divergence represents the creative freedom that allows him to generate entirely new and captivating pieces of art.

Below is a VAE I trained on the Fashion MNIST dataset. Here is how it reconstructed some randomly chosen images. As you can see, it's pretty good but not perfect. But, as with everything in real life, that's ok!

What is the KL divergence all about? This component of the loss function helps with several things.

First up, it encourages a structured latent space. A VAE can only produce new samples as good as the latent representation it learns. The KL divergence term measures the difference between the learned latent distribution and the target distribution (here, a standard Gaussian). By minimizing the KL divergence, VAEs are encouraged to create a well-structured latent space with smooth transitions between data points. This allows for a more effective generation of new samples.
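For readers who like to see it written down: assuming the encoder describes a diagonal Gaussian with mean µ and standard deviation σ over d latent dimensions (d and the index j are notation introduced here, not taken from the post), the KL divergence to a standard Gaussian has a well-known closed form:

$$
D_{\mathrm{KL}}\big(\mathcal{N}(\mu, \operatorname{diag}(\sigma^2)) \,\|\, \mathcal{N}(0, I)\big) = \frac{1}{2} \sum_{j=1}^{d} \left( \mu_j^2 + \sigma_j^2 - 1 - \ln \sigma_j^2 \right)
$$

Each term in the sum is zero only when µ_j = 0 and σ_j = 1, so the penalty grows as any latent dimension moves away from the standard Gaussian.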

Here is an interpolation result from the VAE I trained. I made it moonwalk from an image of a pant to a boot. Notice how smoothly it traverses the latent space. The result even changes to a sandal before becoming a boot.
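One simple way to produce a walk like this is to interpolate linearly between the latent codes of the two images and decode each intermediate point. The sketch below shows only the interpolation step. It assumes linear interpolation and a two-dimensional latent space, and the latent codes are made-up values; the post does not say exactly how the result above was generated.

```python
import numpy as np


def interpolate_latents(z_start, z_end, num_steps):
    """Return num_steps latent vectors evenly spaced on the line from z_start to z_end."""
    weights = np.linspace(0.0, 1.0, num_steps)[:, None]
    return (1.0 - weights) * z_start + weights * z_end


# Hypothetical latent codes for the starting and ending images.
z_pants = np.array([-2.0, 0.5])
z_boot = np.array([1.0, -1.5])

path = interpolate_latents(z_pants, z_boot, num_steps=5)
print(path)  # one latent vector per row, from z_pants to z_boot
```

Feeding each row of `path` to a trained decoder would give one frame of the transition.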

Second, it helps solve the overfitting problem. Traditional autoencoders are prone to overfitting because they can learn to simply copy the input data to the output without extracting meaningful features. By introducing the KL loss in the VAE loss function, VAEs are encouraged to extract the most essential features from the input data, which helps prevent overfitting and leads to better generalization.

Third, the KL divergence acts as a constraint, ensuring that the latent space does not become overly complex and forcing the model to capture only the most important features of the input data. Look back at the VAE reconstruction image above. Look at the penultimate result. Can you see the ghost of a pant scaring the sandal? The VAE was prevented from memorizing the input thanks to the KL divergence term I used.

The story of Vincent reminds us of the endless possibilities that arise when we push the boundaries of creativity and innovation. I hope this got you excited about VAEs. In the next edition, we'll review the code, the math, and all the seemingly gnarly stuff that makes VAEs tick.

Resources To Consider:

Meta's New Segment Anything!

Paper: https://bit.ly/43etRtr

Code: https://github.com/facebookresearch/segment-anything

Given the rapid progress in large language models, it can be easy to forget that computer vision still needs to be solved. Meta's new Segment Anything Model (SAM) takes a big step towards solving it. SAM has been trained on 11 million images and 1.1 billion masks. As the name suggests, it can segment anything and more! I'll be covering this work down the line, but for now, check out the code and paper.

Practical Guide for Training Large Models

Link: https://wandb.ai/craiyon/report/reports/Recipe-Training-Large-Models--VmlldzozNjc4MzQz

Boris Dayma trained a miniature version of DALL-E (called DALL-E mini / Craiyon) last year and has captured all his learnings in this excellent guide. Definitely check it out!

A Recipe for Training Good Generative Models

Link: https://datasciencecastnet.home.blog/2023/04/06/a-recipe-for-training-good-generative-models/

Jonathan Whitaker is an expert at finding tricks to produce amazing generative images. He's put together a recipe for training excellent generative models. I'd highly recommend reading it. It's short but packed with information.

¹ I blame my storytelling ability, not your patience :D
