This week's edition is brought to you by Squeez Growth

Happy New Year!

A famous saying goes, "There are decades where nothing happens, and there are weeks where decades happen." This past year feels like the latter.

So before we jump headfirst into 2024, here's a curated list of resources you can use to catch up on most of the important action from 2023. I'll be back with tutorials, deep dives, and more starting next week.

5 Research Papers Worth Grokking

Dozens of excellent papers were published last year, so it’s hard to pick the “best”. This list is subjective, but I've included the research I found fresh and exciting. I've also tried to balance Computer Vision and NLP, which was hard, given that I restricted myself to just five papers.

Segment Anything Model (SAM)

The Segment Anything Model (SAM) from Meta is designed for prompt-based operation, enabling it to adapt zero-shot to new image distributions and tasks. SAM's architecture supports flexible prompts and real-time mask computation, which is crucial for interactive use. To train SAM, the authors created SA-1B, the largest segmentation dataset of its kind, with over 1 billion masks from 11 million images. SAM is excellent at generating the high-quality masks needed in diverse applications such as medical imaging, robotics, and AR.

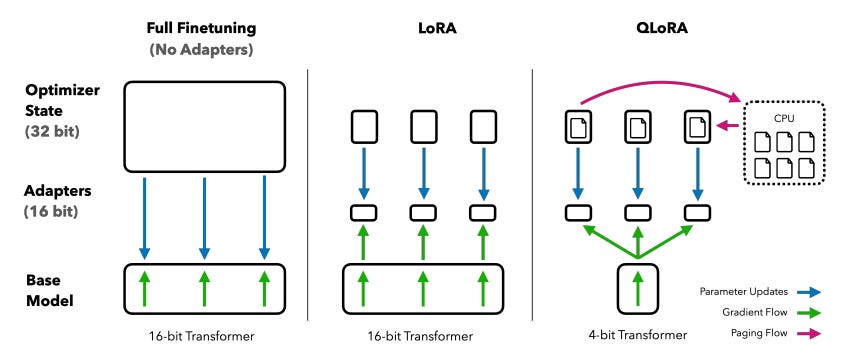

QLoRA

QLoRA (Quantized Low-Rank Adapters) is a finetuning approach for large language models (LLMs) that significantly reduces memory usage, enabling the finetuning of a 65B-parameter model on a single 48GB GPU while preserving full 16-bit finetuning task performance. Low-Rank Adapters (LoRA) allow efficient finetuning of LLMs by training small low-rank weight matrices instead of the full weights. QLoRA goes a step further: it quantizes the frozen pretrained model to 4 bits and backpropagates gradients through it into the LoRA adapters. It does this using a new data type called 4-bit NormalFloat, along with double quantization and paged optimizers, allowing you to finetune larger models with much less memory.
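To make the savings concrete, here is a rough back-of-the-envelope sketch in Python. It only counts trainable parameters for a single weight matrix and the raw storage needed for model weights. The layer size and rank are hypothetical values picked for illustration, and the memory estimate ignores activations, optimizer state, and quantization constants, so treat it as intuition rather than a reproduction of the paper's numbers.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when updating the full weight matrix W (d_out x d_in)."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a low-rank update W + B @ A,
    where B is (d_out x rank) and A is (rank x d_in)."""
    return d_out * rank + rank * d_in


def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Memory needed to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Hypothetical layer size and rank, chosen only for illustration.
d_out, d_in, rank = 8192, 8192, 16
full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
print(f"full: {full:,} trainable params, LoRA: {lora:,} ({lora / full:.2%})")

# Weight storage for a 65B-parameter model at 16-bit vs 4-bit precision.
print(f"16-bit: {weight_memory_gb(65e9, 16):.1f} GB")
print(f" 4-bit: {weight_memory_gb(65e9, 4):.1f} GB")
```

Even before counting gradients and optimizer state, 16-bit weights for a 65B model are far larger than a 48GB GPU can hold, while 4-bit weights leave room to spare. That gap is what makes the single-GPU result plausible.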

LIMA

LIMA explores the effectiveness of fine-tuning large language models (LLMs) with a small set of high-quality training data. The authors train a 65B-parameter LLaMA language model named LIMA on just 1,000 curated prompts and responses, demonstrating that it performs remarkably well, even compared to models trained with extensive reinforcement learning and larger datasets.

I wrote about LIMA in depth here: https://open.substack.com/pub/artofsaience/p/the-tiny-giant-thats-shaking-up-ai

Drag your GAN

In this paper, the authors explore a powerful way to control GANs. A user can precisely deform an image by simply "dragging" points on it, manipulating shape, pose, expression, and layout with ease. DragGAN accomplishes this via two main components: feature-based motion supervision that drives handle points toward target positions, and a new point-tracking method that leverages the GAN's features to localize the handle points.
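Here is a minimal sketch of the point-tracking idea, as I understand it: after each edit step, look in a small window around the handle point for the location whose feature vector is closest to the handle's original feature. The toy feature map, the square search window, and the L1 distance are my assumptions for illustration. The real method runs on the generator's intermediate feature maps, and the function names below are hypothetical.

```python
from typing import List, Sequence, Tuple

# Feature map indexed as features[row][col] -> feature vector.
FeatureMap = List[List[Sequence[float]]]


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of absolute differences between two feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def track_point(
    features: FeatureMap,
    ref_feature: Sequence[float],
    point: Tuple[int, int],
    radius: int,
) -> Tuple[int, int]:
    """Return the location within `radius` of `point` whose feature
    is nearest (in L1 distance) to the handle's reference feature."""
    height, width = len(features), len(features[0])
    row0, col0 = point
    best, best_dist = point, float("inf")
    for r in range(max(0, row0 - radius), min(height, row0 + radius + 1)):
        for c in range(max(0, col0 - radius), min(width, col0 + radius + 1)):
            dist = l1_distance(features[r][c], ref_feature)
            if dist < best_dist:
                best, best_dist = (r, c), dist
    return best


# Toy 5x5 feature map: all zeros except one distinctive feature at (2, 3).
toy = [[[0.0, 0.0] for _ in range(5)] for _ in range(5)]
toy[2][3] = [1.0, 1.0]

# The handle was last seen at (2, 2); its reference feature is close to [1, 1].
new_point = track_point(toy, ref_feature=[1.0, 0.9], point=(2, 2), radius=1)
print(new_point)
```

In the toy example the handle "moves" one pixel to the right, because that is where the feature most similar to the reference now lives.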

BloombergGPT

BloombergGPT is a 50-billion-parameter language model specifically designed for the financial domain. It is trained on a unique dataset combining Bloomberg's extensive financial data with general-purpose datasets, creating a corpus of over 700 billion tokens. BloombergGPT demonstrates superior performance on financial tasks while maintaining competitive results on general language model benchmarks. This mixed-dataset training approach differentiates it from previous models trained exclusively on either domain-specific or general data sources. The paper also discusses the model's architecture, tokenization process, and the challenges of building such a model. I love that the authors shared their experiences and learnings from building this model. Here's a companion video worth watching as you read the paper.

4 Articles Worth Reading:

Many excellent AI practitioners share their knowledge here on Substack. I've chosen four of my absolute favorite articles from the past year. I highly recommend reading these for an in-depth understanding of various topics.

Basics of Reinforcement Learning for LLMs

This article provides an in-depth overview of reinforcement learning (RL) and its application in training large language models (LLMs). Despite the effectiveness of RL, particularly reinforcement learning from human feedback (RLHF), many AI researchers tend to focus on supervised learning strategies due to familiarity and ease of use. The article demystifies RL by covering basic concepts, approaches, and modern algorithms for fine-tuning LLMs with RLHF.

Practical Tips for Finetuning LLMs using LoRA

In this article, the author offers valuable insights into the efficient training of custom LLMs using LoRA. He covers a lot of ground, from consistency in training outcomes to the feasibility of fine-tuning models on limited-memory GPUs. What I love about this article is that it comes from first-hand experiments. Bookmark this article and return to it when finetuning LLMs with adapters.

State-Space LLMs: Do we need Attention?

This article explores the potential of non-attention architectures in language modeling, particularly models like Mamba and StripedHyena. These models challenge the traditional attention-based approaches like those in Transformer models, offering a different way to handle long-context sequences through Recurrent Neural Networks (RNNs) and state-space models (SSMs). The research community is actively trying to overcome the quadratic complexity of attention, so reading this article gives you a good grounding in the alternatives as well as their strengths and weaknesses.

Your Data is Not Real- A Case for Complex Valued NNs

This article discusses the potential and challenges of Complex Valued Neural Networks (CVNNs). CVNNs are advantageous for processing phasic data and can empower fields like signal communications, healthcare, deep fake detection, and acoustic analysis. They offer improved performance, stability, and expressiveness over Real-Valued Neural Networks (RVNNs). However, CVNNs aren't perfect and have a few limitations. This is a lovely read into the little-known world of complex-valued networks. Side note: Devansh has a knack for writing about topics that aren't in the spotlight.

3 Predictions for 2024:

While no one can truly predict the future, these are three of my predictions for 2024.

1. Developer Productivity on the rise:

As more powerful multimodal agents emerge, we'll see a massive spike in developer productivity. Engineers will be able to get more done, whether it's writing and shipping code or creating presentations and proposals. Prototyping designs will become at least 2-3x faster as these models can map vague design docs and diagrams into MVPs. I think this will extend to other knowledge-work professions as well.

2. AI in your pocket:

As LLMs become larger and training and deploying them becomes more resource-intensive, Small Language Models (SLMs) will take center stage. We'll see a proliferation of SLMs small enough to run on mobile devices, allowing you to leverage the power of AI entirely on-device. By the end of the year, we might see an SLM that's almost as capable as GPT-4 run natively on our phones.

3. AI Percolation and Collaboration:

We're going to see AI-first solutions emerge in diverse fields like cinema, retail, physics, medicine, and others. We'll see interdisciplinary collaboration and new skill sets emerge for individuals working in the intersection of these fields. I'm willing to bet that we'll see a Hollywood-grade movie use AI for 90% of the SFX by the end of the year.

2 Videos Worth Watching:

A Hacker's Guide to Language Models

In this video, Jeremy Howard, the co-founder of fast.ai, covers everything you need to know about language models. Jeremy co-invented ULMFiT, the transfer learning approach for LLMs that we take for granted today. This is a must-watch for any practitioner interested in language models.

Let's Build GPT from Scratch

I don't need to introduce Andrej Karpathy, but it's safe to say that this is one of the best videos on building a language model like GPT. Through this process, you'll learn the model's internals and make connections between theory and practice.

1 Course Worth Taking:

You might be considering a number of courses to learn AI. In my opinion, the fast.ai course is the only course you need to start with. While it's just under two years old, it will give you the foundations to learn and grow as a practitioner. Check out the course here: https://course.fast.ai/

These are my opinions and views and don't represent those of my employer.
