Hang Ten on the AI Wave - Gearing up for 2023

Your guide to staying afloat during an innovation tsunami

Happy New Year! 2022 was a whirlwind, and I hope your 2023 has started well. I wish you all success and happiness in the year ahead. Before we dive into this week’s content, a short update: going forward, you’ll find each new edition in your inbox on Thursday (or Friday, depending on where you’re based). This schedule makes it easier for me to write and edit around my work and a little human who competes for my attention. Alright, let’s dive in.

This Week on Gradient Ascent:

Keeping up with the AI Tsunami 🌊

[Consider reading] LLMs as corporate lobbyists 🧑‍⚖️

[Consider reading] New AI model plays doctor 🧑‍⚕️

[Check out] A blazing-fast image generator 🎨

[Must read] How Copilot works 👮

[Definitely use] Code your own GPT 🧑‍💻

Hang Ten on the AI Wave - Tips for riding the crest of innovation in 2023:

Perched safely on jagged cliffs, you stare at an endless expanse of blue. The wind howls in your ears, and the freezing air is thick with the smell of salt and kelp. As you dream of a cup of hot cocoa teeming with spongy s'mores, a loud crash jolts you from your reverie. A wall of water, about the height of a mid-sized apartment building, has come crashing down. Just moments before, a speck of yellow stood delicately balanced atop this shapeshifting behemoth. That yellow blip now appears to have vanished into the bottomless labyrinth below.

You search frantically, squinting for the tiny human and fearing for his safety. A few breathless minutes later, the crowd roars with appreciation as the surfer emerges victorious from the depths. How on earth did he do that? And why does he put himself through it?

Big-wave surfing is no joke. Watching the pros, I often wonder whether they have a secret technique or a sixth sense for dominating the tempest. Turns out they don't. They are just masters at "reading" waves.

The ability to read waves is what separates the masters from the amateurs. Does that mean they're born with this gift? Heck, no!

Amateurs call it genius. Masters call it practice. ~ Thierry Henry

It took these masters years of practice to get to where they are. But what if we don't have the luxury of time?

We practitioners deal with the biggest wave of them all: AI. Change comes thick and fast. Yesterday's cutting edge is today's fossil. It's easy to feel left behind, and easy to be swept away by the current, drowning in a sea of never-ending innovation.

You're not alone. We're all in the same metaphorical boat (well, surfboard). Anyone who says otherwise is either lying or has built a ChatGPT bot that constantly ingests new research and keeps them updated daily.

So grab your sunscreen and your board. Let's paddle out together and figure out how to ride the crest of the AI wave. Here are a few practices that can help you master "reading" it.

Filtering the firehose

The challenge many of us face is simply keeping up with new developments. The volume and velocity of new research induce so much FOMO that it's easy to stop and ask, "Why should I even bother?"

But if you spend time curating and filtering the sources in your information diet, keeping up with what matters to you becomes much more manageable. There are two simple ways to do this:

Find researchers and practitioners who share relevant work on social media. If you simply follow them, their posts can get drowned out in your news feed, so I've found that creating a list works much better. For example, here's a Twitter list I created to follow accounts that share interesting work:

https://twitter.com/i/lists/1558577673160388608

Subscribe to a few good newsletters. Newsletters are increasingly relevant these days, and they come in explanatory, curation, or hybrid formats. A few good ones can go a long way toward improving your content diet because they do the curation for you. The limitation of this approach? They do the curation for you. Choose wisely.
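If you'd like to automate part of this filtering, a few lines of code can score incoming paper titles against the topics you care about. This is a minimal sketch under stated assumptions: the `INTERESTS` weights, the threshold, and the sample titles are all hypothetical, and you'd feed it titles from whatever feed or listing you already follow.

```python
import re

# Hypothetical interest profile: keyword prefix -> weight. Tune to your own focus.
INTERESTS = {
    "diffusion": 2.0,
    "transformer": 1.5,
    "medical": 1.0,
    "efficient": 1.0,
}


def relevance(title: str, interests: dict[str, float]) -> float:
    """Sum the weights of keywords that start any word in the title."""
    words = re.findall(r"[a-z]+", title.lower())
    return sum(
        weight
        for keyword, weight in interests.items()
        if any(word.startswith(keyword) for word in words)
    )


def filter_feed(titles: list[str], interests: dict[str, float],
                threshold: float = 1.5) -> list[str]:
    """Keep titles scoring at or above the threshold, highest score first."""
    scored = [(relevance(title, interests), title) for title in titles]
    kept = [pair for pair in scored if pair[0] >= threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [title for _, title in kept]


# Hypothetical titles for illustration.
titles = [
    "A Survey of Graph Databases",
    "Scaling Transformers for Medical Question Answering",
    "Efficient Diffusion Sampling at Scale",
    "Transformers in Robotics",
]
for title in filter_feed(titles, INTERESTS):
    print(title)
```

Keyword matching misses synonyms, but it's transparent and easy to tune, which matters more than cleverness for a personal filter.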

Lean learning

The word on the street is that you have to keep learning and expanding your skills. That's much easier said than done. We live in an age of abundance, with too many courses, books, and tutorials on every topic. If you're not careful, you can find yourself stretched too thin. This was my story in 2018. With the growing popularity of deep learning, new courses and contests were sprouting up almost every other day. Completely disregarding the facts that a day has only 24 hours and that I had a full-time job and family duties, I signed up for far too many. The result? I had my fingers in too many pies and was getting nowhere.

Here's what worked for me. I created a list of three questions for anything I wanted to learn in machine learning:

Is it relevant to what I'm working on? If so, is it better than what I have already?

Do I have the time for this now?

Will it get me to where I want to go more efficiently?

You'll be surprised how many of your "amazing" resources vanish because you can't find a justifiable answer to at least one of these questions. The same applies to research papers. There were 18,400 papers on vision transformers published in 2022. Think you can read them all?

If a learning resource passes all three questions, commit to it earnestly. When you finish, reflect on whether it was beneficial and what was missing. That'll help you find the next starting point for your learning.

The key takeaway here is to ignore trends (I know it's really hard) and focus on what's relevant for you.

Building and Collaborating

I'm a huge proponent of learning by doing. So if you find a cool application that uses, for example, the latest and greatest language model, try to build a scaled-down version of it yourself. Replicating research at full scale is very hard these days: some of these results take days on giant server farms, and most of us don't have that luxury. A smaller version still teaches you how the pieces fit together.

Hackathons and online communities are great venues to find like-minded people. You'll learn from each other and, better yet, have companions on what can feel like a lonely struggle. I've been amazed at how much I've learnt from others simply through osmosis. Kaggle, and its discussion forum in particular, is fantastic for this. I've also had luck with Slack communities (TWIML), Discord servers (Hugging Face), and course forums (fast.ai). The biggest advantage of going down this route is that you discover new research as you need it.

Focus on what you need, ignore the rest.

Broken crystal ball

It's easy to get caught up in hype and trends, but remember that no one can predict the future. Don't believe me? When did you last see a headline-grabbing GAN paper? Not long ago, GANs dominated image generation. Today, diffusion models have largely taken their place.

In 1966, researchers at MIT thought computer vision could be "solved" in a couple of months and wrote a proposal to tackle it as a summer project with a few interns. More than 50 years later, we're finally closer to that goal than ever before.

"There are decades where nothing happens, and there are weeks where decades happen." ~ Lenin

Just as you can't surf every wave in the ocean, you can't read and absorb every new piece of research out there. Practice reading the waves. Take some risks and catch a big one or two. Even if one wipes you out, there's always another right behind it that you can ride. Focus on what matters to you and enjoy the ride!

Resources To Consider:

If LLMs were lobbyists…

Paper: https://arxiv.org/abs/2301.01181

Code: https://github.com/JohnNay/llm-lobbyist

Language models are increasingly being put to interesting uses. This researcher set out to see how good GPT (text-davinci-003) is as a corporate lobbyist. Specifically, he used the model to judge whether proposed U.S. Congressional bills were relevant to specific public companies. It turns out the model outperforms a simple baseline that always predicts the most common outcome: irrelevance. Also interesting: the previous version of the model (text-davinci-002) performed worse than that baseline, suggesting that as a model's language understanding improves, so might its ability to perform these kinds of tasks.
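To make the baseline comparison concrete: if most bills are irrelevant to a given company, a "model" that always answers NO already scores well, so a useful model has to beat that number. The sketch below shows a hypothetical prompt template and how you might score answers against the majority-class baseline. The prompt wording, the helper names, and the toy labels are illustrative assumptions, not the paper's actual prompt or data.

```python
from collections import Counter


def build_prompt(bill_title: str, bill_summary: str, company_description: str) -> str:
    """Hypothetical prompt asking an LLM whether a bill is relevant to a company."""
    return (
        f"Company description: {company_description}\n"
        f"Bill title: {bill_title}\n"
        f"Bill summary: {bill_summary}\n"
        "Is this bill relevant to the company? Answer YES or NO."
    )


def parse_answer(completion: str) -> bool:
    """Treat a completion that starts with YES (any case) as 'relevant'."""
    return completion.strip().upper().startswith("YES")


def accuracy(predictions: list[bool], labels: list[bool]) -> float:
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def majority_baseline(labels: list[bool]) -> float:
    """Accuracy of always predicting the most common label."""
    _, count = Counter(labels).most_common(1)[0]
    return count / len(labels)


# Toy data: 7 of 10 bills are irrelevant (False), so "always NO" scores 70%.
labels = [False] * 7 + [True] * 3
completions = ["NO"] * 6 + ["YES", "YES, it affects", "yes", "NO"]
predictions = [parse_answer(c) for c in completions]

print(build_prompt("Example Act", "A hypothetical bill summary.", "A hypothetical company."))
print(majority_baseline(labels))      # 0.7
print(accuracy(predictions, labels))  # 0.8
```

In this toy run the "model" beats the baseline by getting some relevant bills right despite one false alarm; a model scoring below 0.7 here would be worse than always saying NO.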

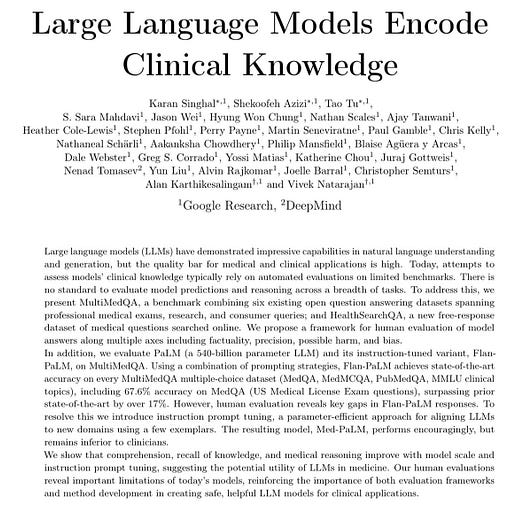

Google & DeepMind see if AI can play doctor

Paper: https://arxiv.org/abs/2212.13138

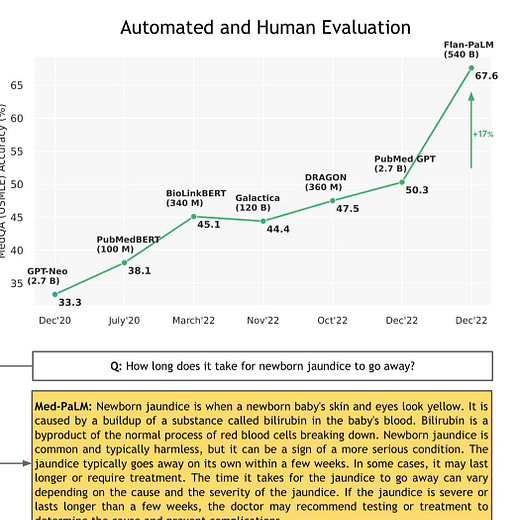

Google and DeepMind have created a new benchmark called MultiMedQA. It combines six existing open question answering datasets spanning professional medical exams, research, and consumer queries with a new dataset of commonly searched health questions, HealthSearchQA. They also propose a framework that humans can use to evaluate a language model's answers along axes such as factuality, precision, possible harm, and bias.

They evaluated Flan-PaLM, the instruction-tuned variant of PaLM (a 540-billion-parameter LLM), on this benchmark and found that it achieved state-of-the-art results. However, human evaluation using their proposed framework revealed important gaps in its answers. To address this, they created a new variant, Med-PaLM, using a parameter-efficient approach called instruction prompt tuning. Med-PaLM performed much better but is still inferior to clinicians.
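Instruction prompt tuning keeps the LLM frozen and learns only a small "soft prompt": a handful of embedding vectors prepended to every input. The sketch below illustrates the idea and the parameter arithmetic in plain Python. The prompt length of 100 and the embedding width of 18,432 are illustrative values assumed for a PaLM-540B-sized model; check the paper for its exact configuration.

```python
def prepend_soft_prompt(soft_prompt: list[list[float]],
                        token_embeddings: list[list[float]]) -> list[list[float]]:
    """Model input = learned soft-prompt vectors followed by the frozen token embeddings."""
    width = len(soft_prompt[0])
    if any(len(vec) != width for vec in soft_prompt + token_embeddings):
        raise ValueError("all vectors must share the same embedding width")
    return soft_prompt + token_embeddings


def trainable_fraction(prompt_length: int, embed_dim: int,
                       total_params: float) -> tuple[int, float]:
    """Only the soft prompt is trained: prompt_length * embed_dim parameters."""
    trainable = prompt_length * embed_dim
    return trainable, trainable / total_params


# Toy example: 2 soft-prompt vectors and 3 token embeddings, each of width 4.
soft = [[0.1] * 4 for _ in range(2)]
tokens = [[1.0] * 4 for _ in range(3)]
model_input = prepend_soft_prompt(soft, tokens)
print(len(model_input))  # 5 vectors are fed to the frozen LLM

# Assumed, illustrative sizes for a 540B-parameter model.
count, frac = trainable_fraction(prompt_length=100, embed_dim=18_432, total_params=540e9)
print(f"{count:,} trainable parameters ({frac:.6%} of the model)")
```

Under these assumed sizes, fewer than two million parameters are trained while the 540 billion in the base model stay untouched, which is what makes the approach "parameter-efficient".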

The new sheriff in generative town

Project page: https://muse-model.github.io/

There's a new text-to-image model in town: Muse. Rather than being a diffusion model, it's a Transformer that generates discrete image tokens, and it achieves state-of-the-art results while being far more efficient (2-10x faster than prior work!). It's worth checking out because slow sampling is currently a bottleneck for existing generative models, and Muse could speed up image generation significantly.
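Much of Muse's speed comes from parallel decoding: rather than generating image tokens one at a time like an autoregressive model, it starts from a fully masked grid of tokens and fills in many of them per step. The sketch below computes a cosine masking schedule of the kind used by MaskGIT, the approach Muse builds on. The 256-token grid and 12 steps are illustrative assumptions, not Muse's published settings.

```python
import math


def cosine_mask_schedule(num_tokens: int, num_steps: int) -> list[int]:
    """How many tokens remain masked after each of num_steps decoding steps."""
    remaining = []
    for step in range(1, num_steps + 1):
        mask_ratio = math.cos(math.pi / 2 * step / num_steps)  # falls from ~1 to 0
        remaining.append(math.floor(num_tokens * mask_ratio))
    return remaining


def tokens_revealed_per_step(num_tokens: int, num_steps: int) -> list[int]:
    """Number of tokens unmasked (predicted and kept) at each step."""
    remaining = cosine_mask_schedule(num_tokens, num_steps)
    previous = [num_tokens] + remaining[:-1]
    return [before - after for before, after in zip(previous, remaining)]


revealed = tokens_revealed_per_step(num_tokens=256, num_steps=12)
print(revealed)       # few tokens early, many late
print(sum(revealed))  # 256: the whole grid is decoded in 12 steps, not 256
```

The cosine shape reveals only a few tokens while the image is mostly masked and the model has little context, then speeds up as more of the image is fixed.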

How Copilot works

GitHub Copilot makes coding easier by generating code from prompts (partially written documentation or code). This excellent blog post dives deep into how Copilot works. It's a must-read to appreciate how much work goes into building a system like this.
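One ingredient described in reverse-engineering write-ups of Copilot is prompt assembly: besides the code before the cursor, the client looks for similar snippets in other open files and includes the best match. The sketch below illustrates that general idea by ranking sliding windows of another file with Jaccard similarity over identifier tokens. The window size, helper names, file path, and prompt layout are all hypothetical; treat this as an illustration of the technique, not Copilot's actual implementation.

```python
import re


def tokens(text: str) -> set[str]:
    """Identifier-like tokens in a piece of code."""
    return set(re.findall(r"[A-Za-z_][A-Za-z0-9_]*", text))


def jaccard(a: set[str], b: set[str]) -> float:
    """|A intersect B| / |A union B|, defined as 0.0 when both sets are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def best_snippet(cursor_context: str, other_file: str, window: int = 3) -> str:
    """Slide a `window`-line window over other_file and return the most similar chunk."""
    lines = other_file.splitlines()
    target = tokens(cursor_context)
    best, best_score = "", -1.0
    for start in range(max(1, len(lines) - window + 1)):
        chunk = "\n".join(lines[start:start + window])
        score = jaccard(target, tokens(chunk))
        if score > best_score:  # strict '>' keeps the earliest chunk on ties
            best, best_score = chunk, score
    return best


def build_prompt(prefix: str, snippet: str, path: str) -> str:
    """Hypothetical layout: a commented-out snippet from another file, then the code before the cursor."""
    commented = "\n".join(f"# {line}" for line in snippet.splitlines())
    return f"# Compare this snippet from {path}:\n{commented}\n{prefix}"


other_file = """import math

def area_of_circle(radius):
    return math.pi * radius ** 2

def greet(name):
    print("hello", name)"""

prefix = "def circle_circumference(radius):\n    return 2 * math.pi *"
snippet = best_snippet(prefix, other_file)
print(build_prompt(prefix, snippet, "geometry.py"))
```

The retrieved `area_of_circle` window shares most of its identifiers with the code being written, so including it gives the model useful local context it would not otherwise see.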

Code up your own GPT 🧨

Code: https://github.com/karpathy/minGPT

Andrej Karpathy has released a 300-line version of GPT. It echoes what I wrote above about building smaller-scale versions of existing systems. Go through this repository to learn how to build your own GPT; I found the README really useful too. This is a fantastic resource for anyone interested in LLMs.
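At the heart of any GPT is causal self-attention: each position can attend only to itself and earlier positions. Here is a minimal single-head version in plain Python, written for readability rather than speed. minGPT itself uses PyTorch with multiple heads and learned query, key, and value projections, which this sketch leaves out; the inputs `q`, `k`, and `v` are assumed to be already projected.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q: list[list[float]], k: list[list[float]],
                          v: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v: lists of T vectors. Output position t mixes only v[0..t].
    """
    d = len(q[0])
    outputs = []
    for t in range(len(q)):
        # Score only positions 0..t: skipping later positions is the causal mask.
        scores = [sum(a * b for a, b in zip(q[t], k[s])) / math.sqrt(d) for s in range(t + 1)]
        weights = softmax(scores)
        outputs.append([sum(w * v[s][j] for s, w in enumerate(weights))
                        for j in range(len(v[0]))])
    return outputs


# With all-zero queries every score is 0, so each position averages v[0..t].
q = [[0.0, 0.0] for _ in range(3)]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in causal_self_attention(q, k, v):
    print(row)
```

Changing `v[2]` leaves the first two outputs unchanged, which is exactly the property that lets a GPT be trained to predict every next token in parallel.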
