Harry Potter and the Chamber of Embeddings

A deep dive into the swiss army knife of machine learning

You might be wondering why this edition is showing up in your inbox on a Monday. Well, pesky sniffles took over the household, and safe to say that I didn’t have Madam Pomfrey’s magical solutions to help me recover sooner. So, thank you for understanding. I value your time and attention.

In other news, I have two big surprises coming up for you in the next couple of weeks. Both of these are something that I’ve been working hard on behind the scenes and I can’t wait to share them with you. But you’ll have to wait until Thursday to know what they are! Let’s crack on with our regularly scheduled programming.

This Week on Gradient Ascent:

An embedding special! 🔤

Accio insights: The marauder's map of the ML world

Somewhere in a parallel universe…

"Harry," said Ron. "Say something. Something in Embedtongue."

He stared hard at the tiny engraving, trying to imagine it was real.

"Open," he said. He looked at Ron, who shook his head. "English," he said.

Harry looked back at the computer, willing himself to believe it was AGI.

"Open," he said.

Except that words weren't what he heard; a strange set of beeps escaped him, and at once, the computer glowed bright with the blue screen of death and began to spin. It sank right out of sight, leaving a large pipe exposed, wide enough for a man to slide into.

"I'm going down there," he said…

Harry Potter is one of my all-time favorite series. I read the Philosopher's stone and Chamber of Secrets only well after they were released. But, for every book that followed, I biked feverishly to the nearest bookstore and camped outside the building early in the morning. I wanted to be the first to read the next book and didn't want my friends to spoil the plot for me (and try they did).

The Marauder's map is one of the most incredible inventions in the series. It allows the holder to see the entirety of Hogwarts and where every character is in the fabled castle. With the map in hand, Harry and his friends weave in and out of tunnels and secret passageways, dodging pesky professors, ghosts, and foes as they embark on their adventures (Thank you, Messrs Moony, Prongs, Padfoot and Wormtail).

Just as the map allows Harry to see everything clearly and in context, machine learning models use a map of similar power to see data in context.

These are called embeddings!

Let's journey into the chamber of embeddings and unlock its mysteries.

What's an embedding?

Computers speak in binary. They see the world in 1s and 0s. If we showed a computer a set of words, it wouldn’t have a clue what they are. So, we need a way to tell the computer what each word is. But couldn't we just assign a number to each word in the dictionary and be done with it? We could, but the results suck (Brilliant people tried this!).

The simple reason is that you don't get any context if you randomly assign numbers to words. It's an arbitrary assignment. For example, look at the two sentences below. They use similar words, but the ordering of the words changes the meaning. Using random numbers, computers can't get that context or capture the relationship between words.

Another approach researchers have tried with some success is called one-hot-encoding. Here, we represent each word as a vector of ones and zeros. To one-hot encode a word, we simply create a vector of all zeros that is as long as the number of words in our vocabulary. The vector for a word has zeros everywhere except at the index corresponding to that word.

In the example above, we have four words. Thus the length of each vector is four. The encoding for each word has a "1" at the index corresponding to that word. To encode a sentence, we can concatenate the one-hot representations of each word.

However, this approach has two major limitations. First, each time we add a new word to the vocabulary, we need to increase the length of the vectors and redo the encoding process. This is tedious and wastes a lot of memory since the one-hot representation is sparse (99.9% of the values in a one-hot vector are zero!). Imagine that your vocabulary has ten million words, and you need to add a new word. Second, one-hot encoding doesn't place similar words closer together. Therefore, we are deprived of context!

So what's the solution? Enter embeddings.

Embeddings are also a way to represent words as numbers. However, they are much better than the simplistic representations we've seen so far.

Embeddings are vectors too, but unlike one-hot vectors, they are dense and packed with information. In fact, embeddings are a vector of floating point numbers that are learned, not assigned!

Why does this matter? A few reasons, actually. Since embeddings are learned, we don't need to design them by hand. This reduces tedium significantly. Also, we don't need to change the length of the vectors (a.k.a word vectors/embeddings/big kahuna) each time we ingest a new set of words into the vocabulary. So, the vectors can be of a fixed length, which is far more scalable than the one-hot approach. Finally, these vectors are learned by feeding machine learning models tons and tons of data. They see where a given word appears in various sentences and with which other words. Thus, these models see words in context.

Let's dive into this a bit more.

What's in a word?

"You shall know a word by the company it keeps." ~ John Rupert Firth

The quote above summarizes everything you need to know about creating embeddings. To learn embeddings, we take a large corpus of text and train a model to predict something based on that text. For example, we could feed movie reviews to a model and ask it to guess the sentiment of these reviews. We could instead ask it to guess the most likely words that can surround a given word in a sentence or guess a word given its surrounding words. The latter approach is what Google used to train their famous Word2Vec (Word to Vector) model way back in 2013. Essentially, we trick the model into thinking it's learning to solve a task we care about. However, the real gold is in the model's weights (trainable parameters).

Once the model has been trained, these learned weights position similar words closer together in the embedding space. But, conversely, dissimilar words are pushed farther apart. How does this happen?

What do embeddings look like?

Recall that embeddings are just vectors of floating point numbers. We can pull out some graph paper and visualize these as points on the graph. During training, the model tweaks the individual numbers in each vector so that vectors corresponding to two contextually dissimilar words are pulled far away from each other. Similarly, vectors that correspond to contextually similar words are pushed together. That's literally it!

One thing we still need to talk about is what the numbers in each vector are in the first place. These numbers are measures of various attributes. For example, if we looked at the word ball, it would score high on the roundness attribute but low on the height attribute. Likewise, a cake would score high on sweetness but low on strength. Each number (attribute) in a vector is tweaked so that collective values in the vector represent the word. This allows the model to push and pull words based on their similarity. The critical thing to note here is that we don't tell the model what these attributes are. Instead, it learns the best features from the data we provide.

I know this is a lot to take in. So, to illustrate all of this, I trained a Word2Vec model on the Harry Potter series. Here are some popular characters from the series plotted as points in the embedding space. Note: I had to reduce each embedding from 10,000 values (the length of my embedding vectors) to two, so I could visualize them. If you know of a way to visualize 10,000 dimensions, let me know 😀.

We can see that characters frequently appearing together in the books are closer together in the embedding space. There're some interesting nuances here. Take a look at the point representing Snape and the point representing Severus. Why do we see two points for the same person? While processing the corpus, I broke sentences into individual words before allowing the model to learn embeddings from them. Thus, Severus Snape becomes Severus and Snape. Also, throughout the series, certain characters refer to him as Snape (Hagrid, Harry, Ron, etc.) while others call him by his first name, Severus (Dumbledore, McGonagall, etc.). See the positions of Severus and Snape with respect to these characters. Cool right?

But embeddings don't stop at just putting frequently co-occurring words closer together. They can also learn characteristics (provided the corpus is large enough). Look at the figure below. I plotted words representing qualities, traits, and symbolic objects like power, kind, evil, death, horcrux, etc. I also plotted Dumbledore and Voldemort. Look at how close the negative words are to Voldemort. Similarly, positive words are more proximate to Dumbledore. Note that evil and kind are far apart too. That's how awesome embeddings are.

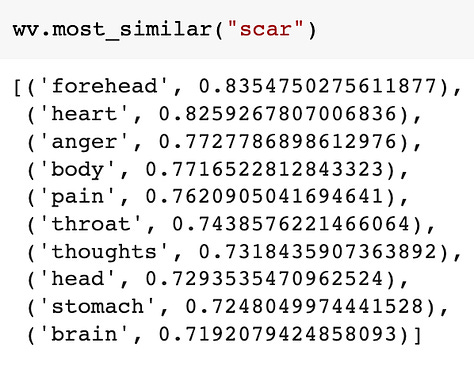

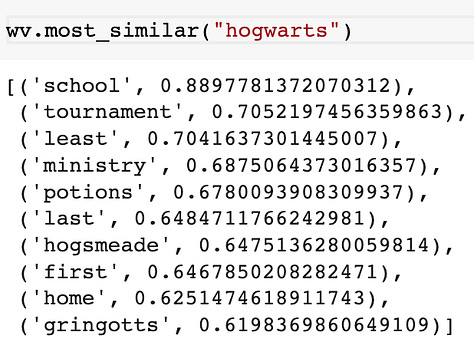

To drive this point home, look at the following images. I asked my Word2Vec model to return the closest words for a given word. The results are fascinating!

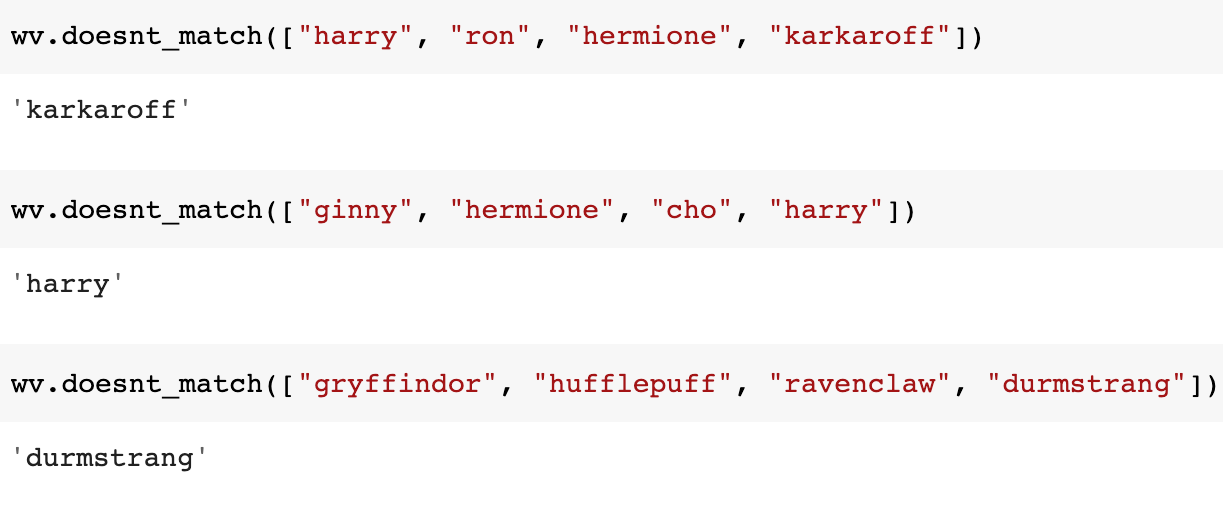

James Potter was my favorite result (Hit me right in the feels). You can also use embeddings to spot oddballs from a list of items. Look at these results.

Word similarity and arithmetic

The next question we need to answer is how we measure the similarity between two words (vectors). I'll have to draw in some math here, so apologies in advance. Dust off your high school math textbook and turn to the chapter on trigonometry. The cosine of an angle is the ratio of the adjacent side of a right-angled triangle to its hypotenuse. The cosine of 0 degrees is 1. The cosine of 90 degrees is 0.

I agree. This definition is absolutely useless to you. But, it turns out that the cosine can be used to measure the similarity between two vectors. Here's how. The keen-eyed observer that you are, you would have immediately noticed that the cosine of an angle decreases as the angle increases.

So, the cosine value decreases if the angle between two vectors increases. If two words are similar, their word vectors will have a smaller angle between them. Thus, the cosine value of this angle will be high. Conversely, if two word vectors are dissimilar, the angle between them will be larger, and the cosine value will be smaller. This measure is called the cosine similarity metric. Bingo!

I'm sure you're itching to ask, "Hang on. You've just plotted some points so far. Where do lines come in the picture?".

Easy. In the plots above, connect each point with the origin (0,0), and you have lines. While this is a very simplistic view of things, it will suffice for our purposes. Look at this example below:

The Nimbus and Firebolt are both racing brooms and thus will have a smaller angle between them. This would lead to a higher cosine value. However, if we compare Dumbledore and Voldemort, they'll have a larger angle between them and, thus, a lower cosine value.

In some cases, we can also do arithmetic with words! For example, the words king, man, queen, and woman will appear clustered together in the embedding space. Thus the arithmetic operation "king - man + woman" yields the result "queen". Don't believe me? I ran this equation on a pretrained Word2Vec model. Look at the result below.

Where are embeddings used?

All of this is super impressive, but the real proof of the pudding lies in practical applicability. Embeddings power so many things we use daily. While we've discussed how text gets converted to embeddings above, you can create embeddings for just about anything - Music, images, categories, and much more. Here are some of the popular ones:

Search & chatbots

You'll be hard-pressed to find any language model that doesn't use embeddings in some way. These models power search - searching for things (like Google or Bing), searching within documents, searching for your favorite song, image-based search, etc. They also power chatbots like ChatGPT.

Generative AI

Models like DaLL-E, Stable diffusion, and Midjourney also take prompts from us users and produce mind-blowing imagery. So how do they know what to do from cryptic prompts? They use a bunch of embeddings (both text and image) under the hood to make it possible.

E-commerce & music streaming

Recommendation systems that power Amazon's online store or Spotify also use embeddings. Let's say you love to listen to the Eurodance group Eiffel 65 (I'm blue da ba dee…). You'd probably also listen to Aqua and Vengaboys (don't ask how I know this). How does Spotify find this out? Every user has an embedding associated with them. That means you have one too! This embedding is updated based on the songs you listen to. Spotify might use this to put you next to other users who listen to similar music. It also has another embedding table with embeddings for each song, and similar songs are closer together. Using these pieces of information, it can recommend new songs for you.

There are many other uses of embeddings that I've not listed above. They are like swiss-army knives, versatile and powerful. Just as expelliarmus is Harry's favorite spell, embeddings are my favorite ML tool. After this, I'm sure they'll be yours too!

Resources to learn more

Google's video lecture on embeddings

If you learn best by watching videos, here's a great video from one of Google's engineers on embeddings from their crash course on machine learning. In fact, I'd recommend going through the entire crash course.

Link: https://developers.google.com/machine-learning/crash-course/embeddings/video-lecture

A chapter on embeddings from "Speech and Language Processing"

If you're more of the learn-by-reading kind, I've got you covered. This chapter from the book "Speech and Language Processing" by Jurafsky et. al covers embeddings in rigorous detail.

Link: https://web.stanford.edu/~jurafsky/slp3/6.pdf

A Tutorial on building your own embeddings

If you love to learn by building, here's a tutorial on Kaggle that teaches you how to create your own embeddings using Word2Vec.

Link: https://www.kaggle.com/competitions/word2vec-nlp-tutorial/overview/description

I love how you tied Harry Potter into all this, and you make it look so easy. This is a fun read, Sairam!

And, yes, in fact back in the day, I listened to Eiffel 65 (really just their number one hit) and Aqua. Although, I must confess I haven't heard of Vengaboys. I appreciate the recommendation, though. 😆

What a brilliant article. I feel I understand embeddings now!