OpenAI's Build Hour and Andrew Ng's Multi-Agent Masterclass - The Tokenizer Edition #4

This week's most valuable AI resources

Hey there! OpenAI walked through its new Agents SDK, Andrew Ng broke down multi-agent architectures, and medical AI showed some promising results when trained on domain-specific data. Turns out models adapted for medical imaging can outperform general-purpose ones on certain benchmarks. Progress, if not revolution.

New here?

The Tokenizer is my resource-focused newsletter edition where I curate the best papers, videos, articles, tools, and learning resources from across the AI landscape. Consider it your weekly dose of everything you need to stay ahead in machine learning.

TL;DR

What caught my attention this week:

• 📄 Papers: A vision foundation model adapted for medical segmentation that rivals specialized systems, portrait animation with dual reference controls, and surprising insights about critic models as policy models

• 🎥 Videos: OpenAI's latest on building production agents, NVIDIA's physics-based audio tech, an intuitive explainer on how word vectors encode meaning, and Stanford's refreshed computer vision course

• 📰 Reads: Deep dive into Mixture-of-Experts architectures, the truth about LLM hallucinations from OpenAI, and what makes Claude Code exceptional

• 🛠 Tools: A comprehensive agentic AI course and an open-source framework for building coding agents

• 🎓 Learning: Andrew Ng's frameworks for architecting multi-agent systems in real-world applications

📄 5 Papers

MedDINOv3: How to adapt vision foundation models for medical image segmentation?

https://arxiv.org/abs/2509.02379 | GitHub

MedDINOv3 adapts DINOv3, a vision foundation model trained on natural images, to medical image segmentation. It combines multi-scale token aggregation with a multi-stage, domain-adaptive pretraining recipe on 3.87M CT slices to bridge the gap between natural and medical images, and it matches or beats specialized medical systems across four segmentation benchmarks.

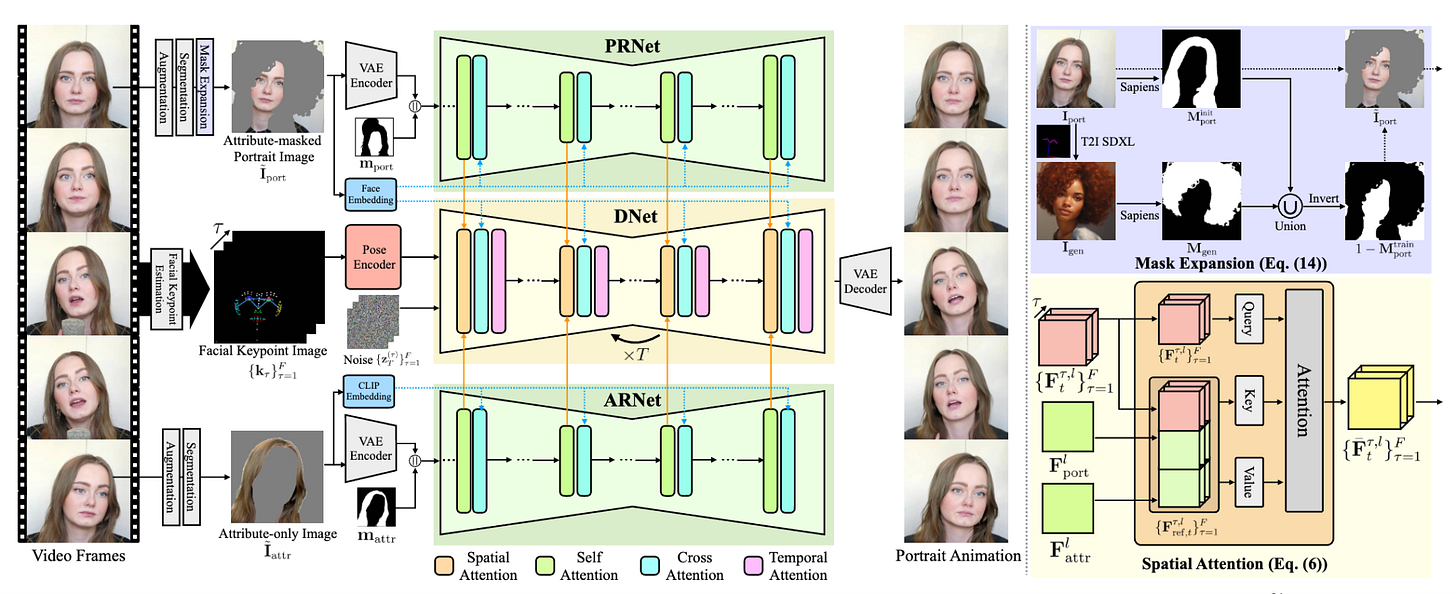

Durian: Dual Reference-guided Portrait Animation with Attribute Transfer

https://arxiv.org/abs/2509.04434 | GitHub

Portrait animation gets a thoughtful upgrade with Durian's dual reference networks, which inject spatial features from both the portrait and the attribute images into the diffusion process, enabling facial attribute transfer without retraining. Unlike previous methods that struggle with attribute consistency across frames, Durian uses self-reconstruction training and a mask expansion strategy that allow multiple attributes to be composed in a single generation pass. The temporal consistency holds up well, even with complex attribute combinations.

LLaVA-Critic-R1: Your Critic Model is Secretly a Strong Policy Model

https://arxiv.org/abs/2509.00676 | GitHub

Here's an interesting finding: critic models trained to evaluate outputs can also serve as capable policy models for generation. LLaVA-Critic-R1 shows this by applying reinforcement learning to preference data. The resulting model works as both a critic and a policy, performing well across 26 benchmarks with an average gain of 5.7% over its base model. The research suggests we might be able to unify evaluation and generation in a single model.

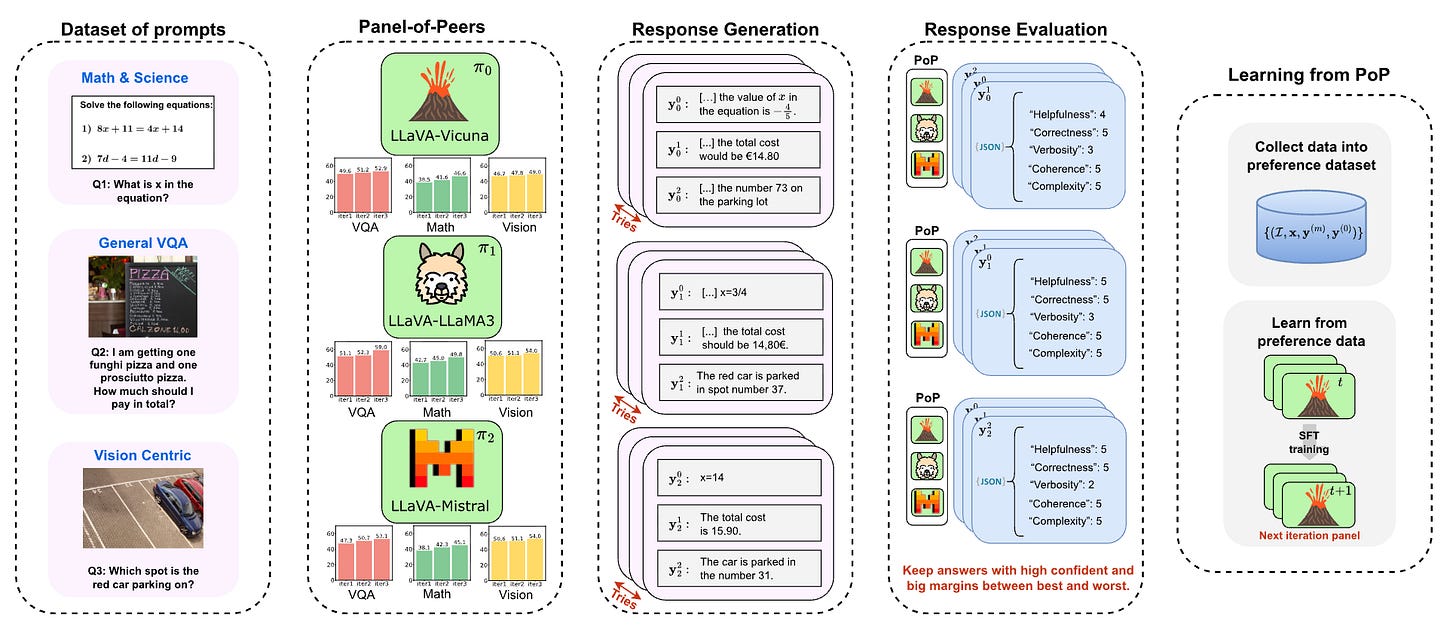

Improving Large Vision and Language Models by Learning from a Panel of Peers

https://arxiv.org/abs/2509.01610

Human feedback gets some competition from peer review. The Panel-of-Peers framework has multiple LVLMs evaluate and learn from their collective outputs through iterative self-improvement. By simulating a classroom where models generate, assess, and refine responses, the approach raises the average score across fifteen benchmarks from 48% to 57% without requiring extensive human-labeled datasets.
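To make the generate-assess-refine loop concrete, here is a minimal sketch of one peer-review round, assuming each model can both answer a prompt and score another model's answer. The `generate`/`judge` interfaces and the toy length-based judge are hypothetical, and the paper's actual scoring and training procedure may differ; the sketch only shows how peer scores can turn raw outputs into preference pairs without human labels.

```python
from statistics import mean
from typing import Callable, Dict, Tuple

# Hypothetical interfaces (not from the paper):
#   generate(prompt) -> response text
#   judge(judge_name, prompt, response) -> score in [0, 1]
Generator = Callable[[str], str]
Judge = Callable[[str, str, str], float]


def peer_preference_pair(
    prompt: str, models: Dict[str, Generator], judge: Judge
) -> Tuple[str, str, Dict[str, float]]:
    """One round of peer review: every model answers, every *other* model scores
    that answer, and the best/worst-rated answers become a (chosen, rejected) pair."""
    responses = {name: generate(prompt) for name, generate in models.items()}
    scores: Dict[str, float] = {}
    for author, response in responses.items():
        # A model never grades its own answer.
        peer_scores = [judge(j, prompt, response) for j in models if j != author]
        scores[author] = mean(peer_scores)
    best = max(scores, key=scores.get)
    worst = min(scores, key=scores.get)
    return responses[best], responses[worst], scores


# Toy usage: three stand-in "models" and a judge that simply prefers longer answers.
models = {
    "a": lambda p: "short",
    "b": lambda p: "a longer answer",
    "c": lambda p: "mid answer",
}
length_judge = lambda judge_name, prompt, response: min(len(response) / 20, 1.0)

chosen, rejected, scores = peer_preference_pair("Describe the image.", models, length_judge)
print(chosen, "|", rejected)  # a longer answer | short
```

In an iterative setup, pairs like these could feed a preference-optimization step (DPO is one common choice), after which the updated models run another round.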

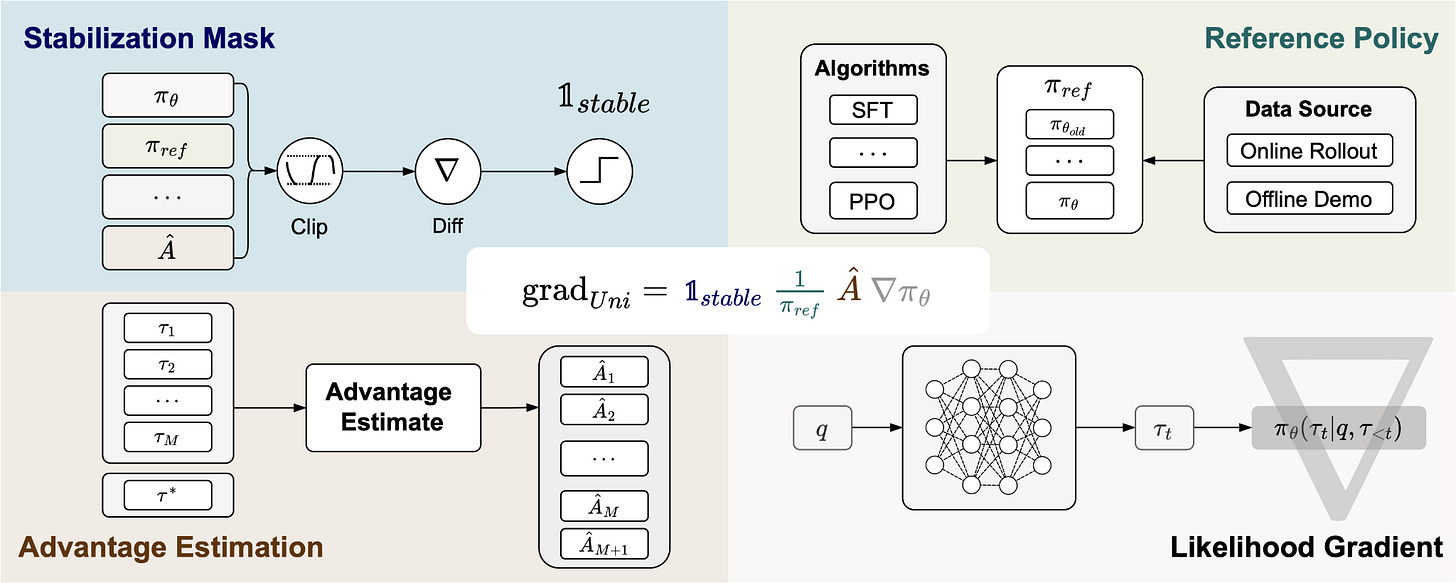

Towards a Unified View of Large Language Model Post-Training

https://arxiv.org/abs/2509.04419 | GitHub

Post-training gets some theoretical grounding from this work, which derives a Unified Policy Gradient Estimator and shows that reinforcement learning and supervised fine-tuning are instances of the same optimization process under different data assumptions. Building on this, the proposed Hybrid Post-Training (HPT) algorithm dynamically selects training signals to balance exploitation of demonstrations with stable exploration. HPT consistently outperforms baselines across mathematical reasoning benchmarks.
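A simplified way to see why SFT and RL can share one gradient form: both gradients are expectations of $\nabla_\theta \log \pi_\theta(y \mid x)$ times a weight, and they differ only in where $y$ comes from and how it is weighted. This is the textbook comparison, not the paper's full Unified Policy Gradient Estimator, which adds further components on top of this shared structure.

```latex
\begin{aligned}
\nabla_\theta J_{\text{SFT}}
  &= \mathbb{E}_{(x,y)\sim \mathcal{D}_{\text{demo}}}\big[\, 1 \cdot \nabla_\theta \log \pi_\theta(y \mid x) \,\big]
  && \text{(fixed demonstrations, unit weight)} \\
\nabla_\theta J_{\text{RL}}
  &= \mathbb{E}_{x\sim \mathcal{D},\; y\sim \pi_\theta(\cdot \mid x)}\big[\, \hat{A}(x,y)\, \nabla_\theta \log \pi_\theta(y \mid x) \,\big]
  && \text{(on-policy samples, advantage weight)}
\end{aligned}
```

Here $J_{\text{SFT}} = \mathbb{E}_{(x,y)\sim\mathcal{D}_{\text{demo}}}[\log \pi_\theta(y \mid x)]$ is the log-likelihood being maximized and $\hat{A}$ is an advantage estimate. Swapping the sampling distribution and the weight moves you between the two regimes, which is the kind of choice a hybrid method can make on the fly.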

🎥 4 Videos

Build Hour: Agentic Tool Calling

OpenAI's technical team walks through their latest Agents SDK and Responses API in this hands-on session. You'll learn about chain-of-thought tool calling patterns, the unified interface for defining agent behaviors, and practical approaches for building production-ready agents. The session includes real integration examples from companies like Coinbase and Box.
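The core loop behind agentic tool calling is simple enough to sketch without any SDK: the model either requests a tool or returns a final answer, and the runtime executes requested tools and feeds the results back. The sketch below is plain Python with a hypothetical stubbed `fake_model` standing in for an LLM; it does not use or mirror the Agents SDK or Responses API interfaces.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical tools; in a real agent these would call APIs or databases.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def add(a: float, b: float) -> float:
    return a + b

# Registry the runtime uses to dispatch tool calls by name.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather, "add": add}

Message = Dict[str, str]

def fake_model(messages: List[Message]) -> Dict[str, str]:
    """Stub for an LLM: asks for one tool call, then answers using the tool result."""
    last = messages[-1]
    if last["role"] == "user":
        return {"type": "tool_call", "name": "get_weather",
                "arguments": json.dumps({"city": "Paris"})}
    return {"type": "final", "content": f"Tool said: {last['content']}"}

def run_agent(user_input: str,
              model: Callable[[List[Message]], Dict[str, str]] = fake_model,
              max_steps: int = 5) -> str:
    messages: List[Message] = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = model(messages)
        if reply["type"] == "final":
            return reply["content"]
        # Execute the requested tool with JSON-decoded arguments and append the result.
        tool = TOOLS[reply["name"]]
        result = tool(**json.loads(reply["arguments"]))
        messages.append({"role": "tool", "name": reply["name"], "content": str(result)})
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("What's the weather in Paris?"))  # Tool said: Sunny in Paris
```

The `max_steps` cap is the important design choice here: without it, a model that keeps requesting tools would loop forever.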

NVIDIA's Tech: The Physics Engine That Fooled Everyone's Ears!

Károly Zsolnai-Fehér from Two Minute Papers covers NVIDIA's advances in physics-based audio simulation. The technology generates realistic audio from physical interactions in real time, creating sound effects that are difficult to distinguish from recordings. This represents notable progress in procedural audio generation, with applications in gaming, virtual reality, and content creation.

How word vectors encode meaning

3Blue1Brown explains how word embeddings capture semantic relationships in this concise video. Grant Sanderson breaks down the mathematical intuition behind vector-space representations of language, making complex concepts accessible without losing important details. It's useful if you work with language models and want to understand the underlying mechanics.
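The classic demonstration that directions in embedding space carry meaning is analogy arithmetic: king - man + woman lands near queen. The sketch below uses hand-made 3-dimensional toy vectors (an assumption for illustration; real embeddings are learned and have hundreds of dimensions) and cosine similarity to find the nearest word.

```python
import math
from typing import Dict, List

# Hand-made toy embeddings; the dimensions loosely mean [royalty, male, female].
EMB: Dict[str, List[float]] = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.2, 0.2],
}

def cosine(u: List[float], v: List[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def analogy(a: str, b: str, c: str) -> str:
    """Solve 'a is to b as c is to ?' by finding the word closest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(EMB[a], EMB[b], EMB[c])]
    # Exclude the query words themselves, as is standard for analogy tests.
    candidates = [w for w in EMB if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(EMB[w], target))

print(analogy("man", "king", "woman"))  # queen
```

Cosine similarity is used instead of raw distance so that only the direction of a vector matters, not its length.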

Deep learning for computer vision

https://youtube.com/playlist?list=PLoROMvodv4rOmsNzYBMe0gJY2XS8AQg16

Stanford's gold-standard computer vision course, CS231n, covers everything from convolutional networks to modern transformer architectures. The 2025 playlist includes lectures on image classification, object detection, segmentation, and recent advances in vision-language models. It's helpful for building foundational knowledge or refreshing your understanding of computer vision fundamentals. Technically, this isn’t one video, but since this is the first time the playlist has been updated since 2017, I had to share it :).

📰 3 Curated Reads

NanoMoE from Scratch

Cameron R. Wolfe, Ph.D. delivers a thorough deep dive into Mixture-of-Experts architectures by building nanoMoE from scratch in PyTorch. The post covers everything from router mechanisms to auxiliary losses, providing both theoretical understanding and practical implementation details. With frontier models like DeepSeek-R1 using MoE architectures, this guide helps you understand the technical foundations behind these systems.
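Two of the pieces the post covers, the router and the auxiliary loss, fit in a few lines. This is a minimal pure-Python sketch assuming top-k softmax routing and a Switch-Transformer-style load-balancing loss; it is not code from the nanoMoE post, whose exact router and loss formulation may differ.

```python
import math
from typing import Dict, List

def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits: List[List[float]], k: int = 2) -> List[Dict[int, float]]:
    """For each token, keep the k most probable experts and renormalize their weights."""
    routes = []
    for logits in router_logits:
        probs = softmax(logits)
        top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
        kept = sum(probs[i] for i in top)
        routes.append({i: probs[i] / kept for i in top})
    return routes

def load_balancing_loss(router_logits: List[List[float]]) -> float:
    """Switch-style auxiliary loss N * sum_i f_i * P_i, where f_i is the fraction of
    tokens whose top-1 expert is i and P_i is the mean router probability for expert i.
    It is 1.0 when tokens are spread evenly across experts and larger when routing
    collapses onto a few experts."""
    probs = [softmax(l) for l in router_logits]
    num_tokens, num_experts = len(probs), len(probs[0])
    f = [0.0] * num_experts
    for p in probs:
        f[max(range(num_experts), key=lambda i: p[i])] += 1.0 / num_tokens
    P = [sum(p[i] for p in probs) / num_tokens for i in range(num_experts)]
    return num_experts * sum(fi * pi for fi, pi in zip(f, P))

balanced = [[5, 0, 0, 0], [0, 5, 0, 0], [0, 0, 5, 0], [0, 0, 0, 5]]
collapsed = [[5, 0, 0, 0]] * 4
print(round(load_balancing_loss(balanced), 3), round(load_balancing_loss(collapsed), 3))
```

During training, a loss like this is typically scaled by a small coefficient and added to the language-modeling loss, nudging the router to spread tokens across experts instead of overloading a favorite few.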

Why LLMs Hallucinate

https://openai.com/index/why-language-models-hallucinate/

OpenAI explains why language models hallucinate in this technical yet accessible post. The core argument is that standard training and evaluation procedures reward guessing over acknowledging uncertainty: on accuracy-only benchmarks, a confident guess scores better than "I don't know." The piece also traces how hallucinations originate in next-word-prediction pretraining and argues for scoring that penalizes confident errors more than expressions of uncertainty.
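A small expected-value calculation shows why grading matters for hallucinations. Assume a correct answer earns 1 point, "I don't know" earns 0, and a wrong answer costs a penalty. With no penalty (plain accuracy), any nonzero chance of being right makes guessing worthwhile. Setting the penalty to t/(1-t) for a confidence threshold t (an illustrative rule assumed here) means answering pays off only when the model's chance of being right exceeds t.

```python
def wrong_answer_penalty(threshold: float) -> float:
    """Penalty that makes answering break even exactly at confidence == threshold."""
    return threshold / (1.0 - threshold)

def expected_score(p_correct: float, penalty: float) -> float:
    """Correct answer: +1, wrong answer: -penalty, abstaining ("I don't know"): 0."""
    return p_correct * 1.0 - (1.0 - p_correct) * penalty

def should_answer(p_correct: float, penalty: float) -> bool:
    # Answer only when the expected score beats the 0 points for abstaining.
    return expected_score(p_correct, penalty) > 0.0

# Accuracy-only grading (penalty 0): even a 30%-confident guess beats abstaining.
print(should_answer(0.3, penalty=0.0))  # True
# Penalized grading with threshold 0.75 (penalty 3): a 50% guess is no longer worth it.
penalty = wrong_answer_penalty(0.75)
print(penalty, should_answer(0.5, penalty), should_answer(0.9, penalty))  # 3.0 False True
```

The break-even point follows from p - (1 - p) * t / (1 - t) > 0, which simplifies to p > t.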

What makes Claude Code so damn good?

https://minusx.ai/blog/decoding-claude-code/

Vivek Aithal analyzes what sets Claude Code apart from other AI coding assistants. The piece examines Claude Code's approach to context management, code generation, and debugging. The insights help explain why it tends to produce reliable, maintainable code, making it useful reading for developers considering AI-assisted coding workflows.

🛠 2 Tools & Repos

Agentic AI Crash Course

Aishwarya Naresh Reganti and Kiriti Badam have created a comprehensive free course on agentic AI. The curriculum runs from fundamental concepts to multi-agent architectures, with practical exercises and real-world case studies. Whether you're a PM trying to understand agent capabilities or an engineer building production systems, this course provides structured learning materials.

DeepCode: Open Agentic Coding

https://github.com/HKUDS/DeepCode

An open-source framework for building coding agents that can handle complex software development tasks. DeepCode provides the infrastructure for creating AI systems that can understand codebases, generate solutions, and iterate on implementations. The project offers a practical starting point for teams looking to build custom coding assistants or integrate agentic programming capabilities into their workflows.

🎓 1 Pick of the Week

Architecting Multi Agent Systems

Andrew Ng discusses frameworks for designing multi-agent systems that work in production environments. The session covers coordination patterns, handoff mechanisms, and scaling considerations for enterprise deployments. He focuses on architectural decisions that affect success when moving from single-agent prototypes to multi-agent production systems.
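Handoff mechanisms are easier to reason about with a concrete picture. A minimal version, assuming each agent is just a handler plus a routing rule: a triage agent inspects the task and either handles it or passes control to a specialist, with a cap on handoffs to prevent routing loops. Everything here (the `Agent` class, the keyword-based routing, the agent names) is hypothetical and not taken from the session; in practice the routing decision would usually come from an LLM rather than keyword matching.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

@dataclass
class Agent:
    name: str
    handle: Callable[[str], str]           # the agent's own work on a task
    route: Callable[[str], Optional[str]]  # agent to hand off to, or None to handle locally

def run(agents: Dict[str, Agent], start: str, task: str,
        max_handoffs: int = 3) -> Tuple[str, List[str]]:
    """Follow handoffs from the starting agent until one agent handles the task itself."""
    current = agents[start]
    trace = [current.name]
    for _ in range(max_handoffs + 1):  # allow up to max_handoffs transfers
        target = current.route(task)
        if target is None:
            return current.handle(task), trace
        current = agents[target]
        trace.append(current.name)
    raise RuntimeError("too many handoffs; possible routing loop")

def triage_route(task: str) -> Optional[str]:
    # Hypothetical keyword routing standing in for an LLM's decision.
    text = task.lower()
    if "refund" in text:
        return "billing"
    if "bug" in text:
        return "support"
    return None

never = lambda task: None
AGENTS = {
    "triage": Agent("triage", handle=lambda t: "Triage: answered directly", route=triage_route),
    "billing": Agent("billing", handle=lambda t: f"Billing: processing '{t}'", route=never),
    "support": Agent("support", handle=lambda t: f"Support: looking into '{t}'", route=never),
}

result, trace = run(AGENTS, "triage", "I want a refund")
print(trace)   # ['triage', 'billing']
print(result)  # Billing: processing 'I want a refund'
```

Keeping the trace of which agents touched a task is a cheap way to debug routing decisions once more agents are added.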

Thanks for reading The Tokenizer! If you found something useful here, share it with someone who might benefit. And if you want more curated insights like this, consider subscribing to Gradient Ascent.