It's been a crazy week, folks. A few quick updates before this week's goodies.

Beta Reading

Beta reading for AI for the Rest of Us has started! The first couple of chapters went out to a handful of beta readers. I'm nervous to hear what they have to say. At the same time, I'm excited about incorporating their feedback.

If you signed up to help and haven't yet received an email from me, don't worry. I've partitioned chapters evenly across all the folks who signed up, so I'm definitely counting on you. As I mentioned last week, the chapters will go out in a drip-feed fashion over the course of the next few weeks. This will allow me to balance my editing schedule. Thanks for your patience.

The Book

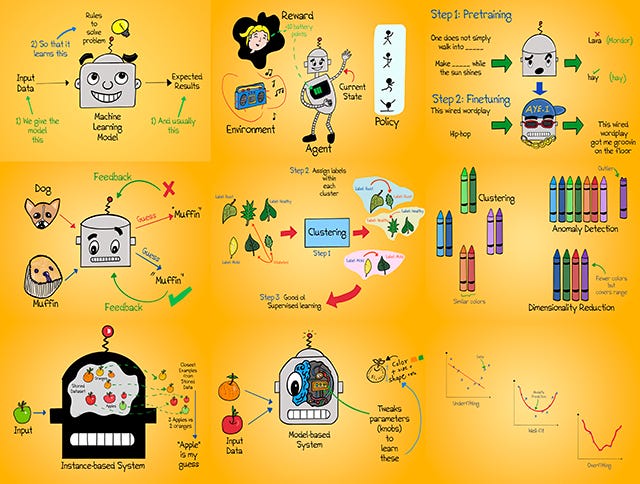

Illustrations for the book are in full swing. I've never drawn this much in my life, and I'm loving it. Here's a small collage of some of the illustrations I've made for the book. What do you think?

Deep Dives

That brings me to the next update. Given that the book's release date is fast approaching, I'll be sharing deep dive articles on a bi-weekly cadence [1]. The learning resources, however, will keep coming your way every week. I'll follow this cadence until the book is launched. With a 9-5, family commitments, and a book draft, I don't want to spread myself too thin. Thanks for understanding.

Feedback

Also, thanks to the many readers who've left feedback on what they'd like to see in upcoming editions. I'll be sure to plan my topics to cover what you'd like to see. Have feedback? Use the form at the end of each edition. It takes less than a minute 🙂.

We're near the end of the image-generating AI series. We've seen Autoencoders, Variational Autoencoders, and Generative Adversarial Networks. The next few deep dives will cover diffusion models and how they produce mind-blowing imagery. To whet your appetite, here are a few resources that span the spectrum of diffusion model tutorials. Whether you like equations, code, video, or plain English, there's something in here for you. Let's go!

This Week on Gradient Ascent:

[Consider reading] A comprehensive article on diffusion models 📚

[Check out] The annotated diffusion model 🧑‍💻

[Watch] A video explainer for text-to-image models 📽️

[Try] Searching for visual prompts 🔤🎨

[Definitely check out] Comprehensive AI art tool & resource chest 🧰

Resources To Consider:

What are Diffusion Models?

Link: https://lilianweng.github.io/posts/2021-07-11-diffusion-models/

Lilian Weng leads applied research at OpenAI. Her blog post on diffusion models is rigorous and very comprehensive. I will warn you that it is math-heavy. Consider reading it if you want to understand the nuts and bolts of these models from a mathematical perspective.

The Annotated Diffusion Model

Link: https://huggingface.co/blog/annotated-diffusion

Prefer code over math? This blog post from Hugging Face walks through the original diffusion model paper line by line in code. It's a great way to see how the math and the code correspond, and if you're a code-first learner, it's the perfect place to start.

A Simple Video Explainer for Text-to-Image Models

This video from Vox is a lovely high-level explainer behind the intuition of how modern text-to-image models work. It's narrated clearly, and the animations help a ton. Worth watching regardless of your experience level.

Prompt Dictionary

Link: https://lexica.art

AI-generated imagery has jumped up a few levels, and the prompts that produce it are highly coveted. If you're wondering what prompt produced an image you love, check out Lexica. It's a prompt dictionary that lets you search for an image and then look at the prompt that was used to create it. Note that it only searches over Stable Diffusion creations, as far as I can tell.

AI Art Tools & Resources

Link: https://pharmapsychotic.com/tools.html

This gold mine has a vast collection of resources and tools for AI art generation. Definitely explore this site. It's got tons of goodies inside for all kinds of generative AI, not just images.

[1] So, the next deep dive will come to your inbox next week. The one after that will be two weeks from that one, and so on. I’ve seen some folks use bi-weekly as “twice a week” and some others use it as “once in two weeks”. I mean the latter here. :)