The Last Mile is Always Human

What's next for Gradient Ascent and the 2025 recap.

We are at a crossroads.

The “wow” phase is over. For two years, generative AI felt like witnessing a magic trick on repeat. Chatbots that could write poetry. Models that conjured images from words. Agents that promised to automate everything we found tedious. The demos were intoxicating. The possibilities felt infinite.

Now comes the harder question: What do we actually do with all of this?

Something has shifted. The noise-to-signal ratio has exploded. LinkedIn feeds overflow with AI-generated thought leadership that says nothing. Inboxes fill with synthetic outreach that fools no one. The tools that promised clarity have produced a fog. And somewhere in that fog, the things worth reading, worth learning, worth building have become harder to find.

I started Gradient Ascent because I believe complex ideas deserve to be understood, not just consumed. I believe that a hand-drawn diagram can unlock an insight that ten thousand words cannot. I believe that the struggle to understand something deeply is not an obstacle to learning.

It is learning.

But I cannot build this newsletter in a vacuum. Not anymore. Not at this crossroads.

I need to know where you are on your climb. What concepts remain fuzzy? What keeps you up at night? What would actually help you build better systems, lead better teams, and think more clearly about the technology reshaping our world?

As a token of thanks, when you complete the survey you’ll receive, within 2 business days, a curated resource pack of the best FREE courses, repos, and other learning resources on topics including LLMs, Agents, RAG, Vision, RL, and more.

Your answers will directly shape what Gradient Ascent becomes next. The topics I cover. The depth I go to. The formats I experiment with. This is not a formality. I will read every response.

Before I share where we are headed, let me tell you where we have been, and what I have been wrestling with along the way.

Where We Have Been

This past year, the Gradient Ascent community grew from 8k readers to 25k readers.

We had 15 curation pieces and a handful of deeper essays. The most popular editions, “Claude Code is not a tool” and “The secrets of distributed training”, sparked conversations that continued for weeks.

But the numbers only tell part of the story.

The truth is, most of my creative energy this year went somewhere else: finishing and launching AI for the Rest of Us.

Writing a book, especially one built around hand-drawn illustrations, is a different beast than writing a newsletter. It demands sustained focus across months, not the weekly rhythm of shipping editions. It forces you to think in chapters, not posts. It consumed me in the best and most exhausting way.

That is why The Tokenizer, my curated weekly roundup, became the workhorse of Gradient Ascent this year. It was the edition I could sustain while wrestling a book manuscript into shape in between a full-time role and family commitments.

I don’t regret that trade-off. The book exists today in the real world. It is helping people who will never read a technical paper understand what is actually happening inside these systems. That matters to me deeply.

But I also felt the absence of something.

The deep-dive visual explainers. The pieces that force me to truly understand a concept before I can draw and write about it. The work that sticks in your brain months after you read it.

That is what I want to return to.

And during the months I spent away from that work, I found myself thinking constantly about why it matters.

The Future of Learning

While writing the book, and in the months since, I have been wrestling with a question that will not let me go:

What is the future of learning in an age of infinite generated content?

This is not an abstract question for me. It is existential. I write a newsletter. I draw illustrations to explain complex ideas. I believe deeply in education as transformation, not transaction. And I am watching the very foundations of that belief get tested.

I have spent months reading, listening, and talking to creators across disciplines. Educators rethinking what a classroom means when every student has a tireless tutor in their pocket. Artists questioning what it means to make something when a machine can generate a thousand variations in seconds. Writers wondering whether the craft of prose still matters when text can be produced on demand.

The conversations have been fascinating and unsettling in equal measure. And they have crystallized something for me about what this newsletter needs to be, and what it must resist becoming.

Because here is what I have come to believe: we are facing a cognition problem and not just a content problem. And if we don’t name it clearly, we can’t fight it.

The Quiet Erosion

Here is what I have been thinking about while planning that next chapter.

We are in the middle of something I call the Quiet Erosion. It isn’t dramatic. It doesn’t announce itself. It happens one small convenience at a time.

The student prompts an AI to write their essay. They get the grade. But they never learned to structure an argument, to wrestle with ambiguity, to find their own voice. The output exists. The skill does not.

The engineer pastes AI-generated code into their system. It runs. But they cannot fully explain what it does. They have introduced a black box into their own creation. When it breaks at 2 AM, they will stare at it like a stranger.

The executive skims an AI summary of a strategy document. They learn the bullet points but miss the subtext. They know the what but not the why. And when the decision demands nuance, they reach for a foundation that never existed.

This is cognitive offloading, and it is subtle. Charlie Gedeon calls it “intellectual deskilling”: letting the copilot become the autopilot. Anthropic’s own research found that 47% of early student AI use was purely transactional. Students wanted the answer, not the understanding. The output existed, but the user gained nothing. Call it atrophy or deskilling. The risk is real.

Think about what happens when you break your arm. The cast immobilizes it, protects it, does the work of holding everything in place while you heal. But when the cast comes off six weeks later, the arm underneath has withered. The muscles have atrophied. The joint is stiff. The very thing that protected you also weakened you, because the arm was never asked to do its job.

That is what we are doing to our minds. Every task we offload is a rep we do not perform. Every answer we accept without struggle is a neural pathway we do not build. The AI holds our cognition in place, and we feel supported. But underneath the cast, we are quietly atrophying.

And while our individual cognition weakens, the collective information environment is rotting too.

The internet is filling with slop. Infinite summaries. Auto-generated code. Synthetic opinions. Content mills churning out articles optimized for algorithms, not humans.

Every platform is drowning in low-quality noise designed to capture attention, not to inform or illuminate. Finding signal in this environment is becoming genuinely difficult.

Finding truth is becoming harder still.

Kurzgesagt identified what they call the Circular Lie, and it haunts me. Here is how it works: an AI hallucinates a fact, and that ends up on the internet. It sounds confident, so another AI scrapes that content and cites it. A human researcher, trusting the citation, includes it in their work.

And just like that, a falsehood embeds itself in the knowledge base, passed off as truth, impossible to trace back to its hollow origin.

This is happening now. We are offloading cognition and, in the process, building cathedrals on quicksand. Worse yet, we are doing it while our ability to detect the quicksand atrophies from disuse.

There is something else too, something harder to measure but impossible to ignore. The erosion of wonder. We used to look at a stunning piece of art or an incredible video and feel awe. Now we look at it with suspicion. Is this real? Is this fake? Was this made by a human or generated by a machine? The default reaction has shifted from appreciation to skepticism.

By flooding us with content, AI has poisoned our ability to trust what we see.

This is the crisis I want Gradient Ascent to address. Not by ignoring AI. Not by pretending we can turn back the clock. But by insisting, stubbornly and deliberately, on the things that still require human struggle, human judgment, human understanding.

Which brings me to the core philosophy of learning.

No Friction, No Growth

Here is the uncomfortable truth about learning: it requires resistance.

Your brain does not build new pathways because you want to understand something. It builds them because you struggle to understand something. The difficulty, the obstacle, is the mechanism. When AI removes the friction, it removes the learning itself.

Think of it this way.

A helicopter can drop you at the summit of a mountain. You step out, take in the view, snap a photo. “Made it to the top!” you announce. But you are a tourist. You do not know the terrain. You could not navigate back down if you tried. The first unexpected storm will leave you stranded.

The climb is different. You walk the path yourself. You feel the incline. You learn which handholds hold and which crumble. You take wrong turns and backtrack. And when you finally reach the summit, you are not a tourist. You are a mountaineer. The knowledge lives in your body.

Both the tourist and the climber arrive at the summit. Only one arrives transformed.

This is what Gradient Ascent means.

I’m not here to helicopter you to the summit. I’m not here to hand you AI-generated summaries so you can skip the complex parts. I’ll help map the route, verify the path, point out where the footing gets tricky, and where the views are worth pausing for.

But you have to do the climbing.

And so do I.

The Pen as a Filter

Some readers of my book have asked me, “Why do you still draw everything by hand? AI can generate diagrams now. They’re getting better every day.”

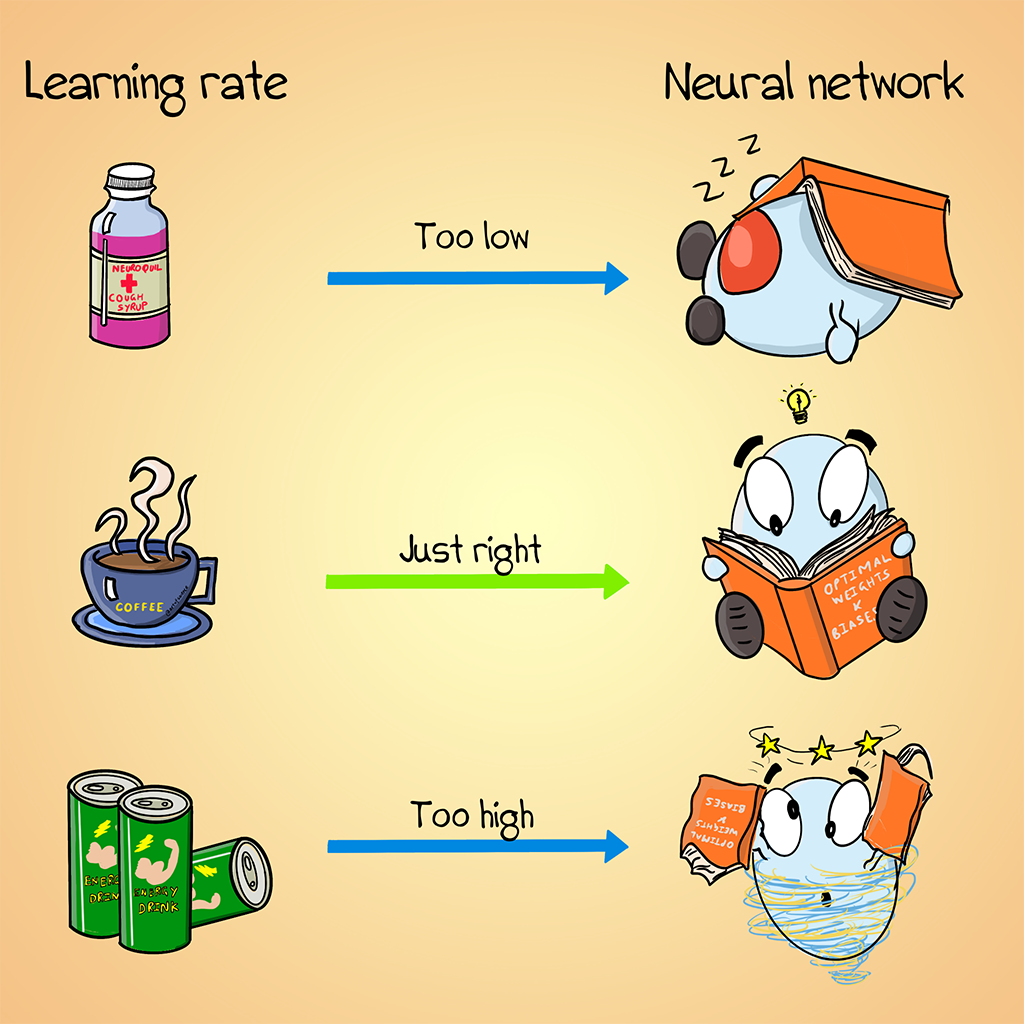

Yes, the models are impressive. Prompt the right words, and a technically polished neural network diagram appears in seconds.

But that observation misses the entire point.

I don’t draw to decorate. I draw to think.

When I sit down to illustrate a concept for my book or the newsletter, I’m not creating art (it would be a stretch to call it that, regardless). I’m running myself through a brutal compression algorithm. I take a 20-page research paper full of equations, jargon, and dense abstraction, and I force myself to strip away everything that is not essential. I search for the single visual metaphor that unlocks the whole thing. I can’t draw it until I understand it. And I can’t understand it until I have struggled with it.

That struggle is the product.

An AI can generate a diagram of a Transformer in seconds. It might even look clean. But it represents a statistical average, a pattern-matched guess assembled from every diagram in its training data. There is no understanding behind it. No filter. No point of view. No personalization.

When I draw, the resulting image is a map of my understanding, handed to you so you don’t have to get lost in the weeds.

The image is not the point. The thinking that produced the image is the point. And that thinking cannot and should not be outsourced.

The Line We Walk

I want to be clear about something. I’m not a Luddite. I’m not here to tell you AI is dangerous and you should reject it.

I use AI every day. I work in the field. I use it to write code, to debug, to automate tedious tasks, to accelerate research. I have compressed weeks of work into hours using these tools. I love efficiency. I love leverage. I love building things faster than I ever could before.

But there is a line.

I do not use AI to verify truth. I do not use it to replace judgment. I do not let it think for me on the things that matter.

AI is a power tool. And power tools are remarkable for the jobs they are designed for. But you would not use a circular saw to perform surgery. You should not use a language model to decide what is true or what is right.

This is what I mean by “the last mile is always human.” Every AI system, no matter how sophisticated, ends with a human decision. The human who chooses to trust the output or question it. The human who catches the hallucination. The human who knows when to override the recommendation. That human needs to understand what is happening beneath the surface.

Otherwise, they are not using the tool. The tool is using them.

Gradient Ascent isn’t here to help you “keep up” with AI news. A thousand newsletters already provide the daily churn of announcements and funding rounds.

This newsletter is for the thinkers and learners. Whether you lead a company or a team of engineers, or you are building your first agent alone in a Jupyter notebook, Gradient Ascent exists to be there with you on that journey. It exists to help you understand the fundamentals so you can wield these tools with precision, not just hype.

Transformers. Vision-language models. Agents. RAG. And anything that comes next. The whole stack, clarified and illustrated, so you can lead and build on solid ground.

In practice: I use AI for scaffolding (enumerations, boilerplate, code stubs), I verify truth in primary sources and triangulate across multiple reputable papers or docs, and I reserve human judgment for causality, ethics, and decisions with irreversible consequences.

The Promise and the Ask

Here is my commitment to you.

I will continue to do the work. I will read the papers, not just the abstracts. I will build the systems, not just describe them. I will verify sources and call out hype. I will struggle with the concepts until I can draw them clearly enough that they stick in your mind (and mine).

I will respect your time. If I do not have something genuinely useful to say, I will not fill your inbox with filler.

And I will keep climbing. This field moves fast. The terrain shifts constantly. But Gradient Ascent will keep mapping the route, honestly and rigorously, with the reader always in mind.

In 2026, I will start posting more of the deep visual explainers alongside The Tokenizer, on topics such as agents in production, VLMs and grounding, and system design for AI products.

If you value that kind of work, if you want a newsletter that treats you as a builder and not a bystander, I need your help shaping what comes next.

Your responses will determine the first three explainer topics, and I’ll publish the roadmap soon.

Five minutes. Your answers go directly into my planning. I will read every one.

Thank you for being on this climb with me.

The summit is not the point. The climbing is the point.

Life before death.

Strength before weakness.

Journey before destination.

~ Brandon Sanderson

Happy New Year!

Sairam

P.S. Remember to grab your FREE resource pack.

Hey Sairam, I'd kept the email for this issue unread in one of my many inboxes and finally got to reading it... I liked so many parts of it that I wanted to comment some of my faves here:

- The struggle to understand something deeply is not an obstacle to learning - it IS learning.

- Quiet Erosion... The output exists. The skill does not.

- Every task we offload is a rep we do not perform.

- The erosion of wonder: the default reaction has shifted from appreciation to scepticism.

- Both the tourist and the climber arrive at the summit. Only one arrives transformed.

Here's to a year of struggling to understand things! 🥂