The Tokenizer Edition #1: What OpenAI's Co-Founder Really Thinks About AI Engineering

This week's most valuable AI resources

Hey there! I've got some interesting developments in mathematical reasoning and model architectures this week. Nothing earth-shattering, but the kind of steady progress that makes you realize we're solving harder problems than we were six months ago. Plus, OpenAI finally released some open weights (it only took them six years).

New here?

The Tokenizer is my resource-focused newsletter edition where I curate the best papers, videos, articles, tools, and learning resources from across the AI landscape. Consider it your weekly dose of everything you need to stay ahead in machine learning.

TL;DR

What caught my attention this week:

• 📄 Papers: Mathematical reasoning benchmarks that go beyond pattern matching, plus autoregressive models making a comeback

• 🎥 Videos: OpenAI's co-founder on what actually makes good AI engineers and practical insights on building AI-first applications

• 📰 Reads: Sebastian Raschka breaks down six years of transformer evolution with his usual thoroughness

• 🛠 Tools: Agent frameworks you can actually use in production without needing a research team

• 🎓 Learning: Master Claude Code workflows for real development projects

📄 5 Papers

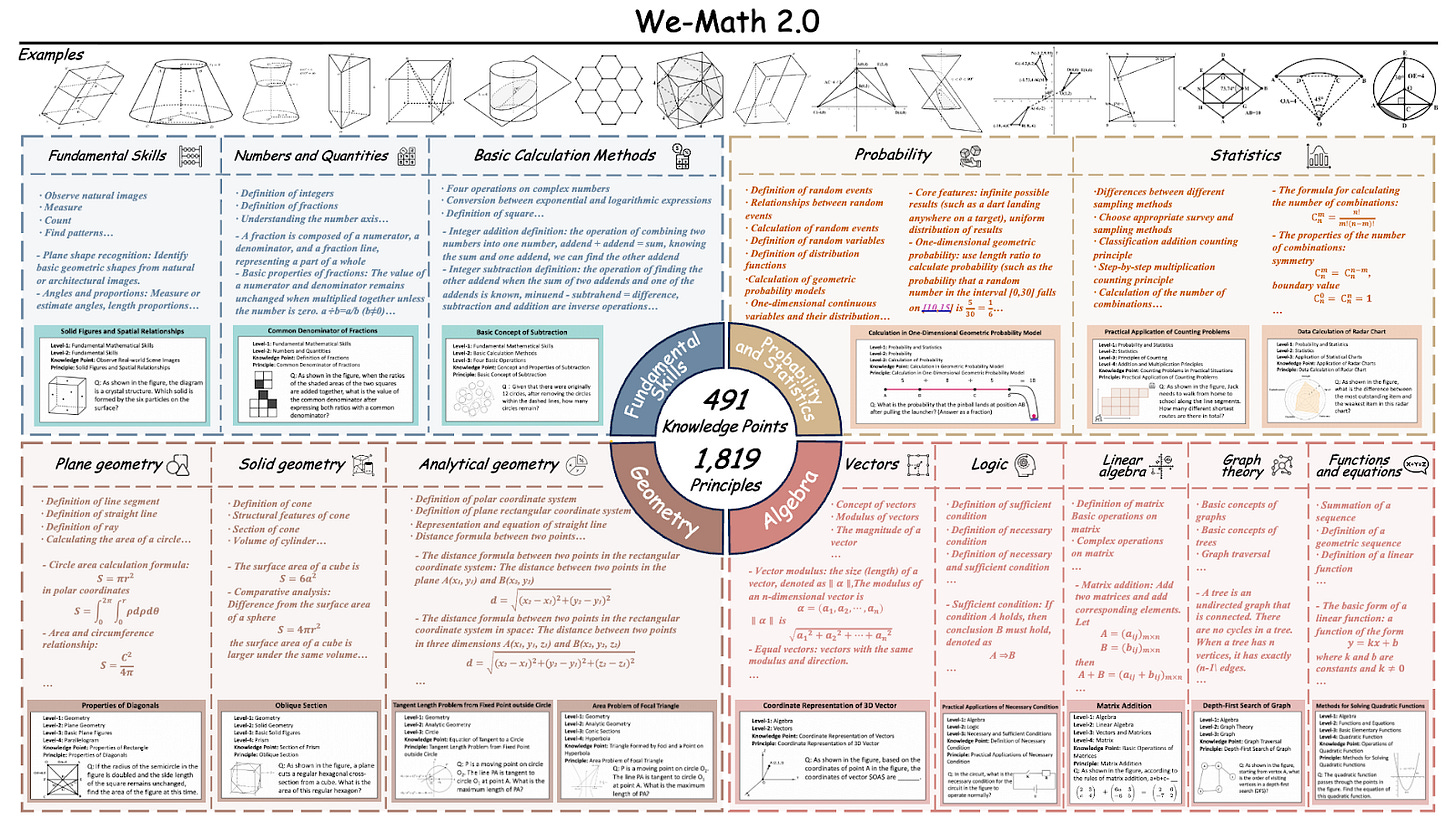

We-Math 2.0: A Versatile MathBook System for Incentivizing Visual Mathematical Reasoning

https://arxiv.org/abs/2508.10433 | GitHub

This research builds a structured mathematical knowledge system with 491 knowledge points and uses reinforcement learning to enhance multimodal mathematical reasoning. Instead of relying purely on pattern matching, the system decomposes complex problems into fundamental concepts before reconstructing solutions. The approach shows promise for building more systematic mathematical understanding in AI systems.

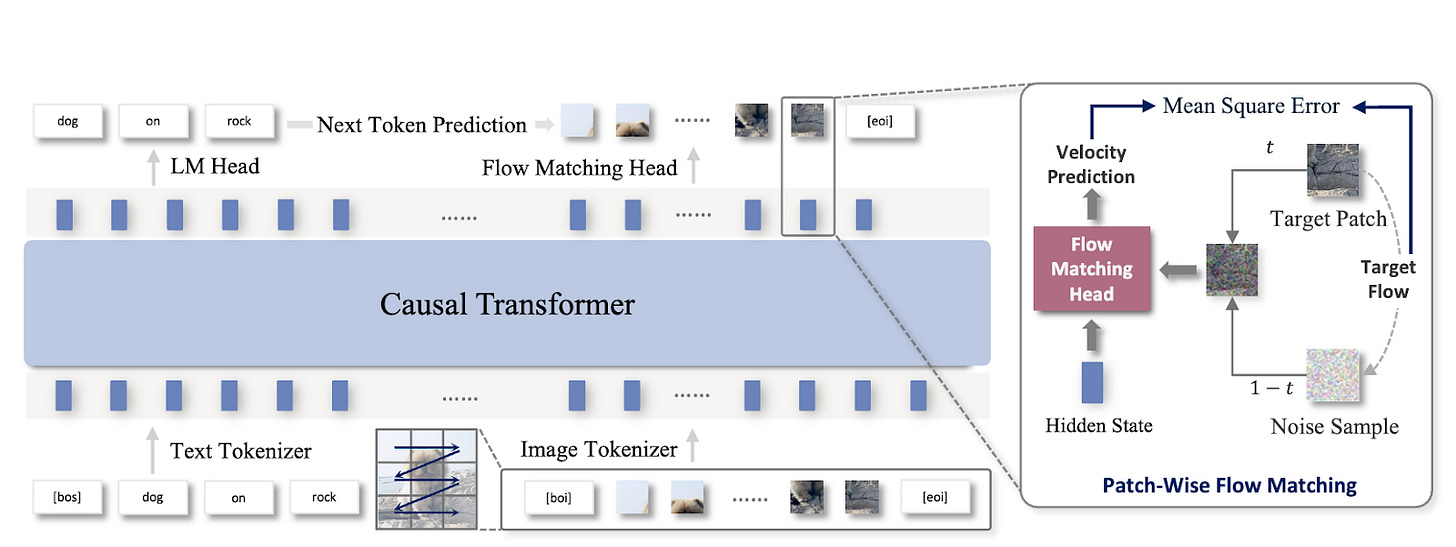

NextStep-1: Toward Autoregressive Image Generation with Continuous Tokens at Scale

https://arxiv.org/abs/2508.10711 | GitHub

A 14B-parameter autoregressive model that works directly with continuous image tokens instead of relying on diffusion models or vector quantization. It pairs the autoregressive backbone with a lightweight flow matching head and reports competitive performance with better efficiency. This suggests autoregressive methods for image generation may be more viable than previously thought.

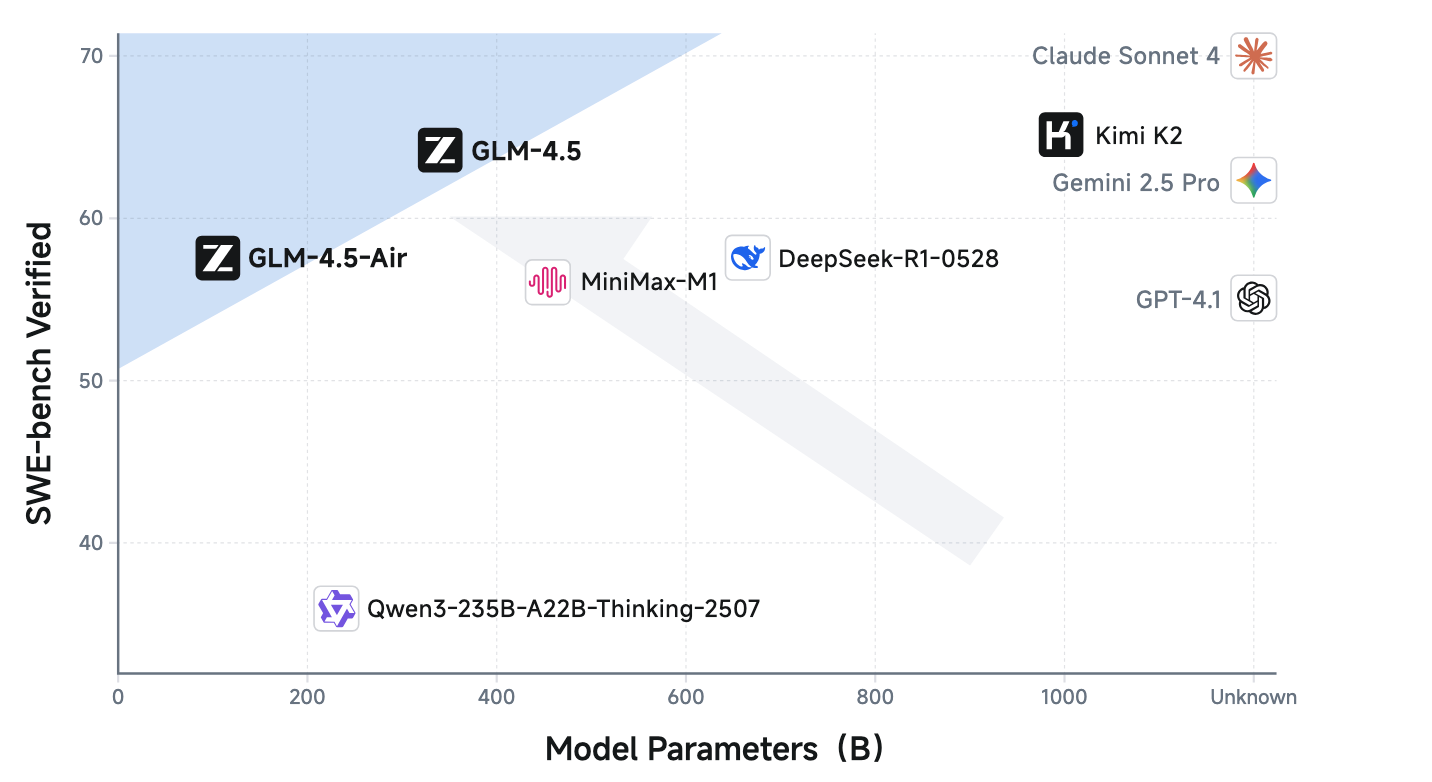

GLM-4.5: Agentic, Reasoning, and Coding (ARC) Foundation Models

https://arxiv.org/abs/2508.06471 | GitHub

A 355B-parameter MoE model that unifies reasoning, coding, and agentic capabilities with hybrid reasoning modes. The model achieves a 90.6% tool-calling success rate and can switch between deep thinking and direct response based on task complexity. Having these capabilities in an open-source model makes them accessible for research and practical applications.

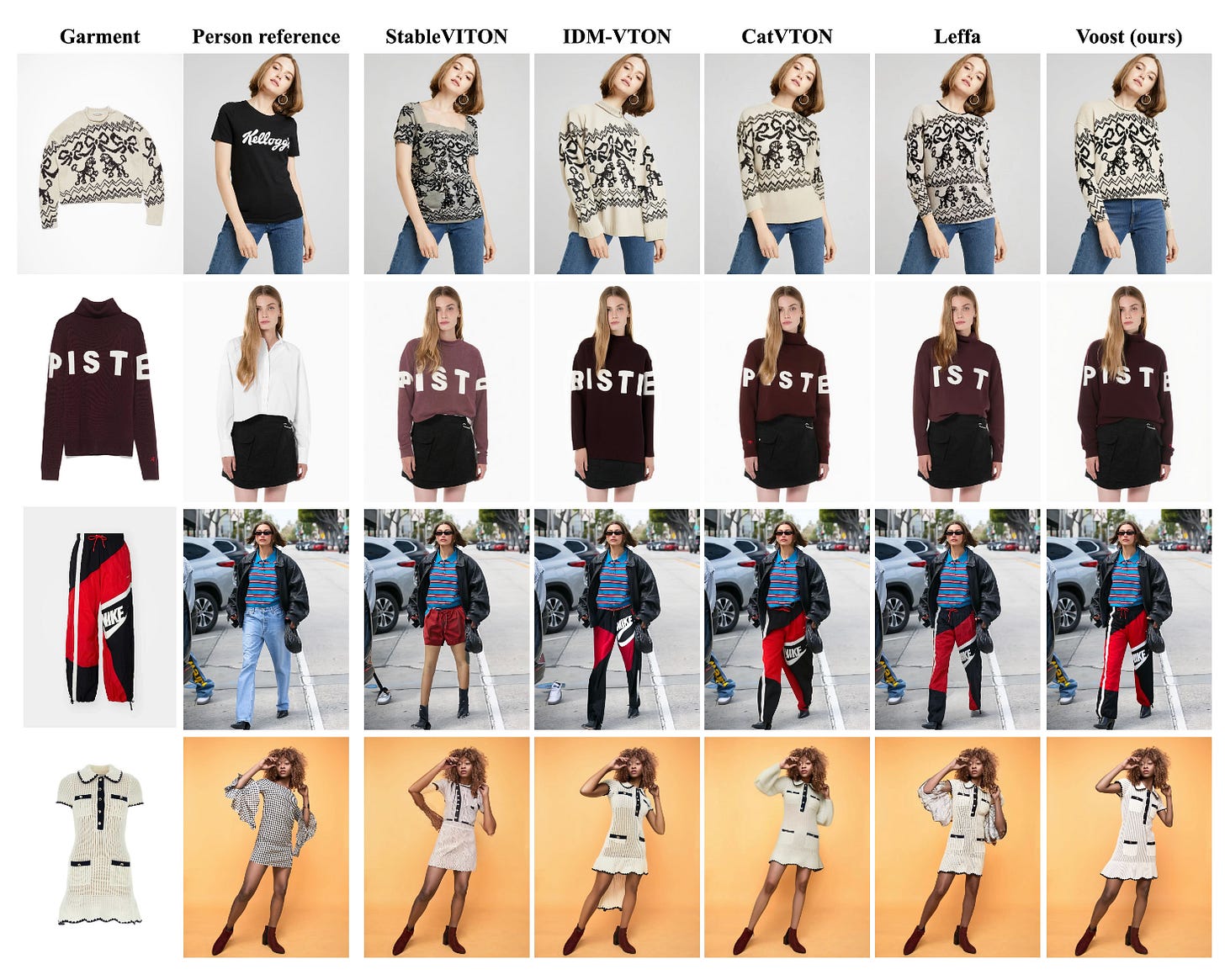

Voost: A Unified and Scalable Diffusion Transformer for Bidirectional Virtual Try-On and Try-Off

https://arxiv.org/abs/2508.04825 | GitHub

Instead of training separate models for virtual try-on and try-off, this research uses a single diffusion transformer that learns both tasks jointly. The bidirectional training improves garment-body correspondence by having each task supervise the other, addressing a persistent challenge in virtual clothing applications.

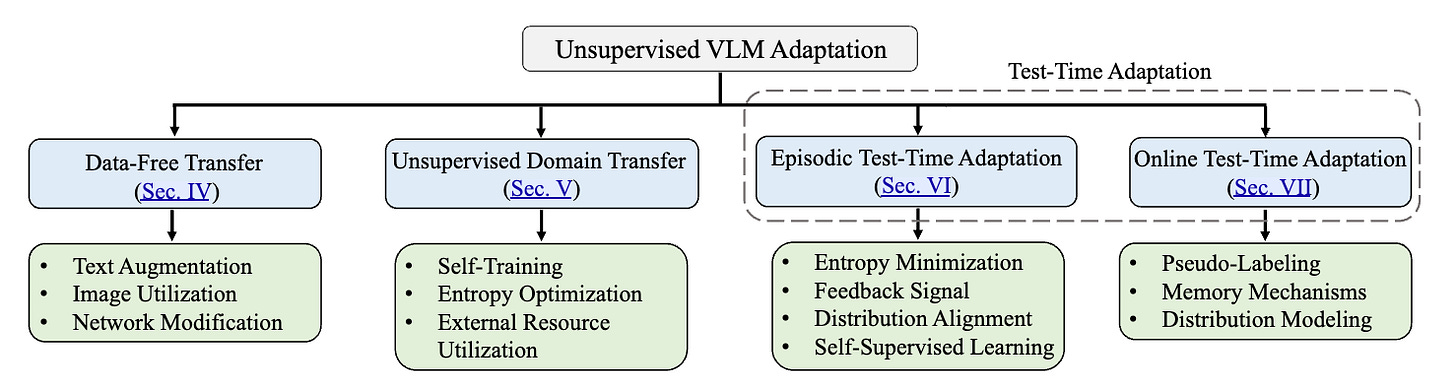

Adapting Vision-Language Models Without Labels: A Comprehensive Survey

https://arxiv.org/abs/2508.05547 | GitHub

This survey organizes unsupervised adaptation methods for vision-language models into four paradigms based on data availability. The taxonomy runs from data-free transfer to online test-time adaptation, providing a useful framework for practitioners working on domain adaptation without labeled datasets.

🎥 4 Videos

What Makes an Effective AI Engineer

OpenAI co-founder Greg Brockman discusses what separates good AI engineers from traditional software engineers, with a focus on "technical humility": knowing when to trust empirical evidence over engineering intuition. His insights about checking your instincts at the door when working with AI systems offer practical wisdom for anyone building in this space. NVIDIA's Jensen Huang also makes a brief appearance.

Building AI-First Application Architecture

Technologist swyx explores how AI engineering is evolving as a discipline, examining the standard models and approaches that are emerging for building effective AI agents. If you're trying to understand where AI development practices are heading, this provides a valuable perspective on the field's direction.

Understanding Top-k Sampling in Language Models

Letitia from AI Coffee Break provides a concise explanation of top-k sampling for controlling randomness in language model generation. The video efficiently covers the key concepts without unnecessary complexity, making it a solid primer on this fundamental technique for balancing creativity and coherence in model outputs.
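
If you'd rather see the idea in code, here's a minimal sketch in plain Python. It isn't taken from the video; the function name `top_k_sample`, the `temperature` parameter, and the toy logits are my own assumptions for illustration. The idea: keep the k highest-scoring tokens, renormalize their probabilities with a softmax, and sample from that smaller set.

```python
import math
import random


def top_k_sample(logits, k, temperature=1.0, rng=random):
    """Sample a token index from the k highest-scoring logits (illustrative sketch)."""
    if not 1 <= k <= len(logits):
        raise ValueError("k must be between 1 and len(logits)")
    if temperature <= 0:
        raise ValueError("temperature must be positive")

    # Keep only the indices of the k largest logits.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]

    # Softmax weights over the surviving logits (shifted by the max for stability).
    scaled = [logits[i] / temperature for i in top]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]

    # Draw one index in proportion to its weight; choices() normalizes for us.
    return rng.choices(top, weights=weights, k=1)[0]


# Hypothetical scores for a five-token vocabulary.
logits = [2.0, 1.0, 0.5, -1.0, -3.0]
samples = [top_k_sample(logits, k=2) for _ in range(1000)]
```

With `k=2`, only the two highest-scoring tokens can ever be drawn, and with `k=1` the sampler collapses to greedy decoding. Larger values of k let more of the tail back in, which is the creativity-versus-coherence trade-off the video describes.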

AI-Powered Lighting and Rendering Breakthroughs

Károly from Two Minute Papers covers recent advances in AI-powered lighting and rendering. While most attention focuses on text and images, there's interesting work happening in 3D graphics that deserves more recognition. The video showcases how AI is making sophisticated lighting effects more accessible to creators.

📰 3 Curated Reads

From GPT-2 to gpt-oss: Analyzing the Architectural Advances

Sebastian Raschka methodically dissects architectural changes across model generations in his analysis of OpenAI's first open-weight models since GPT-2. His breakdown reveals six years of evolutionary improvements, from dropout removal to MoE implementations. The piece offers valuable insights into how leading labs actually iterate on transformer designs versus what they publish in papers.

Your Machine Learning Library

Tivadar Danka organized all his posts from The Palindrome into a searchable library covering graph theory, neural networks from scratch, the math of machine learning, and fundamental concepts. Instead of hunting through archives when you need to understand a specific topic, you can jump directly to the relevant explanations. Particularly useful if you're looking for clear mathematical foundations behind ML concepts.

GPT-5 Prompting Guide

https://cookbook.openai.com/examples/gpt-5/gpt-5_prompting_guide

OpenAI's official guidance for working with their latest model, including techniques their own engineers use internally. The guide covers practical patterns and best practices for getting the most out of GPT-5, and because it comes straight from the source, it's more credible than most prompting resources floating around.

🛠 2 Tools / Repos

GenAI Agents

https://github.com/NirDiamant/GenAI_Agents

Cut through the complexity of AI agent development with this hands-on repository. Instead of just theory, you'll find working code for everything from simple chatbots to multi-agent orchestration. It's designed to give you a solid grasp of the fundamental patterns required to build and eventually deploy robust AI agents.

Awesome Generative AI Guide

https://github.com/aishwaryanr/awesome-generative-ai-guide

A curated guide to the generative AI landscape that maintains editorial quality while staying current with rapid field developments. The resource shows clear judgment about what's actually worth exploring, avoiding the common problem of "awesome" lists that become overwhelming collections of links.

🎓 1 Pick of the Week

Claude Code Short Course

https://www.deeplearning.ai/short-courses/claude-code-a-highly-agentic-coding-assistant/

If you've tried Claude Code but found yourself fighting with it more than collaborating, this course will change that. Elie Schoppik shows you how to give Claude the right context so it actually understands your codebase instead of generating generic solutions. The three projects cover real scenarios you'll face: making sense of existing code, turning messy notebooks into proper applications, and building from design mockups. Most importantly, you'll learn how to work with multiple Claude sessions simultaneously without them stepping on each other.

Thanks for reading The Tokenizer! If you found something useful here, share it with someone who might benefit. And if you want more curated insights like this, consider subscribing to Gradient Ascent.