I didn't like the draft I wrote for this edition, so I spent a chunk of time rewriting it. I'm not entirely sure I like this one either, but I don't want to dally further. That's why you're reading this on a Sunday or later (depending on when or if you open this edition).

We're finally at the point of discussing diffusion models. I'll be breaking this topic into smaller digestible parts. A lot goes on under the hood, and it's best consumed in portions. So far, we've seen autoencoders, variational autoencoders, and generative adversarial networks. So why do diffusion models outperform these models and generate mind-blowing imagery? How do they work? How are they trained? We'll be answering all these questions over the course of this series. Let's go!

This Week on Gradient Ascent:

The intuition behind diffusion models 🌬️

[Check out] One model to bind them all 💍

[Check out] Google's new LLM on the block 🔡

[Consider reading] GPT on a budget 💸

[Definitely check out] X-Ray a diffusion model 🦴

The Sketch Artist's Guide to Diffusion Models

It's 10 pm, and your phone rings. The chief is on the other end. There's been a crime, and your expertise is needed ASAP. You throw on your jacket, grab your wallet, and rush into the chilly night. At the scene, the local beat cop explains what happened. It was a smash-and-grab. The scene? A local grocery store. The thief was smart enough to disable the surveillance camera before ransacking the store. Without the camera, there are no eyewitnesses.

As a seasoned profiler, your job is to figure out who might have committed this heinous crime. All you have are clues you can gather from the scene. You walk into the store, past glass shards and Swiss chard that have been strewn indiscriminately. Those would have been great in a warm soup. Not the shards, the chard. You look closely at the checkout counter and spot some smudgy fingerprints. The weird part is that these aren't human. Each finger is sharp, pointy, and tapers into a claw. The size of the hand is smaller than a human's. "Curious, curious," you mutter to yourself.

The register has been cleaned out. Walking past the produce aisles towards the dairy section, you notice that a shelf that once contained Twinkies is now empty. In fact, some of them lie open on the floor, half-eaten, with cream stains all around. A closer look reveals two distinct sets of prints. One that matches the hand from the cash register. Another that matches a human's. A picture begins to reveal itself. After scanning every square inch of the store, you further uncover traces of blue-colored fibers, size 12 shoe prints, and a can of black paint.

You meet the sketch artist outside to generate a profile of the suspect. "A man in a blue jacket and a raccoon, wearing a winter hat, size 12 shoes, trending on artstation," you say to the artist. She worries for your sanity. But looking at your serious expression, she gets to work and draws tentative lines and patterns. At first, there's a bit of confusion because her version of the suspects differs from yours. Naturally, transferring over imagination is hard. So, you begin to give more details, making the picture clearer and clearer for her. With each step, the sketch gets better and better. Finally, she has a really nice illustration of the suspects ready for the detectives. Two weeks later, the detectives catch the suspects. The Twinkies are saved. Thank heavens!

In the machine learning world, diffusion models are like these sketch artists. You describe what you want in words, and they use their "imagination" to create an image that matches your description. As with any sketch artist, getting the image right takes a few tries.

From words to images

Let's look at how these models work from first principles. What would you do if you had to build something that took in a sentence and produced images? What would the parts of such a black box be? First, you'd need something that could understand what the words in the sentence meant. Then, you'd also need something that could use this meaning to put together all the elements in the image. Finally, you'd need something to "paint" this composition and bring it to life. That's exactly what diffusion models consist of.

Before we get technical and jargony, let's give some names for each of these blocks – The first block is a sentence understander, the second is a collage machine, and the third is a color-by-numbers machine. Note that these are just names I'm giving them. If you don't like it, feel free to use anything that catches your fancy.

Later, we'll look at each of these blocks in more detail and how they are trained to do what they do. But, for now, we're using a magic wand and imagining them into existence.

Sentence Understander

Unfortunately, the collage machine doesn't understand English or any other human language we use to build sentences. So, the sentence understander acts like a translator. It takes human language and maps it to numbers. These numbers are called embeddings (text embeddings, to be exact).

Embeddings help carry over the meaning of the words. For example, the embedding for the word raccoon might be similar to the one for mouse, squirrel, or Guardians of the Galaxy. But, at the same time, it will be very different from the one for bag, helicopter, or pneumonoultramicroscopicsilicovolcanoconiosis.

Because words with similar meanings get similar numbers, the collage machine can work with these embeddings really well. It can thus identify all the things in the sentence description.
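If you'd like to see "similar" as an actual number, here's a minimal sketch. The three-dimensional vectors below are made up for illustration (real text encoders produce vectors with hundreds of dimensions), and cosine similarity is just one common way to compare embeddings, not necessarily what any particular model uses internally.

```python
import math

# Hypothetical, hand-picked embeddings. These values are assumptions made up
# for this example and do not come from any real text encoder.
EMBEDDINGS = {
    "raccoon": [0.9, 0.8, 0.1],
    "squirrel": [0.8, 0.9, 0.2],
    "helicopter": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


animal_score = cosine_similarity(EMBEDDINGS["raccoon"], EMBEDDINGS["squirrel"])
vehicle_score = cosine_similarity(EMBEDDINGS["raccoon"], EMBEDDINGS["helicopter"])

print(f"raccoon vs squirrel:   {animal_score:.2f}")
print(f"raccoon vs helicopter: {vehicle_score:.2f}")
```

With these toy numbers, the raccoon lands much closer to the squirrel than to the helicopter, which is the property the collage machine relies on.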

Collage Machine

The collage machine takes the output of the sentence understander and puts together a visual map for the color-by-numbers machine. Each embedding from the sentence understander gives clues to the collage machine. It then uses these clues to look up its memory bank and conjure up a visual description of the scene. For example, let's take the description we gave the sketch artist from earlier – "A man in a blue jacket and a raccoon, wearing a winter hat, size 12 shoes, trending on artstation."

The collage machine looks at the embedding for "man" and then checks its memory bank for examples it can use. It then looks at "blue jacket" and associates that this jacket must be what the man was wearing. It then comes to "raccoon," questions the man's life choices, and retrieves examples from its memory. It does this for each embedding until a rich descriptive visual map is built for the color-by-numbers machine.

The collage machine assembles all the pieces of information from its memory bank, just like a sketch artist would use their imagination before drawing. Note that I use "memory bank" and "examples" loosely here. These are technically called "latent representations." We'll look at that in detail later.

So now we have a visual description that the next block can use to "paint" the image we want.

The Color-by-Numbers Machine

Calling it a color-by-numbers machine is a bit of a disservice, but it's the simplest analogy I could think of. This block takes the visual description from the collage machine and paints a picture based on that description. Returning to our example prompt, the color-by-numbers machine knows that the man's jacket is blue. So it paints a man with a blue jacket. It knows that there's a raccoon and paints one next to the man. It knows that either he or the raccoon is wearing a winter hat. The collage machine seems to think it's the man, so the color-by-numbers machine obliges. Thus, it follows the visual description to paint the final image. Tada!

But wait!

If this is all that happens inside the diffusion model, why does it take forever when I give it a prompt?

Also, what does the color-by-numbers machine paint on?

From Noise Thou Art, and Unto Noise Shalt Thou Return

I skipped over a couple of details while explaining the blocks inside diffusion models. The first detail is that diffusion is an iterative process. It doesn't happen in a single step. I mean, it can, but the results would be terrible. Researchers are trying to get to this possibility. Diffusing in one step, I mean. Not the terrible results.

The second detail is that diffusion models don't start from a blank canvas. Instead, they begin with random noise. Yes, that's right. Like GANs and VAEs, these models also start with a noisy canvas, except for one thing.

They remove noise from the canvas at each step.

The collage machine takes the noisy canvas and decides how much noise it has to remove from it. Its goal is to bring the resulting "denoised image" on the canvas closer to the description from the sentence understander.

Rome wasn't built in a day. A canvas isn't denoised in a step.

So the collage machine repeats this process until it has a fully denoised canvas that matches the sentence. This is what the color-by-numbers machine uses to paint the final picture.

If this isn't clear, think about a sculptor chiseling away at a block of stone. Every part of the stone the sculptor removes is noise. Eventually, what is left behind is the actual image. But how do you remove noise? Easy, through subtraction.

The Art of Subtraction

"Photography is the art of subtraction" ~ me.

Photographers are taught to remove things from the frame while composing. Unlike an artist who adds things to a canvas, photographers remove distracting things like telephone poles or partially obstructing rocks and so on. Diffusion models are similar. They remove distracting noise until the final composition shines through.

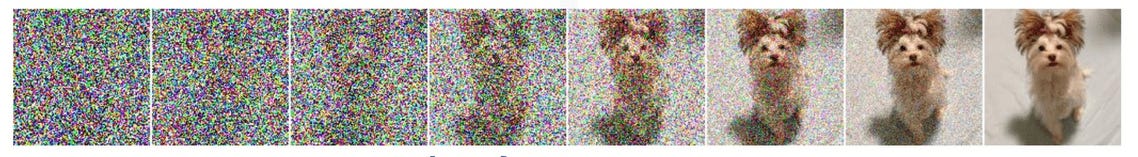

At each step, the collage machine predicts the noise that remains on the canvas. We can then subtract this prediction from the canvas to produce a less noisy image. This image then goes into the collage machine, and it guesses how much noise remains, and we rinse and repeat until we have the final "noiseless image." Here's how that looks over time:

On the left, we have pure noise. As we move towards the right, more and more noise is removed until the final image is produced. It's important to note that the color-by-numbers machine generates each image in the visualization above. On the left, the collage machine hasn't removed any noise, so the color-by-numbers machine has nothing to paint. On the right, however, the collage machine provided an excellent visual description and removed all the noise. Thus the color-by-numbers machine can paint a clear picture. A "noisy description" may not make much sense now, but we'll see what this means later.
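The predict-and-subtract loop can be sketched in a few lines. This is a toy under loud assumptions: the "canvas" is just four numbers, and `predict_noise` is a hypothetical stand-in that cheats by peeking at the target, whereas a real collage machine has to learn that guess during training. The `step_size` of 0.3 is also an arbitrary choice for the demo.

```python
import random


def predict_noise(canvas, target):
    """Hypothetical stand-in for the collage machine's noise guess.

    A real model learns to predict noise; this toy version peeks at the
    target purely so we can watch the loop do its thing.
    """
    return [c - t for c, t in zip(canvas, target)]


def denoise(canvas, target, steps=10, step_size=0.3):
    """Repeatedly subtract a fraction of the predicted noise from the canvas."""
    history = [list(canvas)]
    for _ in range(steps):
        noise = predict_noise(canvas, target)
        canvas = [c - step_size * n for c, n in zip(canvas, noise)]
        history.append(list(canvas))
    return history


def distance(a, b):
    """Total absolute difference between two canvases."""
    return sum(abs(x - y) for x, y in zip(a, b))


random.seed(0)
target = [0.0, 0.5, 1.0, 0.5]                  # the tiny four-pixel "image" we want
canvas = [random.gauss(0, 1) for _ in target]  # start from pure noise
history = denoise(canvas, target)
errors = [distance(c, target) for c in history]

for step, error in enumerate(errors):
    print(f"step {step:2d}: distance from target = {error:.4f}")
```

Each pass removes only part of the remaining noise, so the distance shrinks step by step instead of vanishing at once. That's the "Rome wasn't built in a day" part.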

So, to summarize: The sentence understander converts the text we provide (a.k.a. the prompt) into a machine-understandable format. Next, the collage machine uses this information to build a visual description by removing noise from a canvas. This visual description is then converted into an image by the color-by-numbers machine.
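For those who think better in code, here's how the three blocks might hand data to one another. Everything in this sketch is an assumption made for illustration: the function names are just my nicknames for the blocks, the "embedding" is one made-up number per word, and the "image" is a string of characters. It shows the plumbing only, not how any real model computes things.

```python
import random

SHADES = " .:-=+*#%@"  # ten character "pixels", from light to dark


def sentence_understander(prompt):
    """Toy text encoder: one made-up number per word, based on word length."""
    return [(len(word) + 0.5) / 10 for word in prompt.split()]


def collage_machine(text_embedding, steps=25, seed=0):
    """Toy denoiser: start from noise and nudge the canvas toward the embedding."""
    rng = random.Random(seed)
    canvas = [rng.gauss(0, 1) for _ in text_embedding]
    for _ in range(steps):
        canvas = [c - 0.3 * (c - e) for c, e in zip(canvas, text_embedding)]
    return canvas


def color_by_numbers(description):
    """Toy decoder: turn each number in the description into a character pixel."""
    pixels = []
    for value in description:
        index = min(max(int(value * len(SHADES)), 0), len(SHADES) - 1)
        pixels.append(SHADES[index])
    return "".join(pixels)


def generate(prompt):
    """Chain the three blocks: words -> embeddings -> description -> image."""
    embedding = sentence_understander(prompt)
    description = collage_machine(embedding)
    return color_by_numbers(description)


image = generate("a man in a blue jacket and a raccoon")
print(repr(image))
```

Notice that only the collage machine loops. The sentence understander and the color-by-numbers machine each run once, which is one reason most of the waiting happens in the middle block.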

Unsolved Mystery

All of this is fine. But how does the collage machine know how much noise to remove and from where? How does the sentence understander communicate so effortlessly with the collage machine? How does the color-by-numbers machine know how to use this visual description? Why are these models called diffusion models? Why is the answer to the meaning of life 42?

We'll look at the answers to these questions over the subsequent few editions. But, for now, I hope you understand how diffusion models generate images from text.

P.S.: Remember to lock up your Twinkies.

Resources To Consider:

Meta's Multimodal Masterpiece

Link: https://imagebind.metademolab.com/

Paper: https://facebookresearch.github.io/ImageBind/paper

One model to bind them all. Meta's latest model, ImageBind, combines six different modalities, including images, text, and audio. The link above leads to a demo you can check out and play with. It's mind-boggling that they achieved this without explicit supervision.

Google's PaLM 2

Link: https://blog.google/technology/ai/google-palm-2-ai-large-language-model/

Google's most recent I/O keynote event was almost entirely about AI. Their next-generation language model, PaLM 2, was one of the many announcements. This multilingual model is trained on over 100 languages and can reason and code. Per their announcement, it powers over 25 products and features, including Bard and Codey, their answer to Copilot!

GPT on a Shoestring Budget

Paper: https://arxiv.org/abs/2305.05176

In this paper from Stanford, the authors detail strategies to reduce the inference costs associated with LLMs. They propose FrugalGPT, which learns which combinations of LLMs to use for different queries. Per their paper, it can match the performance of the best single LLM (GPT-4) with up to 98% lower cost, or improve accuracy over GPT-4 by 4% at the same cost. That's worth checking out.

Visual Explanation for Text-to-Image Stable Diffusion

Paper: https://arxiv.org/abs/2305.03509

Since we're learning how diffusion models work, this paper and tool come in at the best possible time. Diffusion Explainer helps us understand how our text prompt gets converted into an image. If you want to X-ray the Stable Diffusion model, this tool is for you. A must-check-out for anyone interested in these models.