GANstruction: Building your own Generative Adversarial Network

A journey from autoencoders through stable diffusion

Before we start, I want to sincerely thank all the kind readers who've signed up to be beta readers. I'll drip-feed drafts over the next few weeks, so keep your eyes open. You folks are awesome!

Welcome to our ongoing series on generative AI. This week, we'll continue our exploration of generative adversarial networks. First, we'll build one ourselves and then look at some of the landmark papers that pushed what GANs could do.

This Week on Gradient Ascent:

Build your own Generative Adversarial Networks (GANs) 👮🦹

[Consider reading] A series of landmark GAN papers 🎨

[Check out] ChatGPT Prompt engineering course 🤖

GANs Unmasked - The Math, Code, and Evolution of AI's Master Forgers

Last week, we explored the intuition behind Generative Adversarial Networks (GANs). In case you missed it, here's a quick recap. A GAN has two networks, the generator and the discriminator, locked in a never-ending game of cat and mouse. The generator produces samples (images, audio, etc.) that it hopes will pass for real-world examples. The discriminator stays alert and learns to spot the fakes. Each network tries to outsmart the other, and eventually the generator fools the discriminator. But training two networks at once is challenging. Let's see how we can do that successfully and without tears.

In this edition, we'll dive deeper into the inner workings of GANs, focusing on the code, equations, and evolution of this fascinating technology. So, let's roll up our sleeves and get our hands dirty with the math and code behind GANs!

Anatomy of a GAN

We’ll use a Deep Convolutional Generative Adversarial Network (DCGAN) as an example to illustrate the various building blocks of a GAN. The DCGAN leverages convolutional layers to generate more realistic images. Here's what each block looks like in code.

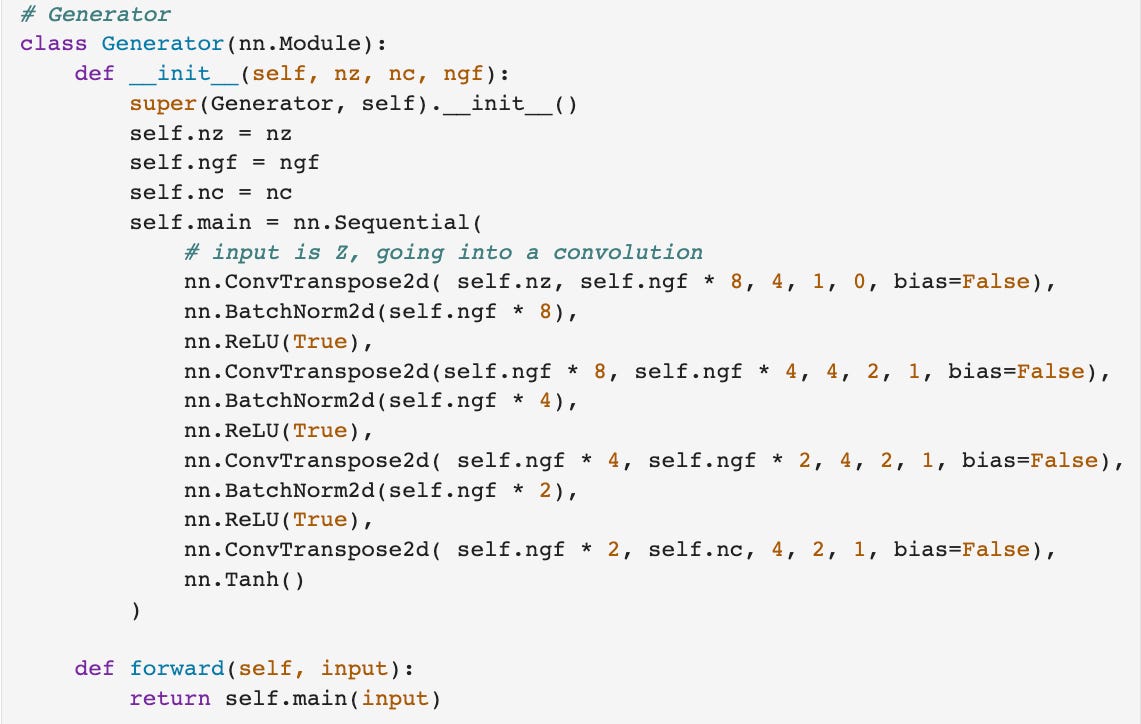

Generator:

The generator's role is to create convincing fake images that can fool the discriminator. It takes random noise as input and transforms it into a realistic image using a series of transposed convolutional layers. A transposed convolution increases the spatial size of its input, so stacking several of them expands the small noise vector step by step. As the noise moves through the network, it is reshaped into an image.

TL;DR Haiku:

Canvas starts as noise,

From chaos, beauty emerges,

GAN's art takes form.
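
To make the generator concrete, here is a minimal PyTorch sketch of a DCGAN-style generator. It is not necessarily the exact network used for this post: the 100-dimensional noise vector, the 64 base feature maps, and the 32x32 RGB output (the size of CIFAR-10 images) are all assumptions chosen for illustration.

```python
import torch
import torch.nn as nn

NZ = 100   # length of the input noise vector (assumed)
NGF = 64   # base number of generator feature maps (assumed)
NC = 3     # output channels: 3 for RGB images


class Generator(nn.Module):
    def __init__(self, nz=NZ, ngf=NGF, nc=NC):
        super().__init__()
        self.main = nn.Sequential(
            # noise (nz, 1, 1) -> (ngf*4, 4, 4)
            nn.ConvTranspose2d(nz, ngf * 4, kernel_size=4, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(ngf * 4),
            nn.ReLU(inplace=True),
            # -> (ngf*2, 8, 8)
            nn.ConvTranspose2d(ngf * 4, ngf * 2, kernel_size=4, stride=2, padding=1, bias=False),
            nn.BatchNorm2d(ngf * 2),
            nn.ReLU(inplace=True),
            # -> (ngf, 16, 16)
            nn.ConvTranspose2d(ngf * 2, ngf, kernel_size=4, stride=2, padding=1, bias=False),
            nn.BatchNorm2d(ngf),
            nn.ReLU(inplace=True),
            # -> (nc, 32, 32); tanh squashes pixel values into [-1, 1]
            nn.ConvTranspose2d(ngf, nc, kernel_size=4, stride=2, padding=1, bias=False),
            nn.Tanh(),
        )

    def forward(self, z):
        return self.main(z)


netG = Generator()
noise = torch.randn(16, NZ, 1, 1)   # a batch of 16 noise vectors
fake = netG(noise)
print(fake.shape)  # torch.Size([16, 3, 32, 32])
```

Each stride-2 transposed convolution doubles the height and width, which is how a 1x1 "pixel" of noise grows into a 32x32 image.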

Discriminator:

The discriminator acts as the detective, tasked with identifying whether an image is real or a fake created by the generator. It consists of convolutional layers that analyze the input image and output a probability indicating whether it is real or fake.

TL;DR Haiku:

A keen eye to judge,

Real or fake, truth revealed,

Discerning its game.
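
The discriminator can be sketched as a mirror image of the generator. Again, this is a hedged PyTorch illustration rather than the post's exact code: the 32x32 RGB input size and the 64 base feature maps are assumptions.

```python
import torch
import torch.nn as nn


class Discriminator(nn.Module):
    def __init__(self, nc=3, ndf=64):
        super().__init__()
        self.main = nn.Sequential(
            # image (nc, 32, 32) -> (ndf, 16, 16)
            nn.Conv2d(nc, ndf, kernel_size=4, stride=2, padding=1, bias=False),
            nn.LeakyReLU(0.2, inplace=True),
            # -> (ndf*2, 8, 8)
            nn.Conv2d(ndf, ndf * 2, kernel_size=4, stride=2, padding=1, bias=False),
            nn.BatchNorm2d(ndf * 2),
            nn.LeakyReLU(0.2, inplace=True),
            # -> (ndf*4, 4, 4)
            nn.Conv2d(ndf * 2, ndf * 4, kernel_size=4, stride=2, padding=1, bias=False),
            nn.BatchNorm2d(ndf * 4),
            nn.LeakyReLU(0.2, inplace=True),
            # -> (1, 1, 1); sigmoid turns the score into a probability
            nn.Conv2d(ndf * 4, 1, kernel_size=4, stride=1, padding=0, bias=False),
            nn.Sigmoid(),
        )

    def forward(self, x):
        # Flatten (batch, 1, 1, 1) to (batch,): one "is it real?" probability per image
        return self.main(x).view(-1)


netD = Discriminator()
images = torch.randn(16, 3, 32, 32)   # stand-in batch; real code would load CIFAR-10
probs = netD(images)
print(probs.shape)  # torch.Size([16])
```

Where the generator upsamples with transposed convolutions, the discriminator downsamples with ordinary strided convolutions until a single number per image is left.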

Training loop:

The training loop consists of alternating steps in which the discriminator and generator update their weights based on their respective loss functions. Since both networks are trained simultaneously, we must carefully handle these updates. Thus, the training loop can be split into two phases. In the first phase, only the discriminator's weights are updated. In the second, only the generator's weights are updated.

TL;DR Haiku:

Two phases unfold,

Weights refined in careful turns,

Equilibrium.

Discriminator training step

Note how the discriminator (netD below) is given both a set of real examples and fake examples. Over the course of training, it is taught what real examples look like and what fake examples look like. After it makes guesses on both types of examples, it's evaluated on how it did via the loss function (criterion below). Notice that the error is the sum of its performance on the real as well as the fake examples (errD below). The optimizer then updates the weights of the discriminator based on the feedback from the loss function.
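
Here is a minimal sketch of what such a discriminator step could look like in PyTorch, using the names from the paragraph above (netD, criterion, errD). To keep the example short and quick to run, netG and netD are tiny fully connected stand-ins rather than a real DCGAN, and the `discriminator_step` helper, the sizes, and the Adam settings are assumptions for illustration.

```python
import torch
import torch.nn as nn


def discriminator_step(netD, netG, optimizerD, criterion, real, noise):
    """Run one discriminator update and return the combined loss errD."""
    batch_size = real.size(0)
    netD.zero_grad()

    # Real examples: the target label is 1 ("real")
    errD_real = criterion(netD(real), torch.ones(batch_size))
    errD_real.backward()

    # Fake examples: the target label is 0 ("fake").
    # detach() stops gradients from flowing back into the generator.
    fake = netG(noise).detach()
    errD_fake = criterion(netD(fake), torch.zeros(batch_size))
    errD_fake.backward()

    # Total error is the sum over real and fake batches
    errD = errD_real + errD_fake
    optimizerD.step()   # only the discriminator's weights are updated
    return errD.item()


# Tiny stand-in networks (assumed, not a DCGAN) so the sketch runs in milliseconds
torch.manual_seed(0)
nz, data_dim = 8, 16
netG = nn.Sequential(nn.Linear(nz, data_dim), nn.Tanh())
netD = nn.Sequential(nn.Linear(data_dim, 1), nn.Sigmoid(), nn.Flatten(0))

criterion = nn.BCELoss()
optimizerD = torch.optim.Adam(netD.parameters(), lr=2e-4, betas=(0.5, 0.999))

real = torch.randn(32, data_dim)   # stand-in for a batch of real data
noise = torch.randn(32, nz)
errD = discriminator_step(netD, netG, optimizerD, criterion, real, noise)
print(f"errD = {errD:.4f}")
```

The `detach()` call is the important detail: the generator produces the fakes, but during this phase it learns nothing from them.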

Generator training step

The generator is trained in the second phase. During this phase, the discriminator's weights are kept fixed. The generator is evaluated on how well it fooled the discriminator. As before, the generator's optimizer updates the generator's weights based on the feedback it gets from the loss function. The two phases alternate throughout training. Eventually, the generator learns to fool the discriminator.
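
The generator phase can be sketched the same way. As with the discriminator step, the networks here are tiny fully connected stand-ins, and the `generator_step` helper and hyperparameters are assumptions for illustration. Note one common choice baked into this sketch: the fakes are scored against the "real" label, so the generator minimizes -log D(G(z)) (the so-called non-saturating loss) rather than literally minimizing log(1 - D(G(z))).

```python
import torch
import torch.nn as nn


def generator_step(netD, netG, optimizerG, criterion, noise):
    """Run one generator update and return the generator loss errG."""
    netG.zero_grad()
    fake = netG(noise)

    # The generator "wins" when the discriminator calls its fakes real,
    # so the fakes are scored against the label 1.
    target = torch.ones(noise.size(0))
    errG = criterion(netD(fake), target)

    # Gradients flow through netD back into netG ...
    errG.backward()
    # ... but optimizerG only holds netG's parameters, so netD stays fixed.
    optimizerG.step()
    return errG.item()


# Tiny stand-in networks (assumed, not a DCGAN)
torch.manual_seed(0)
nz, data_dim = 8, 16
netG = nn.Sequential(nn.Linear(nz, data_dim), nn.Tanh())
netD = nn.Sequential(nn.Linear(data_dim, 1), nn.Sigmoid(), nn.Flatten(0))

criterion = nn.BCELoss()
optimizerG = torch.optim.Adam(netG.parameters(), lr=2e-4, betas=(0.5, 0.999))

noise = torch.randn(32, nz)
errG = generator_step(netD, netG, optimizerG, criterion, noise)
print(f"errG = {errG:.4f}")
```

In a full training loop, you would call the discriminator step and then the generator step on every batch, which gives the alternating two-phase schedule described above.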

Equations and Math: GANs Unraveled

Now that we have a basic understanding of the code, let's dive into the math behind GANs. The entirety of training a GAN can be summarized by this menacing-looking equation. Thankfully, I've done a squiggly deconstruction for you so you can understand what it means.

Squiggly Deconstruction:

Follow the colored numbers to understand the math 🙂

This is called a minimax game. The discriminator tries to maximize the probability of correctly guessing which examples are real and which are fake. The generator attempts to minimize the probability that the discriminator guesses that its images are fake. This battle continues until the generator produces examples that fool the discriminator.
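
For reference, the minimax game is usually written with the value function from the original GAN paper (Goodfellow et al., 2014). The notation below follows that paper: x is a real example drawn from the data distribution, z is a noise vector drawn from a prior, D(x) is the discriminator's probability that x is real, and G(z) is the generator's output.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The first term rewards the discriminator for assigning high probability to real examples, and the second rewards it for assigning low probability to fakes. The generator only appears in the second term, which it tries to push down by making D(G(z)) as close to 1 as it can.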

Results

Here are the results of training our GAN on the CIFAR-10 dataset. On the left are some actual images from the dataset. On the right are our generator's results after training.

Here's the most interesting part of this whole process.

The generator never saw any images from the actual dataset.

It learned to produce similar images purely from the feedback it got from the discriminator. How mind-blowing is that?

Here is the evolution of the generator's artistic prowess. Initially, it starts from random noise, but see how it evolves to produce incredible imagery. Not bad, eh?

GANs have rapidly evolved over the years. The section below lists some seminal papers that made significant strides in generative AI using GANs. I hope that by understanding the code, equations, and critical advancements, you can appreciate the intricacies of this powerful technology and its potential applications. Next time, we'll look into the workings of a modern blockbuster—diffusion models.

P.S.: Here's the entire code for you to play with.

Resources To Consider:

The Evolution of GANs

Since their inception, GANs have evolved significantly, with numerous papers and architectures addressing various challenges and improving their performance. Let's take a brief tour of some of the most important developments over the years.

Original GAN (2014): Ian Goodfellow et al. introduced the concept of Generative Adversarial Networks, laying the foundation for future research in this area.

Link: https://arxiv.org/abs/1406.2661

DCGAN (2015): Radford et al. introduced the Deep Convolutional Generative Adversarial Network (DCGAN), which incorporated convolutional layers in both the generator and discriminator, enabling GANs to generate more realistic images.

Link: https://arxiv.org/abs/1511.06434

Wasserstein GAN (WGAN, 2017): Arjovsky et al. addressed the issue of training instability and mode collapse by using the Wasserstein distance for better training convergence.

Link: https://arxiv.org/abs/1701.07875

CycleGAN (2017): Zhu et al. introduced CycleGAN, which translates images from one domain to another without needing paired training examples.

Link: https://arxiv.org/abs/1703.10593

Progressive GAN (2017): Karras et al. proposed a method to train GANs by progressively increasing the resolution of the images, resulting in high-quality image generation.

Link: https://arxiv.org/abs/1710.10196

Spectral Normalization for GANs (2018): Miyato et al. introduced spectral normalization, a technique that stabilizes the training of the discriminator.

Link: https://arxiv.org/abs/1802.05957

BigGAN (2018): Brock et al. presented a scalable GAN architecture that generates high-resolution, high-quality images by employing many layers and a hierarchical latent space.

Link: https://arxiv.org/abs/1809.11096

StyleGAN (2018) & StyleGAN2 (2019): Karras et al. introduced the StyleGAN architecture, which allows fine-grained control over the generated images' style, followed by StyleGAN2, which improved upon the original architecture by addressing its issues.

Links: https://arxiv.org/abs/1812.04948

https://arxiv.org/abs/1912.04958

Prompt Engineering Course

Link: https://www.deeplearning.ai/short-courses/chatgpt-prompt-engineering-for-developers/

DeepLearning.AI has released a free ChatGPT prompt engineering course in partnership with OpenAI. Consider taking this course and leveling up your prompting skills to build apps.