The data strikes back: "Practically" Building an Image Classifier - Episode II

Why knowing your dataset matters a lot in machine learning

This Week on Gradient Ascent:

The data strikes back 🤖

Your weekly machine learning doodle 🎨

[Use] Debugging made fun 🐞

[Check out] Write AI-powered Shakespeare plays 🎭

[Watch] Understanding pretraining - the complete guide 📽️

[Consider reading] Sparse models with dense benefits 📜

"Practically" Building an Image Classifier - Episode II:

If you're just joining us and missed last week, here's a recap:

You've joined Petpoo Inc, a pet shampoo company, as one of their first computer vision engineers. Your first assignment is to design a simple pet classifier that distinguishes between cats and dogs. Based on the classifier's results, you can recommend custom shampoos for pets. You receive a dataset containing ~7500 images of cats and dogs from your manager and get to work. Your first experiment without transfer learning seems to confuse dogs with cats a lot. Thinking it to be a case of insufficient data, you use transfer learning and train a second model. This achieves state-of-the-art results. Happy with your work, you leave for home, only to wake up in the middle of the night. There's a big mistake you've made while inspecting the data. Accuracy might be a bad metric to use…

Note: Code for this week's post can be found here

The next day, you rush to work to check whether your intuition was right. You had inspected sample images and calculated the total size of the dataset, but in your rush to get results quickly, you forgot to check how many cat and dog images there were. The dataset was originally designed to classify different breeds of dogs and cats. It may have been balanced (similar number of examples for each class) for that task.

You never checked if it had a similar number of images for cats vs dogs. You type feverishly, and the deafening silence that follows the clackety-clack of the keys confirms your suspicions.

Cats: 2400, or 32.5% of the total data

Dogs: 4990, or 67.5% of the total data
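A check like this takes only a few lines. Here's a minimal sketch in plain Python, assuming you already have one label per image in a list. The `labels` list below is a hypothetical stand-in built from the counts quoted above, not the actual dataset loader.

```python
from collections import Counter


def class_distribution(labels):
    """Return {class: (count, percent_of_total)} for an iterable of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {cls: (n, 100.0 * n / total) for cls, n in counts.items()}


# Hypothetical label list: in practice you would derive one label per image
# from your dataset's file names or annotation file.
labels = ["cat"] * 2400 + ["dog"] * 4990

for cls, (n, pct) in sorted(class_distribution(labels).items()):
    print(f"{cls}: {n} images ({pct:.1f}% of the total)")
```

Running this before any training would have flagged the imbalance on day one.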

That explains why the first model you trained sucked. The data imbalance confused the model. There were more than twice as many dogs as cats in the dataset. The model couldn't gather enough information to distinguish cats and dogs because most of the images it saw were dogs. But why did the second model you trained work almost flawlessly? You initialized it with weights that were trained on 1.2+ million images, many of which were of dogs and cats.

Problem solved! No worries, right? Not exactly. Transfer learning (what you did for the second model) doesn't always work. Transfer learning might fail when there's a domain mismatch between the pretraining dataset (what the model was originally trained on) and the target dataset (what you are training it on). You got lucky because the pretraining dataset had a similar distribution to the pets dataset you were working with (phew!).

Here's the next mistake: defaulting to accuracy as a metric. Look at the confusion matrices side by side. On the left, you have your original experiment, and on the right, you have the transfer learning experiment. For reference, the rows are the ground truth. The first row corresponds to actual images of dogs, and the second row corresponds to actual images of cats. The first column represents what the model *thinks* are dogs and the second column represents what the model considers to be cats.

If you looked at the raw accuracy numbers, you'd have seen a score of 86% accuracy without transfer learning and a score of 99.7% with transfer learning. Not too bad, right?

However, in the confusion matrix on the left, you can see that 44 dogs were classified as cats by the model, and 162 cats were classified as dogs. That's a huge problem you'd have missed by blindly relying on accuracy alone. Why does this happen?

Imagine a worst-case scenario where you only had 90 dog images and 10 cat images and no access to pretrained weights. If you guessed dog as the answer for all 100 images, you'd have an accuracy of 90%. You'd also have a completely useless model.
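The worst-case scenario above is easy to reproduce. This is a small sketch in plain Python; the `accuracy` and `recall` helpers are written here for illustration and aren't taken from the post's code.

```python
# 90 dog images and 10 cat images, as in the scenario above.
y_true = ["dog"] * 90 + ["cat"] * 10
# A "model" that ignores its input and always answers dog.
y_pred = ["dog"] * len(y_true)


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the ground truth."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, cls):
    """Fraction of actual `cls` examples that were predicted as `cls`."""
    preds_for_cls = [p for t, p in zip(y_true, y_pred) if t == cls]
    return sum(p == cls for p in preds_for_cls) / len(preds_for_cls)


print(f"accuracy:   {accuracy(y_true, y_pred):.2f}")       # 0.90
print(f"dog recall: {recall(y_true, y_pred, 'dog'):.2f}")  # 1.00
print(f"cat recall: {recall(y_true, y_pred, 'cat'):.2f}")  # 0.00
```

The accuracy looks respectable, yet the model never finds a single cat. Looking at per-class recall exposes that immediately.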

Thus, on another occasion, using accuracy as a metric would have hidden this fundamental flaw in your model until it was too late. I mean, what if chihuahua-toting socialite Haris Pilton had used this, found it useless, and then condemned the product on her social media channels?

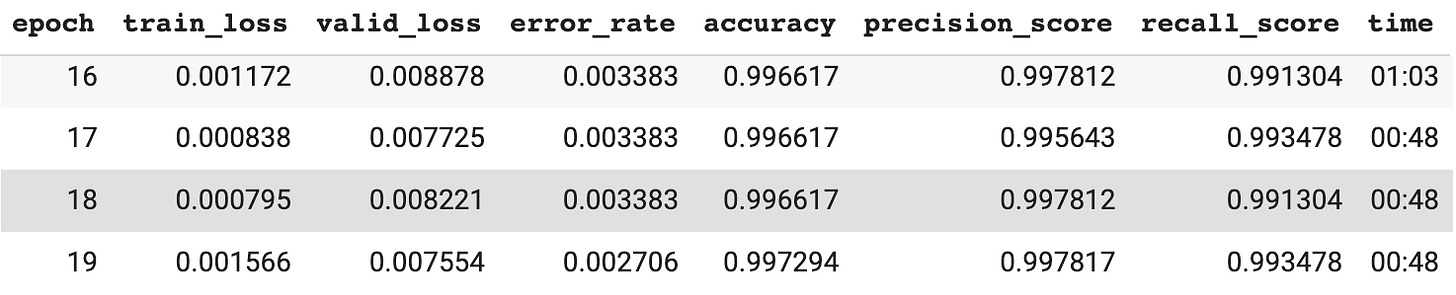

As you thank your lucky stars and consolidate results from transfer learning, your manager comes by. "Hey there! How's your work coming along?", he asks. "Good, I think I've got something.", you reply nervously. You show him your work with transfer learning and the 0.003 error rate. He is floored. "How soon can we build a demo for this?", he asks. "I can start putting one together this week", you respond.

"Our marketing team has connections with top influencers like Haris Pilton, and we'd like for them to try this out and give us feedback.", he grins. "Umm… Ok", you gulp as you think back to your gaffe. "Alright, let's aim for the end of next week. Chop Chop!", he says, patting you on the shoulder, walking away with delight.

The scribble of pen on paper is punctuated by pregnant pauses. Even though you're working on designing the app, your thoughts are elsewhere. What should I have used to evaluate the model? How could I have balanced this wonky dataset? Why did I miss these basic steps?

Over the next few days, you build a simple app interface to test the model and see how it works on images from the real world. After all, what's the point of training a model if you can't use it for practical applications?

Happy with the initial prototype, you divert your attention to the pressing questions in the back of your head. Opening up a reference textbook, you look through F1-score, ROC, precision, and recall, and all those ideas come flooding back. You rerun the experiments, but in addition to measuring accuracy, you also measure precision and recall. The results make way more sense now: the precision and recall numbers are low for the model without transfer learning, while the accuracy hides these flaws. On the other hand, precision and recall are really high for the transfer-learned model.
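To see how precision and recall fall out of a confusion matrix, here's a rough sketch in plain Python. The off-diagonal counts (44 and 162) are the ones quoted earlier for the model without transfer learning. The diagonal counts (954 and 318) are assumptions picked for illustration so that overall accuracy lands near 86%; they are not the real experiment's numbers.

```python
def per_class_metrics(matrix, class_names):
    """Compute precision, recall, and F1 for each class.

    matrix[i][j] is the number of examples whose true class is i and whose
    predicted class is j (rows are ground truth, columns are predictions).
    """
    metrics = {}
    for k, name in enumerate(class_names):
        true_pos = matrix[k][k]
        actual = sum(matrix[k])                    # row total
        predicted = sum(row[k] for row in matrix)  # column total
        precision = true_pos / predicted if predicted else 0.0
        recall = true_pos / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[name] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


def accuracy(matrix):
    """Correct predictions (the diagonal) divided by all predictions."""
    correct = sum(matrix[k][k] for k in range(len(matrix)))
    return correct / sum(sum(row) for row in matrix)


# Rows: actual dogs, actual cats. Columns: predicted dog, predicted cat.
# 44 and 162 come from the post; 954 and 318 are ASSUMED for illustration.
no_transfer = [[954, 44],
               [162, 318]]

print(f"accuracy: {accuracy(no_transfer):.3f}")
for name, m in per_class_metrics(no_transfer, ["dog", "cat"]).items():
    print(f"{name}: precision={m['precision']:.3f} "
          f"recall={m['recall']:.3f} f1={m['f1']:.3f}")
```

Under these assumed counts, accuracy is about 0.86 while cat recall is only about 0.66, meaning roughly a third of the cats get labeled as dogs. That is the kind of gap a single accuracy number hides.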

You decide to write a checklist of how one should train a model and evaluate it - just in case you or someone else on your team needs a reference in the future.

But before you can start, your manager comes by. You show him the app-in-progress and he's delighted. "This is great! Our marketing team spoke to some of the influencers, and they'd like the app to be even more customized - recommendations per breed. I tried talking them out of it, but they've committed to having that ready when the influencers visit HQ by the end of next week. Think you can have that ready by then? I understand this is going to take some hardcore engineering.", he says apologetically. "Granular classification? By the end of next week? That's a tough ask. Can I have another pair of hands to work with me on this?", you ask, surprised by the sudden change in requirements and the shrinking timeline. "Umm…", he pauses...

(The conclusion of this series will drop sometime in 2023 - It's still in pre-production)

Here are my questions for you:

How would you handle this new task? What do you think the best approach is given these constraints? What should you be mindful of?

There are no right answers, only tradeoffs. Drop me a note with your ideas or leave a comment on this post.

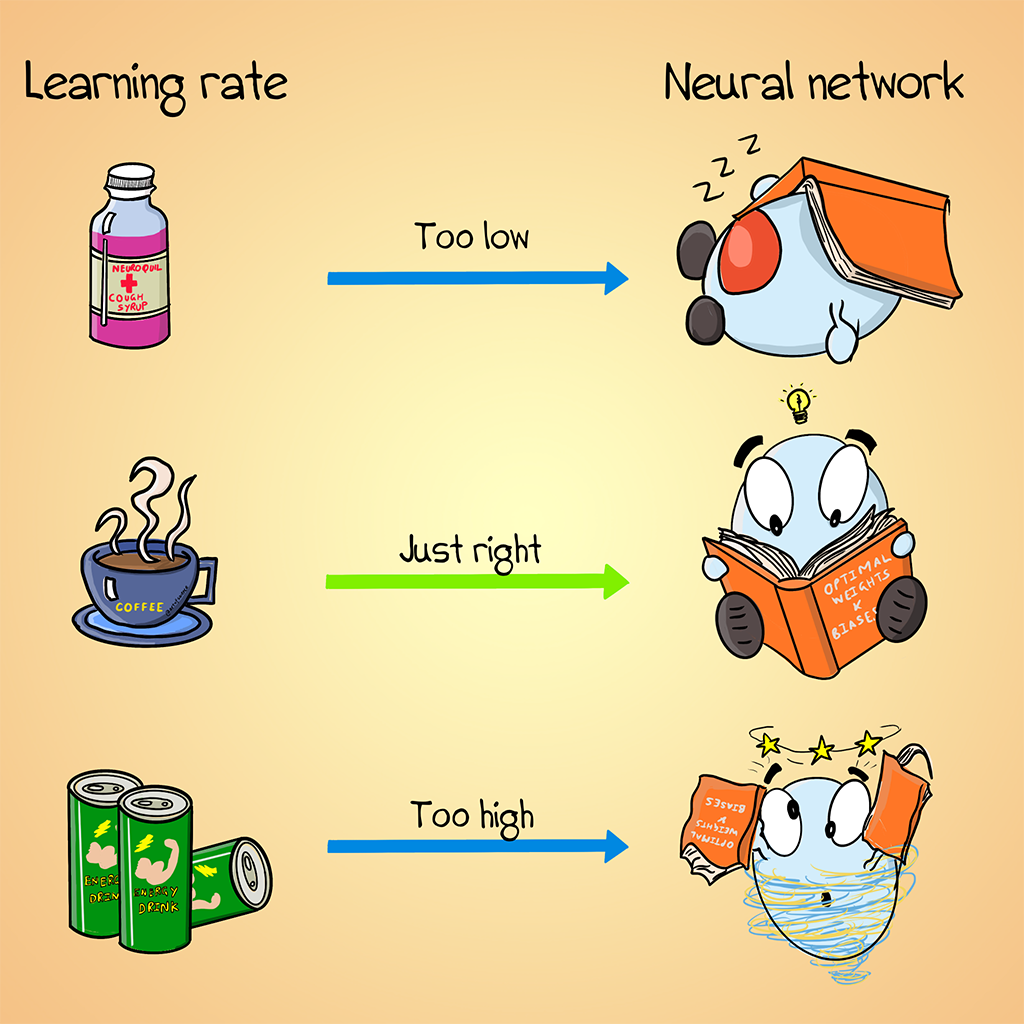

Poorly Drawn Machine Learning:

Learn more about learning rate here

Resources To Consider:

Lovely Tensors - debugging made fun:

Project Page: https://github.com/xl0/lovely-tensors

We've all had our fair share of frustrations debugging neural networks. Deep learning fails silently, and oftentimes, we resort to printing out tensor values to see what's wrong. That gives you a giant pile of goo that you need to sift through to find answers. Wouldn't it be nice if you could quickly get the shape, size, statistics, range, and other information about said tensors instead? That's exactly what Lovely Tensors does. It's going to be a lifesaver the next time you debug PyTorch code.
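To make the idea concrete, here's a toy sketch of what a one-line tensor summary might look like. It works on plain nested Python lists and is not the Lovely Tensors API; `shape_of`, `flatten`, and `summarize` are hypothetical helpers written only for illustration. See the project page above for the library's actual usage.

```python
import math


def shape_of(nested):
    """Shape of a rectangular nested list, e.g. [[1, 2], [3, 4]] -> (2, 2)."""
    shape = []
    while isinstance(nested, list):
        shape.append(len(nested))
        nested = nested[0] if nested else None
    return tuple(shape)


def flatten(nested):
    """Yield every scalar in a nested list as a float."""
    if isinstance(nested, list):
        for item in nested:
            yield from flatten(item)
    else:
        yield float(nested)


def summarize(nested):
    """One-line summary: shape, element count, range, mean, std, NaN/inf flags."""
    values = list(flatten(nested))
    finite = [v for v in values if math.isfinite(v)]
    parts = [f"shape={shape_of(nested)}", f"n={len(values)}"]
    if finite:
        mean = sum(finite) / len(finite)
        std = math.sqrt(sum((v - mean) ** 2 for v in finite) / len(finite))
        parts.append(f"range=[{min(finite):.3f}, {max(finite):.3f}]")
        parts.append(f"mean={mean:.3f} std={std:.3f}")
    if any(math.isnan(v) for v in values):
        parts.append("NaN!")
    if any(math.isinf(v) for v in values):
        parts.append("inf!")
    return " ".join(parts)


# Hypothetical activations with a NaN hiding in them.
activations = [[0.5, -1.25, 2.0], [float("nan"), 0.0, 3.75]]
print(summarize(activations))
```

A summary like this surfaces a stray NaN or an exploding range at a glance, instead of leaving you to spot it in a wall of printed numbers.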

AI-powered theatre and film scripts

Project Page: https://deepmind.github.io/dramatron/

Dramatron is a new tool for theatre and film writers that I find both useful and hilarious. Experts have long been complaining about using language models to retrieve factual information as they can sometimes make stuff up. Instead of replacing Google as the next search engine, this language model is actually designed to make stuff up. Here is the tweet announcing the launch of the tool by DeepMind.

ICML Workshop on Pretraining

Workshop link: https://icml.cc/virtual/2022/workshop/13457

A great series of videos and lectures from the ICML workshop on pretraining. Taken together, these talks will give you a solid picture of where pretraining research currently stands. Check out the videos in the link above.

Sparse mixture of experts - lower cost + high performance?

Paper: https://arxiv.org/abs/2212.05055

Training large models is becoming increasingly prohibitive in terms of both cost and time. In this paper, the authors recycle some of the trained weights to get impressive results while using only ~50% of the initial sunk cost. Check out this explainer thread from the first author of the paper below: